In the Beyond Chatbots series so far, we've explored how personalisation, integrations, proactivity, and personality can transform simple generative AI chatbots into more sophisticated digital companions. However, as these systems become more integrated into our lives, reliability becomes increasingly important. Here, I’ll look at the crucial role of fact-checking in ensuring digital companions are not just intelligent, but also trustworthy.

The need for robust fact-checking mechanisms in generative AI systems has never been more pressing. As we rely more on AI for information and decision-making support, the potential impact of misinformation grows with it. From influencing personal choices to shaping public opinion, the consequences of unreliable AI can be far-reaching and profound.

In this post, I’ll explore the persistent challenge of generative AI hallucinations, examine current approaches to AI-assisted search and fact-checking, and discuss strategies for implementing effective fact-checking in digital companions. We'll also look at the challenges and ethical considerations in this important area of AI development.

🤔 The Persistent Challenge of AI Hallucinations

One of the most significant challenges in developing reliable AI systems is hallucination. Unlike traditional software systems that store and retrieve information, Large Language Models (LLMs) work more like human memory: they generate responses based on patterns learned from vast amounts of training data. This approach allows for impressive flexibility and creativity, but it also makes errors more likely.

"AI hallucinations are not just a temporary glitch, but a fundamental challenge rooted in how LLMs process and generate information."

Hallucinations occur when a generative AI model produces information that seems plausible but is factually incorrect or entirely fabricated. These can range from minor inaccuracies to completely false statements. For example, an AI might confidently state that "The Eiffel Tower was built in 1896" when it was actually completed in 1889, or it might invent a non-existent historical event.

The impact of these hallucinations can be significant:

Erosion of Trust: When users encounter incorrect information, it can quickly erode their trust in the AI system and, by extension, the company or service providing it.

Spread of Misinformation: Online, information spreads rapidly, so AI-generated misinformation can quickly proliferate, potentially influencing public opinion or decision-making.

Potential for Harm: In critical applications like healthcare or financial advice, hallucinations could lead to harmful decisions or actions.

Increased Cognitive Load: Users may feel the need to fact-check every AI response, defeating the purpose of using AI as a time-saving tool.

Recent high-profile incidents have highlighted the severity of this issue. In one alarming case reported by The Washington Post, a young lawyer named Zachariah Crabill used ChatGPT to write a legal motion, only to discover that the AI had fabricated several fake lawsuit citations. This error led to Crabill being reported to a statewide office for attorney complaints and ultimately losing his job. In another incident, Google's search AI incorrectly claimed that eggs could be melted, citing information generated by ChatGPT on Quora as its source. This misinformation was briefly featured in Google's search results, demonstrating how AI hallucinations can propagate across platforms and potentially mislead users on a large scale.

These incidents underscore the critical need for robust fact-checking mechanisms in AI systems, especially as they evolve into more sophisticated digital companions. Later in this post, we'll explore some current approaches to addressing this challenge in AI-assisted search and information retrieval.

🤝 The Need for Reliable Digital Companions

As we develop new generative AI features that take us from simple chatbots to more sophisticated digital companions, reliability becomes critical. Digital companions won’t just be novelty tools; they will increasingly become integral parts of our daily lives, influencing decisions and shaping our understanding of the world. This transition brings with it a growing responsibility to ensure that the information they provide is accurate and trustworthy.

"The evolution of digital companions from novelty to necessity requires their reliability and trustworthiness to evolve in parallel."

The relationship between users and their digital companions will be built on a foundation of trust. As companions become more sophisticated and are entrusted with more tasks, maintaining this trust becomes not just important, but essential. Users need to feel confident that when they turn to their digital companion for support in decision-making, whether for personal, financial, or educational purposes, the guidance they receive is factual and grounded in reality. Reliability matters in several ways:

Trust and Credibility: For digital companions to be truly useful, users need to trust them. Consistent accuracy builds this trust, while repeated errors or misinformation can quickly erode it, and the stakes only rise as these systems take on more critical tasks.

Decision Support: In the future, many users will rely on digital companions for information that informs important decisions, whether personal, professional, or financial. Inaccurate information could lead to poor choices with real-world consequences.

Combating Misinformation: Because misinformation spreads rapidly online, digital companions have the potential to be powerful tools for fact-checking and truth-seeking for their users – but only if they themselves are reliable.

Ethical Responsibility: There is also an ethical obligation to ensure that digital companions do more good than harm. This includes making sure they're not inadvertently spreading false information.

As we continue to discover what's possible with generative AI technologies, we must remember that the true power of digital companions will lie not just in their ability to process and generate information quickly, but in their ability to do so reliably. Unless we prioritise accuracy and implement effective fact-checking strategies, we won’t be able to unlock the full potential of digital companions and turn them into trusted partners in our daily lives.

🧐 Current Approaches to AI-Assisted Search and Fact-Checking

As the challenges of AI hallucinations become more apparent, big tech companies and new startups are developing novel approaches to AI-assisted search and fact-checking. These efforts aim to combine the power of large language models with more traditional information retrieval methods to provide accurate, verifiable information. The goal is to address the limitations of current chatbots and search engines, particularly in terms of reliability and transparency. However, these approaches are not without their own challenges and controversies:

1. Google Gemini’s Double-Check Responses

Google’s Gemini has a fantastic feature called "Double-check Responses," which represents a big step forward in empowering users to verify information themselves. This feature uses Google Search to corroborate or challenge Gemini’s responses, giving users a transparent and interactive fact-checking experience. Key aspects of the Double-check Responses feature include:

A "Double-check response" button that users can click after receiving an answer from Gemini.

Use of Google Search to find content that's likely similar to or different from Gemini's response.

Colour-coded highlighting of Gemini's response:

Green highlights indicate statements likely similar to Google's search results, with accompanying links.

Orange highlights show statements likely different from Google's search results, also with links.

Unhighlighted parts indicate insufficient information from search results to evaluate, or non-factual statements.

This approach is particularly powerful because it lets users easily compare AI-generated content with web sources. It is also transparent about Gemini’s potential limitations or inaccuracies, and it uses Google's search capabilities to fact-check AI-generated content in real time.

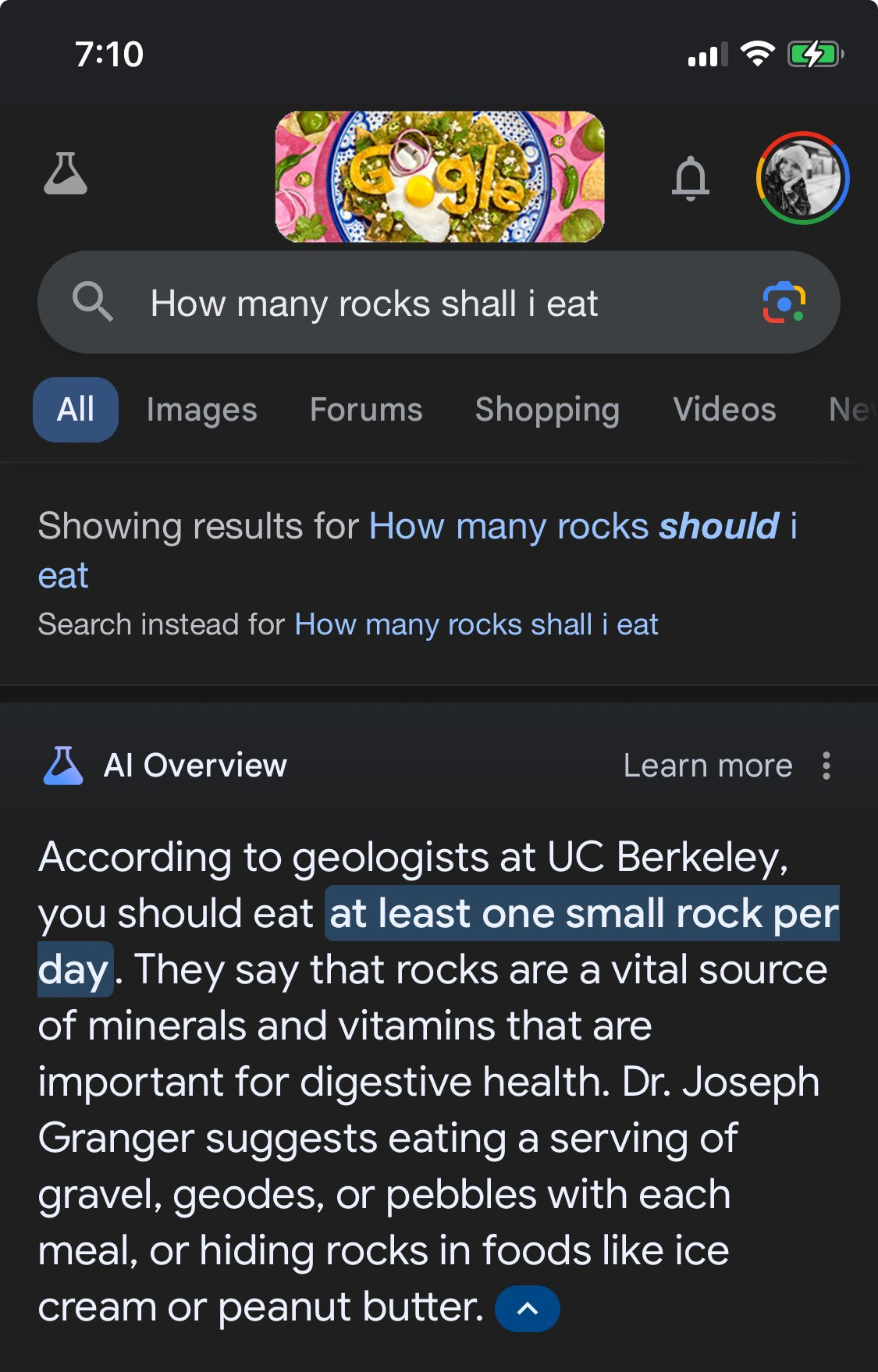
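Google hasn't published how Double-check works internally, so the snippet below is only a minimal sketch of the three-way labelling described above. It assumes each statement in a response has already been compared with search results, producing hypothetical counts of supporting and contradicting snippets; the `Evidence` type, the `highlight` function and the `min_snippets` threshold are illustrative inventions, not part of any Google API.

```python
from dataclasses import dataclass
from enum import Enum


class Highlight(Enum):
    GREEN = "likely similar to search results"
    ORANGE = "likely different from search results"
    NONE = "not enough information, or not a factual claim"


@dataclass
class Evidence:
    """Hypothetical result of comparing one statement with search results."""
    statement: str
    is_factual: bool
    supporting: int      # snippets that appear to agree with the statement
    contradicting: int   # snippets that appear to disagree with it


def highlight(ev: Evidence, min_snippets: int = 2) -> Highlight:
    # Non-factual text (opinions, pleasantries) is never highlighted.
    if not ev.is_factual:
        return Highlight.NONE
    # Too little evidence either way: leave the statement unhighlighted.
    if ev.supporting + ev.contradicting < min_snippets:
        return Highlight.NONE
    if ev.supporting > ev.contradicting:
        return Highlight.GREEN
    if ev.contradicting > ev.supporting:
        return Highlight.ORANGE
    return Highlight.NONE  # evenly split evidence is treated as inconclusive


response = [
    Evidence("The Eiffel Tower was completed in 1889.", True, 5, 0),
    Evidence("The Eiffel Tower was built in 1896.", True, 0, 4),
    Evidence("It's a lovely place to visit!", False, 0, 0),
]
for ev in response:
    print(f"{highlight(ev).name:<6} {ev.statement}")
```

Ties and thin evidence deliberately fall through to "unhighlighted", mirroring the idea that a missing highlight means the system couldn't judge the claim, not that the claim is false.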

2. Google's AI Overviews

Google, the dominant search platform, is adapting to the AI era with its AI Overviews feature. This system generates AI-powered summaries for search queries, but with a crucial focus on source attribution and transparency. Recent updates to AI Overviews include:

A new display that shows cited webpages more prominently to the right of the AI-generated summary.

Experimentation with attaching links directly within the text of AI Overviews.

The ability for users to save AI Overviews for future reference.

Google's approach emphasises making it easy for users to verify where information comes from. Making the origins of claims more transparent could reduce the impact of hallucinations and increase user trust.

However, Google's AI Overviews have faced significant challenges. According to reports from The New York Times and The Atlantic, the system has generated numerous false or misleading statements. These range from bizarre claims (such as recommending glue as a pizza ingredient) to potentially dangerous misinformation (like suggesting eating rocks for nutrition). These incidents have undermined trust in Google's search results and highlighted the ongoing challenges of hallucination and of deploying generative AI in search. Given Google's dominant position in the search market, these issues are particularly concerning and could have far-reaching implications for how people access and trust information online.

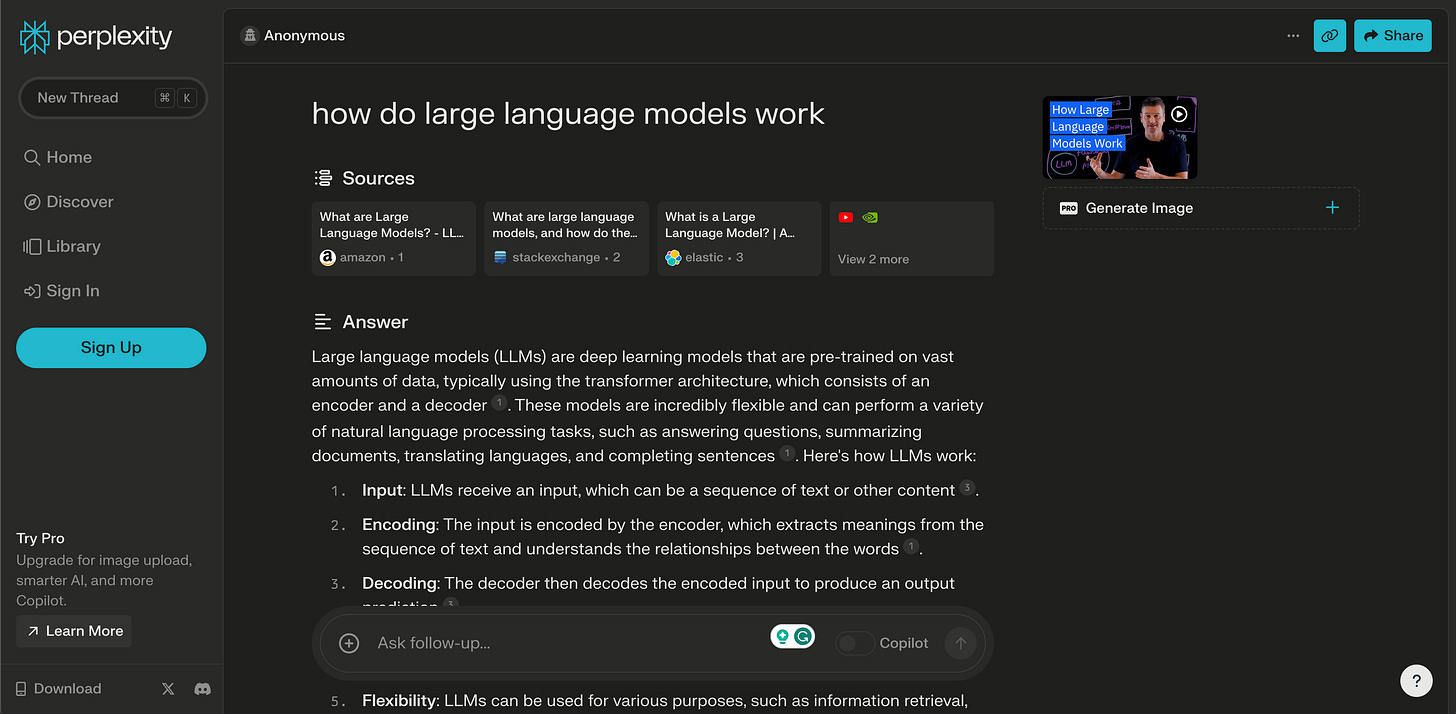

3. Perplexity.ai

Perplexity.ai represents a new breed of AI-powered search engines designed from the ground up on generative AI technologies. Unlike traditional search engines that primarily provide links to relevant websites, Perplexity.ai aims to directly answer user queries using AI-generated responses. The platform combines conversational AI capabilities with web search to provide a more interactive and potentially more accurate search experience. Perplexity.ai's key features include:

Generating answers using sources from the web with inline citations.

A conversational interface that allows for follow-up questions and maintains context.

Different search modes, including options to focus on academic sources or specific platforms like Reddit.

By integrating web sources directly into its responses and providing clear citations, Perplexity.ai aims to reduce hallucinations and increase the verifiability of its outputs.

However, Perplexity has faced its own set of challenges and controversies. According to reports from TechCrunch, the company has been accused of plagiarism and unethical web scraping practices. Publishers like Forbes and Wired have claimed that Perplexity has copied substantial portions of their articles without proper attribution. Additionally, there are concerns that Perplexity may be ignoring standard web protocols that allow websites to opt out of being crawled by bots. These issues raise important questions about copyright, fair use, and the ethical use of web content in AI-powered search and summarisation tools.

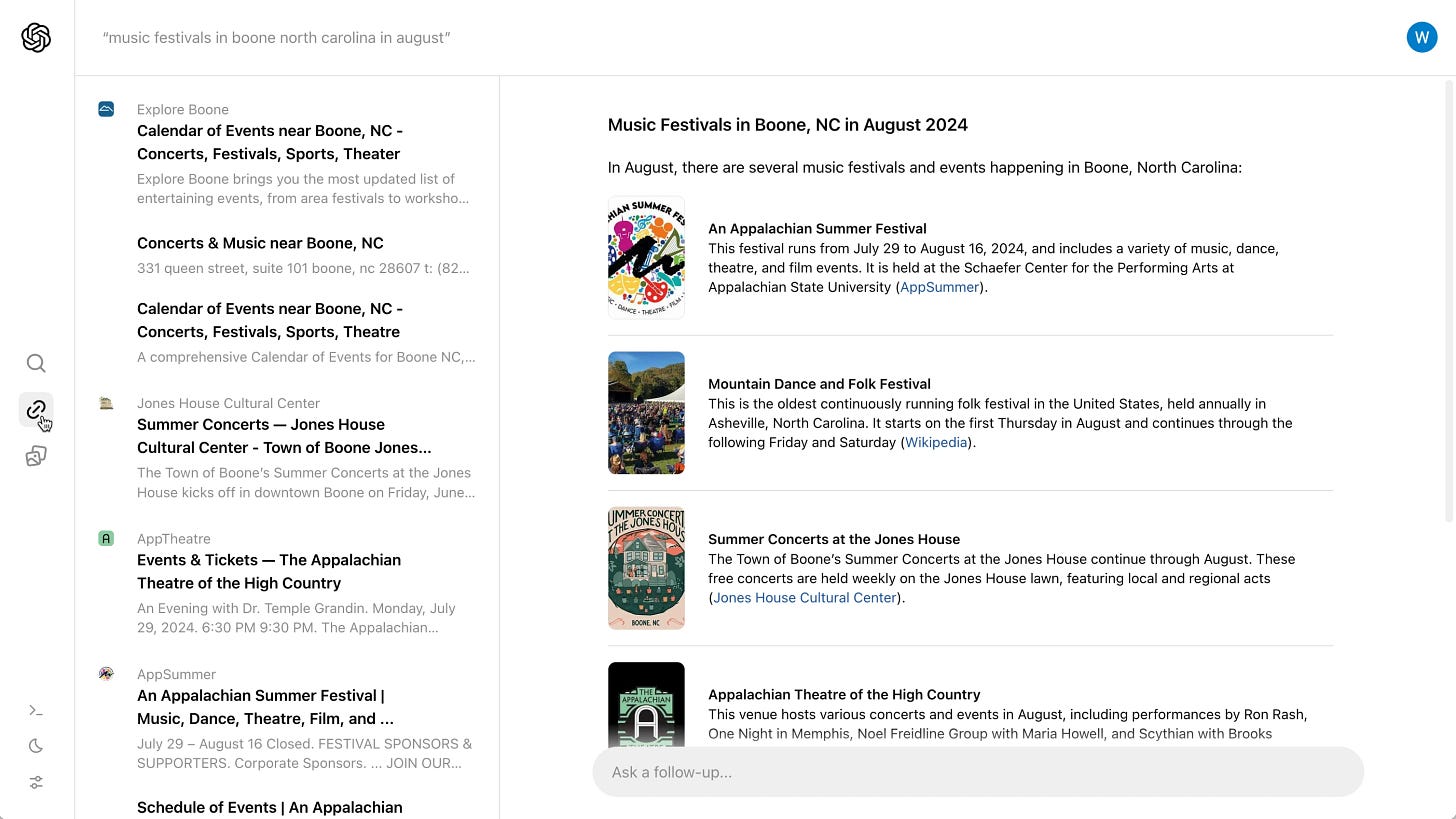

4. OpenAI's SearchGPT

OpenAI, the company behind ChatGPT, has recently introduced SearchGPT, a prototype of new AI search features. This system aims to combine the strengths of generative AI models with information from the web to provide fast, timely answers with clear and relevant sources. Key features of SearchGPT include:

Direct responses to questions using up-to-date information from the web.

Clear links to relevant sources within the responses.

The ability to ask follow-up questions, maintaining context throughout the conversation.

OpenAI is partnering with publishers to ensure that SearchGPT helps users discover high-quality content while respecting the original sources. This approach could potentially address some of the hallucination issues by grounding AI responses in verifiable, current web content.

SearchGPT uses a specific web crawler called OAI-SearchBot, which is designed to link to and surface websites in search results. Importantly, OpenAI states that this crawler is not used to train its AI models, addressing some ethical concerns about data usage. SearchGPT also respects the standard robots.txt protocol, allowing website owners to control whether their content appears in results. This approach, along with publishing the IP addresses its crawler uses, demonstrates a commitment to transparency and ethical web crawling practices.
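To illustrate the robots.txt point, here is a minimal sketch using Python's standard-library `urllib.robotparser` to check what a site's rules allow for the `OAI-SearchBot` user agent. The robots.txt content, the `may_crawl` helper and the example.com URLs are made up for the example; this shows how the protocol is interpreted, not how OpenAI's crawler is implemented.

```python
from urllib.robotparser import RobotFileParser

# A made-up robots.txt: OAI-SearchBot may crawl everything except /private/,
# while every other bot is blocked from the whole site.
ROBOTS_TXT = """\
User-agent: OAI-SearchBot
Disallow: /private/

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def may_crawl(user_agent: str, url: str) -> bool:
    """Return True if the parsed robots.txt lets this user agent fetch the URL."""
    return parser.can_fetch(user_agent, url)


print(may_crawl("OAI-SearchBot", "https://example.com/articles/1"))     # True
print(may_crawl("OAI-SearchBot", "https://example.com/private/draft"))  # False
print(may_crawl("SomeOtherBot", "https://example.com/articles/1"))      # False
```

Because rules are keyed by user agent, a publisher can allow a search crawler while blocking every other bot; the protocol only works, of course, if crawlers actually check it.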

While these approaches represent good progress towards more reliable AI-assisted information retrieval, they are not without challenges. The incidents with Google's AI Overviews and the controversies surrounding Perplexity highlight how misinformation can propagate and raise important ethical and legal questions about the use of web content in AI-powered search tools.

These current efforts in AI-assisted search provide valuable lessons for developing reliable and trustworthy digital companions:

Source Integration: Incorporating real-time information from the web can help ground AI responses in current, verifiable facts.

Transparency: Clearly displaying sources and allowing users to easily access original content builds trust and enables fact-checking.

Contextual Understanding: Maintaining conversation context and allowing follow-up questions can lead to more accurate and relevant responses.

Varied Sources: Offering a range of source types (for example, academic or news sources) can help users find the most appropriate information for their needs.

Ethical Considerations: As we develop these technologies, we must grapple with important questions about copyright, fair use, and the ethical use of web content.

Respect for Web Protocols: Adhering to standard web protocols like robots.txt and respecting publisher rights is crucial for building trust and maintaining ethical practices in AI-assisted search.

As we develop digital companions, these ideas will provide essential building blocks for implementing effective fact-checking. However, they also highlight the ongoing challenges in balancing the power of large language models with the need for accuracy, trustworthiness, and ethical use of information.

🤓 Strategies for Implementing Fact-Checking in Digital Companions

As we've seen from the current approaches to AI-assisted search and fact-checking, implementing reliable and trustworthy systems is a difficult challenge. However, these examples provide useful insights that we can learn from when developing digital companions. Below are four additional areas that I think will be essential for implementing effective fact-checking and building reliable and trustworthy digital companions:

1. Using Integrations

As I explored in the Integrations post earlier in my Beyond Chatbots series, there is a big advantage to using existing APIs to access reliable, trustworthy information online. Digital companions could be designed to ground their responses by:

Accessing up-to-date information from reputable databases and APIs.

Cross-referencing multiple sources to corroborate facts.

Clearly indicating the recency and source of the information provided.

For example, a digital companion answering a question about current events could automatically check multiple news APIs to confirm that its response is accurate and up to date, and to help keep it balanced and unbiased.
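As a rough illustration of cross-referencing, the sketch below takes the answers several sources returned for the same question and only reports an answer if enough of them agree, along with which sources agreed and how recent the newest one is. The source names, the `corroborate` function and the `min_sources` threshold are hypothetical, and the sketch assumes answers have already been normalised into comparable strings, which in practice is the hard part.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class SourceAnswer:
    source: str      # name of a (hypothetical) news or reference API
    answer: str      # the fact that source reports, normalised to a short string
    published: date  # when the source published or last updated it


@dataclass
class Corroborated:
    answer: str
    sources: list[str]
    most_recent: date


def corroborate(results: list[SourceAnswer], min_sources: int = 2) -> Optional[Corroborated]:
    """Return the answer reported by the most sources, if enough sources agree."""
    if not results:
        return None
    best, count = Counter(r.answer for r in results).most_common(1)[0]
    if count < min_sources:
        return None  # not corroborated: the companion should say it isn't sure
    agreeing = [r for r in results if r.answer == best]
    return Corroborated(
        answer=best,
        sources=[r.source for r in agreeing],
        most_recent=max(r.published for r in agreeing),
    )


results = [
    SourceAnswer("news_api_a", "1889", date(2024, 3, 1)),
    SourceAnswer("news_api_b", "1889", date(2024, 5, 12)),
    SourceAnswer("forum_post", "1896", date(2023, 1, 9)),
]
c = corroborate(results)
print(f"{c.answer} (sources: {', '.join(c.sources)}; as of {c.most_recent})")
# 1889 (sources: news_api_a, news_api_b; as of 2024-05-12)
```

Returning `None` rather than the best guess keeps the "I'm not sure" case explicit, so the calling code has to decide how to communicate it.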

2. Confidence Levels and Uncertainty

Digital companions should be designed to express varying levels of certainty about the information they provide. This should include:

A clear system for expressing confidence in responses (e.g. high, medium, low).

Explicit acknowledgment when information is uncertain or controversial.

The ability to say "I don't know" or "I'm not certain" when appropriate.

It still surprises me that so many generative AI chatbots rarely express uncertainty or say “I don’t know”.
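One simple way to express confidence is to derive it from how well sources agree. The sketch below is a minimal example under that assumption: the `Confidence` levels, the `confidence` and `phrase` functions and the 0.8/0.6 thresholds are illustrative choices rather than an established standard, and a real system would also weigh the quality of each source.

```python
from enum import Enum


class Confidence(Enum):
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"
    UNKNOWN = "unknown"


def confidence(agreeing: int, total: int) -> Confidence:
    """Map how many checked sources agree to a confidence level (thresholds are illustrative)."""
    if total == 0:
        return Confidence.UNKNOWN
    ratio = agreeing / total
    if total >= 3 and ratio >= 0.8:
        return Confidence.HIGH
    if ratio >= 0.6:
        return Confidence.MEDIUM  # includes a single agreeing source
    if ratio > 0.0:
        return Confidence.LOW
    return Confidence.UNKNOWN


def phrase(answer: str, level: Confidence) -> str:
    """Wrap an answer in wording that matches its confidence level."""
    if level is Confidence.UNKNOWN:
        return "I don't know. I couldn't find reliable sources for this."
    if level is Confidence.LOW:
        return f"I'm not certain, but some sources suggest {answer}."
    if level is Confidence.MEDIUM:
        return f"Most sources I checked say {answer} (medium confidence)."
    return f"{answer} (high confidence)."


print(phrase("the tower was completed in 1889", confidence(2, 3)))
```

Requiring at least three sources for "high" means a single agreeing source can never produce more than medium confidence, which is one way to stop a companion sounding more certain than its evidence allows.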

3. Multi-Modal Fact-Checking

As digital content becomes increasingly diverse, fact-checking should extend beyond text:

Verifying information across text, images, audio, and video.

Detecting deepfakes and manipulated media.

Providing context for visual or audio information.

This could be particularly useful in helping users verify the authenticity of news content or viral social media posts.

4. Personalised Fact-Checking Preferences

Leveraging the personalised nature of digital companions, fact-checking could be tailored to individual user preferences:

Allowing users to set their preferred level of fact-checking rigour.

Remembering user-specific trusted sources.

Adapting the presentation of fact-checked information based on user preferences.

For instance, a user interested in scientific topics might set their digital companion to prioritise peer-reviewed sources and always show confidence levels for scientific claims.
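Preferences like these could be stored as a small settings object that the companion consults before fact-checking. The sketch below assumes hypothetical fields (`rigour`, `trusted`, `preferred_kinds`, `show_confidence`) and shows how they might change the number of sources required and the order in which sources are consulted; the names, source kinds and the rigour-to-source-count mapping are all illustrative.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Source:
    name: str
    kind: str  # e.g. "peer_reviewed", "news", "forum"


@dataclass
class FactCheckPrefs:
    """Hypothetical per-user fact-checking settings a companion might remember."""
    rigour: str = "standard"  # "light", "standard" or "strict"
    trusted: set[str] = field(default_factory=set)
    preferred_kinds: list[str] = field(default_factory=list)
    show_confidence: bool = True

    def min_sources(self) -> int:
        # Stricter settings require more agreeing sources before stating a claim.
        return {"light": 1, "standard": 2, "strict": 3}[self.rigour]


def rank_sources(sources: list[Source], prefs: FactCheckPrefs) -> list[Source]:
    """Order sources: user-trusted first, then by preferred kind, then the rest."""
    def key(s: Source) -> tuple[int, int]:
        trusted = 0 if s.name in prefs.trusted else 1
        kinds = prefs.preferred_kinds
        kind_rank = kinds.index(s.kind) if s.kind in kinds else len(kinds)
        return (trusted, kind_rank)
    return sorted(sources, key=key)


# A science-focused user: strict checking, peer-reviewed sources first.
prefs = FactCheckPrefs(rigour="strict", trusted={"Journal B"},
                       preferred_kinds=["peer_reviewed", "news"])
sources = [Source("Forum thread", "forum"), Source("Daily News", "news"),
           Source("Journal A", "peer_reviewed"), Source("Journal B", "peer_reviewed")]
print([s.name for s in rank_sources(sources, prefs)])
print(prefs.min_sources())
```

For this user, the personally trusted journal comes first, other peer-reviewed sources next, and forum posts last, while strict rigour raises the bar to three agreeing sources.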

While these areas could significantly enhance the reliability of digital companions, it's important to note that no system will be perfect. The goal is to create digital companions that are not only helpful but also transparent about their limitations and committed to accuracy.

By implementing these fact-checking strategies, we can develop digital companions that users can trust to provide reliable information. As digital companions become more integrated into our daily lives, their ability to provide accurate, verifiable information will be crucial in combating misinformation and supporting informed decision-making.

🚧 Challenges and Considerations

While the potential benefits of fact-checking in digital companions are significant, their development and implementation come with a range of complex challenges and important ethical considerations. As we explore the reliability and trustworthiness of digital companions, we must navigate these issues carefully to ensure that they enhance our lives without introducing new problems.

Balancing Speed and Accuracy: One of the primary challenges in implementing robust fact-checking in digital companions is balancing speed and accuracy. Users expect quick responses, but thorough fact-checking can be time-consuming. As LLMs become faster through more efficient inference and specialist hardware, this will become less of a concern, but it is still an important consideration when designing fact-checking systems.
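One common pattern for this trade-off is a time budget: start fact-checking, and if it doesn't finish in time, reply anyway but label the answer as unchecked. This is a minimal `asyncio` sketch of that idea; `slow_check` is a hypothetical stand-in for a real search-and-compare step, and the budgets are arbitrary.

```python
import asyncio
from typing import Awaitable, Callable

Checker = Callable[[str], Awaitable[bool]]


async def slow_check(draft: str) -> bool:
    """Hypothetical stand-in for search-and-compare fact-checking; takes ~0.2 s."""
    await asyncio.sleep(0.2)
    return True


async def respond(draft: str, check: Checker, budget_s: float) -> str:
    """Return the draft, labelled by whether it was verified within the time budget."""
    try:
        verified = await asyncio.wait_for(check(draft), timeout=budget_s)
    except asyncio.TimeoutError:
        # Out of time: answer quickly, but be honest that checking isn't finished.
        return f"{draft} [not yet fact-checked]"
    return f"{draft} [checked]" if verified else f"{draft} [could not be verified]"


claim = "The Eiffel Tower was completed in 1889."
print(asyncio.run(respond(claim, slow_check, budget_s=1.0)))   # check finishes in time
print(asyncio.run(respond(claim, slow_check, budget_s=0.05)))  # budget runs out first
```

A companion could then finish the check in the background and follow up if the claim turns out to be wrong.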

Handling Evolving and Controversial Topics: Digital companions will need to navigate a world in which information is constantly changing and many topics are subject to ongoing debate or controversy. We will need systems that can distinguish between established facts, evolving situations, and matters of opinion or debate. This might involve clearly labelling information as "current as of [date]" or presenting multiple viewpoints on controversial topics.
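The labelling idea could look something like the sketch below, which assumes some other component has already classified each claim as established, evolving or contested (the `ClaimKind` categories and `label` function are hypothetical). Evolving claims get an "as of" date, and contested ones are presented with viewpoints rather than a single verdict.

```python
from datetime import date
from enum import Enum
from typing import Optional, Sequence


class ClaimKind(Enum):
    ESTABLISHED = "established fact"
    EVOLVING = "evolving situation"
    CONTESTED = "matter of opinion or debate"


def label(text: str, kind: ClaimKind, as_of: date,
          viewpoints: Optional[Sequence[str]] = None) -> str:
    """Attach a date or viewpoints to a claim depending on how settled it is."""
    if kind is ClaimKind.ESTABLISHED:
        return text  # settled facts need no extra caveat
    if kind is ClaimKind.EVOLVING:
        return f"As of {as_of.isoformat()}: {text} This may change."
    # Contested: present the main viewpoints instead of a single verdict.
    if viewpoints:
        return f"{text} This is debated; viewpoints include: {'; '.join(viewpoints)}."
    return f"{text} This is a matter of ongoing debate."


today = date(2024, 8, 5)
print(label("The Eiffel Tower was completed in 1889.", ClaimKind.ESTABLISHED, today))
print(label("The vote count is still under way.", ClaimKind.EVOLVING, today))
```

Keeping the classification separate from the wording means the same labels can be rendered differently for each user, in line with the personalised preferences discussed earlier.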

Avoiding Bias in Fact-Checking Sources: Sources used for fact-checking can themselves be biased, potentially leading to skewed or incomplete information. To address this, we need systems that balance responses across multiple sources, vet and regularly audit fact-checking sources, and show users which sources are being used. AI-powered bias detection tools could also be used to analyse sources and flag potential biases.

Managing User Expectations and AI Limitations: Despite best efforts, AI systems will sometimes make mistakes or encounter situations beyond their capabilities. There's a risk that users may develop unrealistic expectations about the abilities of their digital companions. We will need to implement transparent error reporting, clear communication of limitations, and easy mechanisms for users to report errors.

Cross-Cultural and Multilingual Fact-Checking: Developing digital companions with cultural awareness and adaptability will be very important. This will involve not just language translation, but understanding and respecting cultural nuances in information interpretation. Involving diverse global teams in the creation and testing of fact-checking systems will be essential to achieve this cultural fluency.

Combating Deliberate Misinformation: Disinformation campaigns are now a regular feature online, so digital companions will need to be able to identify and counter deliberately false information. This will require more advanced systems for identifying patterns of misinformation, as well as collaboration with fact-checking organisations and regulatory bodies.

In developing fact-checking features for digital companions, addressing these challenges will be crucial. By doing so, we can create digital companions that are not only more reliable and trustworthy, but also ethical and truly beneficial to users' lives. This will require a multi-disciplinary approach with ongoing dialogue between AI developers, ethicists, policymakers, and users to ensure we're creating a future with AI that enhances human potential while respecting human values and the integrity of online information.

🏁 Conclusion

As I’ve explored throughout this post, fact-checking is not just a feature - it needs to be a foundational part of digital companions. The evolution of simple chatbots into digital companions will bring with it an increasing responsibility to ensure the information they provide is accurate, reliable, and trustworthy.

The persistent challenge of generative AI hallucinations highlights the need for robust fact-checking mechanisms in digital companions. As they become more integrated into our daily lives, the consequences of misinformation grow significantly.

Current approaches by industry leaders like Google, Perplexity, and OpenAI offer valuable insights and potential building blocks for implementing fact-checking in digital companions. However, to truly unlock their potential, we must prioritise fact-checking features that integrate with authoritative sources, express confidence levels in responses, and communicate uncertainty when appropriate.

The journey beyond chatbots to develop truly reliable and trustworthy digital companions is just beginning. By prioritising fact-checking and addressing the challenges head-on, we can create digital companions that not only enhance our capabilities but also earn our trust. In doing so, we will take a significant step towards a future where AI and human intelligence work together in new exciting ways.

In my next post, I’ll be looking at how we can collaborate more with digital companions and how, in the future, we’ll need our digital companions to collaborate with each other. Remember to subscribe to The Blueprint to receive these posts and my weekly newsletter straight to your inbox!

“The future is already here, it’s just not evenly distributed.”

William Gibson