A week in Generative AI: Operator, Stargate & DeepSeek

News for the week ending 26th January 2025

Big week! We had the anticipated launch of Operator, OpenAI’s first AI agent; the announcement of the $500bn Project Stargate on day two of the new presidential term; and a hugely significant open source release from the Chinese company DeepSeek, whose R1 model rivals OpenAI’s o1 at a fraction of the cost.

In Ethics News, President Biden’s executive order on AI was revoked by the incoming administration, and the Pentagon said that AI was ‘speeding up its kill chain’, which sounds horrendous.

In Long Reads, I highly recommend taking a look at Wired’s article on DeepSeek and John Gruber’s article on how dumb Siri currently is.

OpenAI launches Operator, an agent that performs tasks autonomously

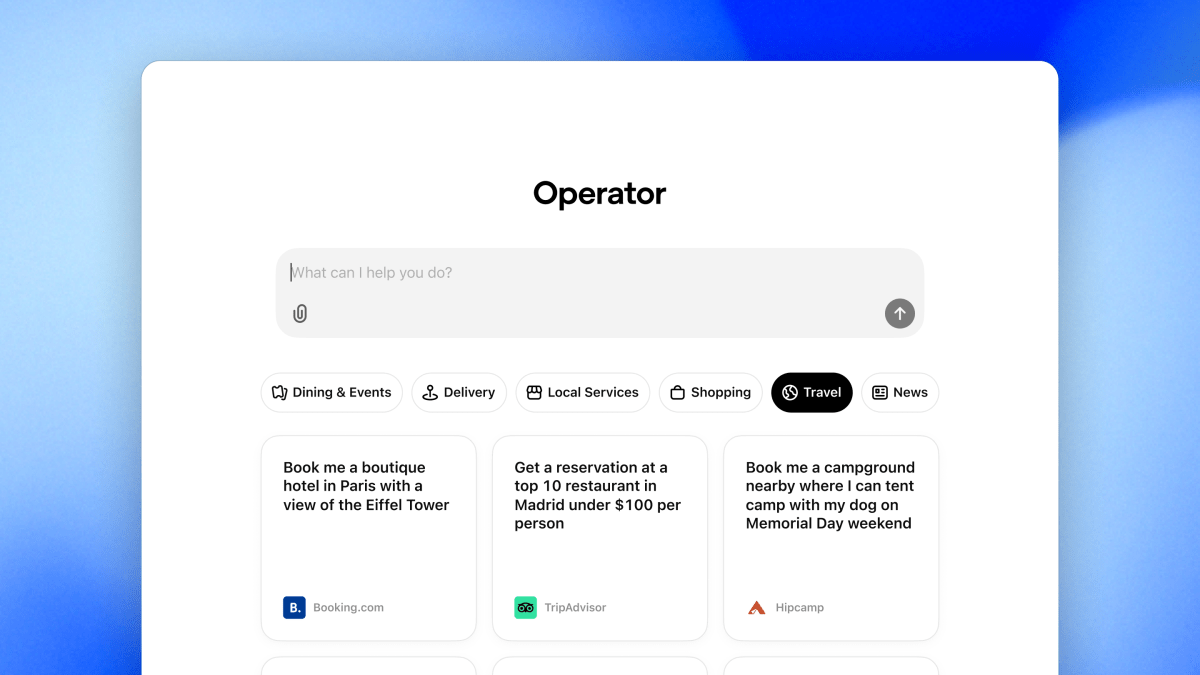

As had been rumoured for a while, OpenAI took the wraps off their first foray into AI agents this week by announcing Operator.

Operator is what OpenAI calls a ‘Computer-Using Agent’ (CUA). It combines GPT-4o’s vision capabilities with the kind of advanced reasoning seen in models like o1 and o3, allowing it to interact with the graphical user interfaces (GUIs) on a screen just as a human would. You can see a demo of Operator in action here.

Operator is currently a research preview and only available to Pro users ($200 per month) in the US, so I haven’t had a chance to test it myself yet. However, the broad consensus I’ve seen is that it is a much more polished version of Anthropic’s Computer Use, which was announced back in October.

Conceptually, this type of approach is a great idea, and whilst these general-purpose agents can perform a wide variety of tasks (e.g. downloading files, combining PDFs, processing email attachments), they are not yet reliable enough. Based on some of the benchmarks shared, Operator succeeds around 40-60% of the time, compared with around 80% for humans. I suspect agents will need to reach 90%+ reliability (i.e. better than human) before people begin trusting them to perform tasks on their behalf. Having said that, given the progress OpenAI made between the o1 and o3 models, I expect we will see 90%+ reliability within the next 12-18 months.

One other thing worth mentioning is that in the EU, as the laws stand right now, AI agents like Operator will not lawfully be able to help consumers with anything relating to their bank accounts. I’ve written previously about how companies like Stripe are building out the technology to allow AI agents to manage payments, which I think will be a big use case for the technology once it becomes reliable enough.

However, Sam Altman helpfully shared this tweet, which gives a potted history of how Open Banking came about in the EU and some of the sacrifices made along the way. TL;DR: digital assistants (like Operator) will only be allowed to interact with banking systems in the EU via Open Banking APIs, which is not how they’re currently designed to work.

Project Stargate: a $500bn AI data center company

Rumours about Project Stargate were floating around for most of last year, and I first wrote about it back in April, when it was described as a $100bn project led by OpenAI and Microsoft. Things seem to have moved on a lot since then, with more investors (including SoftBank, Oracle and MGX) coming on board, meaning that Microsoft will no longer be OpenAI’s exclusive cloud provider.

However, the basic premise of the project hasn’t changed - invest a huge amount of capital in AI infrastructure (data centres, power generation etc.) so that the US can take a strategic lead in developing artificial general intelligence.

The Trump administration sees this project as a big proof point for how it will bring more investment to the US and create more jobs, which is why such a splashy announcement was made on just day two of the new presidential term.

Thankfully, reports say that Stargate will use solar and batteries for power, which is surprising given the Trump administration’s involvement in the project. We’ll just have to see how the project progresses over the next 4 years and how much of an environmental impact it has.

Cutting-edge Chinese “reasoning” model rivals OpenAI o1—and it’s free to download

DeepSeek is an interesting Chinese AI company that was spun out of a hedge fund (High-Flyer) and has been building some of the most cutting-edge open source AI models over the last couple of years.

There have been lots of sensationalist news stories this week about the launch of their DeepSeek R1 model, which is open source, matches OpenAI’s o1 in capabilities and was trained at a much lower cost. There’s a good long read from Wired about them here.

Without veering towards the sensationalist, what DeepSeek have achieved is incredibly impressive and challenges a few assumptions that the predominantly US-based AI industry has made:

Firstly, you don’t need access to cutting-edge AI hardware to build cutting-edge AI models. Around 18 months ago, the US ordered an immediate halt to the export of the most powerful AI chips to China in an attempt to protect its lead in AI. However, this has simply forced Chinese AI companies like DeepSeek to innovate and find ways to get more out of older AI hardware.

Secondly, there is ‘no moat’ in AI. Early last year it looked like the Open Source community was about 12-18 months behind the frontier AI companies. With the release of Meta’s Llama 3.1 in the summer, that gap looked more like 6 months. Now, with the release of DeepSeek R1, it looks closer to 3 months. You can probably see where I’m going with this… If Open Source AI models have similar capabilities to state-of-the-art frontier models, the technology will quickly become commoditised, and it could be difficult to recoup the trillions of dollars that have been invested in the frontier AI companies.

Lastly, the most capable AI models don’t need to be expensive. With OpenAI launching a $200 Pro subscription tier late last year, it was starting to look like cutting-edge AI models would only be available to those who could afford them, which could set a very dangerous precedent. DeepSeek’s R1 is 98% cheaper to use than OpenAI’s o1, which shows that it is possible to build frontier models that are cheaper (and more environmentally friendly) not only to train but also to use. This is great for everyone.

Inside Anthropic's Race to Build a Smarter Claude and Human-Level AI

Dario Amodei sat down with the Wall Street Journal’s Joanna Stern at Davos this week to discuss the $2bn of additional funding Anthropic is raising to help meet the surge in demand it has seen for its services over the last year, and particularly over the last 3 months.

As a heavy Claude user (it’s my go-to model for most things), this is music to my ears, as I’ve been consistently running into usage limits recently. It was also great to hear what’s coming from Anthropic this year: smarter models, web integration, a two-way voice mode and memory. To be honest, this is everything that’s been on my wish list for a while now, so very exciting!

I’m a great admirer of Anthropic and the way they go about business - they’re thoughtful and deliberate in how they release new capabilities/features and this usually results in much higher quality models that I find much nicer to use.

Perplexity has a busy week

It’s been a busy week for Perplexity who launched an API for AI search, launched an assistant for Android, and made a bid to merge with TikTok in the US (although, who didn’t?!).

Perplexity took on new funding at the end of last year at a $9bn valuation, and also started running advertising on its platform in the US. It’s interesting to see where they are headed with these new announcements, especially the API for AI search, which looks to address the hallucination issues common to other large language models.

Perplexity’s API, Sonar, lets developers customise which sources (websites) are used to answer user queries, and Perplexity claims that its Pro tier outperforms leading models from Google, OpenAI and Anthropic on SimpleQA, a benchmark that measures the factual correctness of AI chatbot answers.

Factual correctness and reliability are two sides of the same coin, and I think they are currently the biggest barrier to frontier AI models having a bigger impact in the real world, so it’s great to see more progress being made on this front.

AI Ethics News

AI benchmarking organization criticized for waiting to disclose funding from OpenAI

Paul McCartney calls on UK government to protect artists from AI

Samsung’s Galaxy S25 will support Content Credentials to identify AI-generated images

UK to unveil ‘Humphrey’ assistant for civil servants with other AI plans to cut bureaucracy

Long Reads

World Economic Forum at Davos - Debating Technology

Wired - How Chinese AI Startup DeepSeek Made a Model that Rivals OpenAI

Daring Fireball - Siri is super dumb and getting dumber

Demis Hassabis - Path to AGI

“The future is already here, it’s just not evenly distributed.”

William Gibson

Do you think the US will ban the new Chinese AI?