Another big week in AI, following last week’s big announcements from OpenAI and Anthropic. The pace at which OpenAI are currently shipping product is astounding, and it’s difficult to see how their rivals can keep up with them right now!

This week we had OpenAI’s third annual DevDay, where they announced Apps in ChatGPT and Agent Builder, alongside lots of other developer-focused improvements. There was also the release of the annual State of AI Report for 2025 by Nathan Benaich, which has become a bit of a staple in the rhythm of the year. Lastly, Figure announced their third-generation humanoid robot, which has a great demo, but there is still a long way to go before these robots are let loose in the world.

In Web 4.0 news, OpenAI shared that they now have 800m users per week and Sora hit 1m downloads in its first week alone, faster than ChatGPT’s first 1m users. Adobe also predicts we’ll see a 520% growth in AI-assisted online shopping this holiday season.

In Ethics news, there is a good article on the copyright challenges around Sora, OpenAI shared how they are approaching political bias in their models, and the Bank of England warned of the growing risk that the AI bubble could burst.

I highly recommend Stratechery’s Long Read on OpenAI’s Windows Play this week too.

OpenAI DevDay

On Monday OpenAI had their third annual DevDay. There are always two interesting sides to things like DevDay: what a company announces, and the narrative they share around the announcements, which tells you more about their long-term strategy.

Let’s start with the announcements where there were two major new releases as well as lots of new models that are now available in the API for developers (Sora 2, GPT-5 Pro, GPT Realtime, and Image Mini).

Apps in ChatGPT

For me this is the bigger of the two announcements: for the first time since ChatGPT launched, I can start to see how the empty chat interface can become something much richer, more interactive, and more useful. This isn’t available to users in the EU or UK yet, so I haven’t had a chance to try them out, but there are now apps inside ChatGPT available from Booking.com, Canva, Coursera, Figma, Expedia, Spotify and Zillow. OpenAI also released an Apps SDK so developers can start building and testing their own apps, so I expect the number and variety of apps available to grow quickly.

This is the third attempt OpenAI has made to entice developers into building for ChatGPT. It started with plugins, then we had the GPT Store, and now Apps. This now feels like the right approach, but only time will tell if this is the ‘App Store’ moment for ChatGPT.

Agent Builder

Agent Builder is a visual canvas for creating agent workflows and is much more developer and enterprise focused. I don’t expect many consumers to start building their own agents yet, but this is an important feature for enterprises and will be helpful for developers building apps for ChatGPT. To be fair, a visual canvas for building agents isn’t anything new, but this is the first time that we’ve seen this type of product from a frontier AI company, meaning it will be much more tightly integrated with their products.

Reflecting on the two main announcements from DevDay, I suspect that in a year or so the term ‘agent’ won’t really be used by consumers. They’ll refer to ‘apps’ in ChatGPT, and the term ‘agent’ will be used by developers and enterprises to describe the backend infrastructure that powers apps in ChatGPT. ‘Apps’ will be the friendly consumer language, ‘Agents’ will be the technical developer language.

Looking to the Future

As part of all the announcements there was a 1hr keynote opening DevDay and also plenty of interviews that Sam Altman and other OpenAI execs have done with the Press. These for me are always more interesting as they give you a feel for the strategy behind the releases and the longer term vision for where things are heading.

Based on everything shared around DevDay I think it’s safe to say that OpenAI’s vision for ChatGPT (and intelligence in general) is becoming much clearer. They view AI like the transistor, seeping into all technology over time, and ChatGPT like the operating system. This operating system is going to become much more personalised over time and whilst OpenAI will build ‘apps’ (features) for ChatGPT, they’re also expecting a big developer community to build them too.

This is why we saw one big consumer facing feature and one big developer facing feature announced at DevDay - OpenAI want to build better experiences for consumers in ChatGPT and simultaneously provide developers with better tools to also build better experiences for consumers in ChatGPT. Consumers win from both and I think we’ll see a rapid acceleration over the next 12 months in what people can do in/with ChatGPT and the evolution of the basic chat interface that we’ve all been waiting for. Should be an exciting ride!

More coverage of DevDay 2025

Ignorance.ai - Recap: OpenAI DevDay 2025

Stratechery - An Interview with OpenAI CEO Sam Altman About DevDay and the AI Buildout

TechCrunch - OpenAI’s Nick Turley on transforming ChatGPT into an operating system

Google DeepMind release Gemini 2.5 Computer Use

I’m starting to feel a little bit sorry for the Google DeepMind team. They did such a fantastic job in 2024 to catch up with OpenAI’s models, but they’ve had a hard time keeping up with the sheer pace at which OpenAI are shipping features and new products this year.

The release of Gemini 2.5 Computer Use this week really shines a light on the challenge they’re facing. Computer Use in this state was being previewed by both OpenAI and Anthropic earlier in the summer, and I think it’s just bad timing that Google DeepMind have released their take on it in the same week that OpenAI have moved the game on with the release of Apps in ChatGPT and AgentKit at their DevDay.

I’m sure we’ll see the release of Gemini 3 before the end of the year, and with it a whole suite of new capabilities and features, but OpenAI are moving at such a pace right now that it’s a little hard to see how the Google DeepMind team can keep up!

State of AI Report 2025

As has now become an annual tradition, Nathan Benaich released his State of AI Report. I covered 2024’s report on The Blueprint last year, and it’s a great look back at the last 12 months in AI and predictions looking forward over the next 12 months.

This year’s report is a 313 slide deck, but the video above is a great summary from Nathan. The report covers AI research, business impact, regulation, safety, and usage patterns of AI. There’s a good summary of the report in the report’s blog post. The report also has a great section on AI adoption in business based on a survey of 1,200 practitioners.

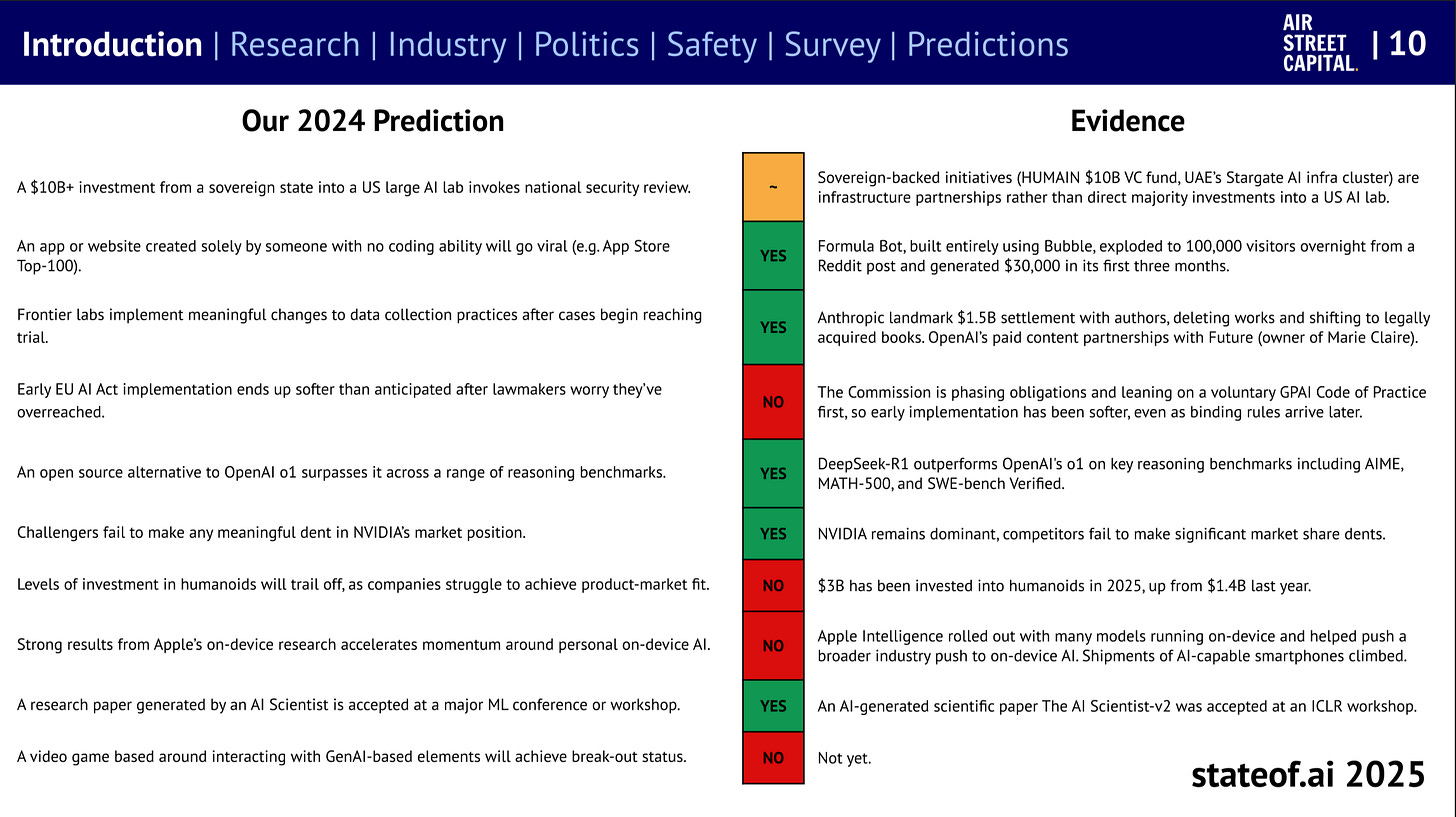

The most interesting part of the report is looking back on how their 2025 predictions fared, which you can see above. Not bad! The report also shares predictions for 2026, as outlined below:

These are some interesting predictions. I don’t agree with #1 as I don’t think we’ll see AI agent advertising spend hit $5B next year when it doesn’t even exist right now. In all honesty, I’m not really sure what it even is! However, it will be interesting to look back at all of this in next year’s report.

Introducing Figure 03

Figure introduced their third generation humanoid robot this week, and the video above is a great demo of how the robot can operate in a home, but also as a concierge, deliverer, factory worker, parcel sorter, and waiter. Figure 03 has a much wider range of movements, is more dexterous, and therefore capable of many more ‘human’ tasks.

It is a purposefully slick demo video, but there’s a really good video essay from Time that includes an interview with Brett Adcock and covers the current shortcomings in more detail. There’s also an article from them here.

What strikes me is that whilst we’re seeing improvements in speed of movement and general all round capabilities of humanoid robots, their movement is still very robotic. There is however a moment in the demo video around the 6:00 mark when Figure 03 makes a very human set of movements when sorting a parcel. It’s hard to describe, but it spins the parcel between its hands and then passes it off to the side - you have to see it to fully understand.

I think there’s something about seeing a humanoid robot move in a very human-like way that I find encouraging. Maybe robotic movements are what’s holding them back, and when they start being able to move more like humans do they’ll be ready for prime time?

Web 4.0

Adobe predicts AI-assisted online shopping to grow 520% during the 2025 US holiday season

Sam Altman says there are no current plans for ads within ChatGPT Pulse — but he’s not ruling it out

Google given special status by watchdog that could force it to change UK search

AI Ethics News

Jeff Bezos Has a Plan to Curb AI’s Carbon Footprint: Send Data Centers to Space

Bank of England warns of growing risk that AI bubble could burst

Do OpenAI’s multibillion-dollar deals mean exuberance has got out of hand?

MrBeast says AI could threaten creators’ livelihoods, calling it ‘scary times’ for the industry

Long Reads

Stratechery - Sora, AI Bicycles, and Meta Disruption

Latent.Space - Developers as the Distribution Layer of AGI

Stratechery - OpenAI’s Windows Play

Simon Willison - Vibe Engineering

“The future is already here, it’s just not evenly distributed.”

William Gibson