A week in Generative AI: Instant Checkout, Sonnet 4.5 & Sora 2

News for the week ending 5th October 2025

It’s felt a bit like we’ve been gearing up for a mad week of AI news for a while, and this week certainly delivered! However, with OpenAI’s annual DevDay tomorrow, I’m sure next week will be jam-packed too.

Deep breath….

For me, the most significant news was also the smallest - OpenAI announcing Instant Checkout in ChatGPT. It’s US only for now, but I think it’ll have the biggest long-term impact of all. Anthropic launched Claude Sonnet 4.5, but again, the smaller announcement is more significant. “Imagine with Claude” creates software on the fly and points to how we’ll create more useful software in the future. Lastly, the big headline grabber this week was Sora 2, which OpenAI launched inside its own social app and which allows people to generate their own likeness, and the likeness of other people, in videos.

In Web 4.0 news, there’s a report on the impact AI platforms are having on independent websites, Opera released its AI-powered browser Neon, and Google rolled out Gemini across its smart home devices.

In Ethics news, California signed its landmark AI safety bill, OpenAI rolled out safety routing and parental controls, and there were reports that Google is blocking AI searches for Trump and dementia.

There’s also a great Long Read from Ethan Mollick about Real AI Agents and Real Work - worth a look if you’re interested in how more capable AI agents are likely to impact knowledge work.

And I couldn’t send this week’s newsletter without mentioning Tilly Norwood. If you haven’t heard about ‘her’ this week, it’s worth checking out.

OpenAI launches ChatGPT: Instant Checkout

In any other normal week, the launch of Anthropic’s “Imagine with Claude” or OpenAI’s Sora 2 would be getting the headlines. But for me, the smaller feature that OpenAI released at the beginning of the week, Instant Checkout, is far more interesting and significant.

Instant Checkout gives US users (it will expand to other markets soon) the ability to purchase products directly inside ChatGPT. This is the third pillar of Web 4.0 - “zero-click” purchasing - that I’ve been writing about a lot this year, and the one I think will have the biggest impact over the long term.

With Instant Checkout, users can now search, research, decide, and purchase a wide range of products, all with the help of ChatGPT. This completely collapses the traditional purchase funnel and drastically reduces the opportunities that marketers have to influence consumer decision making. It also makes purchases even more frictionless than they currently are, which will set OpenAI up well to generate the $1tr of annual sales they aim to process by 2029.

This is also how OpenAI monetises the 90% of users who are on the free tier. They’ll take a cut of every sale processed through Instant Checkout, probably in the 2–3% range. Given the scale of their free user base and the size of the revenue opportunity, I expect them to aggressively expand and enhance Instant Checkout over the coming months. What we’re seeing now is just the start.

What’s easy to miss is that Instant Checkout is a strategic move for OpenAI to take ownership of the entire customer journey. This means that OpenAI, not the brand, holds the data, the relationship, and the insight into how and why the purchase happened. For marketers, this marks the beginning of the Web 4.0 era, where influence shifts from search and social platforms to AI platforms.

Anthropic releases Claude Sonnet 4.5

With OpenAI’s release of GPT-5 in the summer, Anthropic’s Sonnet temporarily lost its crown as the most capable coding model. But now they’re back with Sonnet 4.5 and that crown is theirs again, at least until Google DeepMind releases Gemini 3, which is likely to be announced in the coming weeks.

To illustrate how far Anthropic’s models have come, they released the video above which shows that Sonnet 4.5 can code and run a complete clone of Claude, complete with attachments and artefacts. In another example, it spent 30 hours autonomously coding a chat app, writing 11,000 lines of code. Very impressive!

But it’s not all about coding. If a model can do 30 hours of work autonomously in any domain, that’s a huge step forward. Back in March, according to research by METR, Sonnet 3.7 was completing tasks that were only 15 minutes long on average, with a trend line that pointed towards task length doubling every 7 months. This trend line would put AI models on track to hit the 30-hour mark sometime after 2030, so seeing that in 2025 is incredibly surprising!
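To make the trend-line arithmetic concrete, here is a rough back-of-the-envelope sketch. It uses only the two figures quoted above (a 15-minute starting point and a 7-month doubling time); the function name and the simple exponential model are my own assumptions, not METR's methodology. On these numbers alone, the extrapolation lands roughly four years out from March 2025, so around 2029, somewhat earlier than the estimate in the text. The gap may come down to which METR baseline and success threshold are used. Either way, it is years beyond 2025.

```python
import math


def months_to_reach(start_minutes: float, target_minutes: float,
                    doubling_months: float) -> float:
    """Months of steady exponential growth needed to go from start to target.

    Assumes task length doubles every `doubling_months` months
    (a simplified model, not METR's fitted trend).
    """
    doublings = math.log2(target_minutes / start_minutes)
    return doublings * doubling_months


# Figures taken from the text: 15-minute tasks, 30-hour target, 7-month doubling.
months = months_to_reach(start_minutes=15, target_minutes=30 * 60, doubling_months=7)
years = months / 12

print(f"{months:.1f} months (~{years:.1f} years) of trend growth needed")
```

Going from 15 minutes to 30 hours is a 120x increase, which is just under seven doublings, hence a little over 48 months on a 7-month doubling time.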

Anthropic also released another new feature alongside Sonnet 4.5 - “Imagine with Claude”. This for me is more interesting and exciting - it builds software on the fly to solve any problem you throw at it. It’s essentially software building itself based on what the user needs.

This is a big shift - we’ve been designing software/digital products for years based on what we think users want, with all the features pre-determined. This approach flips that on its head and generates features in the moment based on the context of what the user is trying to do. I think this is how we get to more sophisticated, flexible, and visual chat interfaces for the models themselves, as well as more useful software for users in the future. It’s worth paying attention to how this whole area progresses over the coming years.

OpenAI releases Sora 2 with new social app

The launch of Sora 2, in the US only to start with, was always going to be a big deal, and was always going to raise questions. Coming less than 12 months after the first model, Sora 2 is higher quality, more realistic, and better at following instructions. With that comes greater potential for deepfakes and generated videos that are indistinguishable from reality at first glance.

These issues would have surfaced simply because Sora 2 is a more capable video model. But OpenAI went further. They wrapped the experience in a social-like mobile app that lets people generate their own likeness and lets others generate it too. The launch also raises copyright concerns, with the model able to reproduce popular IP, echoing their earlier approach with image generation.

And I think this is the point. OpenAI are deliberately releasing technology that challenges our assumptions about what’s acceptable for it to do. Their strategy seems to be to push past the boundaries of public comfort, observe the reaction, and only then decide whether to dial things back.

On one hand, I admire this. Technology should challenge our assumptions. But if recent history tells us anything, it’s that we readily adopt technology with unintended consequences that are almost impossible to reverse. With AI moving this fast, there’s no time to pause, reflect, or weigh the societal trade-offs. The problem is that AI is now asking questions we no longer have time to answer.

If you want to dig into this more deeply, here’s some more coverage and commentary around Sora 2’s launch:

The First 24 Hours of Sora 2 Chaos: Copyright Violations, Sam Altman Shoplifting, and More

OpenAI launch of video app Sora plagued by violent and racist images: ‘The guardrails are not real’

Sam Altman says Sora will add ‘granular,’ opt-in copyright controls

OpenAI’s new social video app will let you deepfake your friends

Reddit stock falls for second day as references to its content in ChatGPT responses plummet

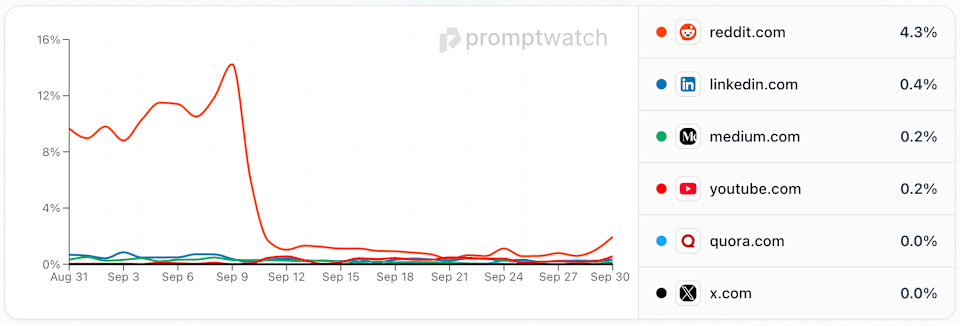

Reddit had a bad week, with its stock falling by 15% following reports that it was no longer featuring as prominently in ChatGPT’s responses. The data the report was based on is here.

It looks like OpenAI made a big change to their citations around 9th Sept, and some of the most prominent data sources are now more in line with the other data sources. For example, Reddit used to make up c.12% of social data sources, but since the changes has been tracking at around 4%. Similarly, Wikipedia used to make up c.7% of knowledge base data sources, but since the changes has been tracking at around 3%. Reddit and Wikipedia are both still top data sources, they’re just not as prominent as they used to be.

My initial gut feel is that OpenAI have realised that some data sources (Reddit, Wikipedia etc.) were just too prominent in their citations. What they did around 9th Sept looks like a correction to bring them more in line with other data sources, so that ChatGPT’s responses are more balanced in terms of where they get their data from.

Seems sensible, but significant changes like this have a real impact on how marketers think about their strategies around AI platforms, and so have unintended downstream effects. Hopefully things like this will settle down as this whole space matures.

Web 4.0

Google is destroying independent websites, and one sees no choice but to defend it anyway

Opera releases Neon, its AI-powered browser with a built-in agent

Perplexity’s Comet AI browser now free; Max users get new ‘background assistant’

PayPal’s Honey to integrate with ChatGPT and other AIs for shopping assistance

AI Ethics News

California Governor Newsom signs landmark AI safety bill SB 53

OpenAI rolls out safety routing system, parental controls on ChatGPT

Ex-OpenAI researcher dissects one of ChatGPT’s delusional spirals

Meta plans to sell targeted ads based on data in your AI chats

OpenAI is the world’s most valuable private company after private stock sale

Tilly Norwood: how scared should we be of the viral AI ‘actor’?

DeepSeek releases ‘sparse attention’ model that cuts API costs in half

Long Reads

One Useful Thing - Real AI Agents and Real Work

Simon Willison - Claude Sonnet 4.5 is probably the “best coding model in the world” (at least for now)

Tim Berners-Lee - Why I gave the World Wide Web away for free

“The future is already here, it’s just not evenly distributed.”

William Gibson