A week in Generative AI: 12 Days, Nova & World Models

News for the week ending 8th December 2024

Wow, what a week! It’s been a quiet last four weeks or so with the US election and Thanksgiving, but now that we’re through these events it looks like all the big AI firms are trying to get a lot of great announcements out of the door before the end of the year.

We have the 12 days of OpenAI (we’re two days in so far), unexpected new foundation models from Amazon, a new version of Meta’s Llama as well as interesting new ‘world models’ from both Google DeepMind and World Labs. So this week’s newsletter is a bit of a bumper edition!

On the ethics front, there are worrying signs that OpenAI’s new o1 model tries to deceive humans, Meta is joining the nuclear-powered data centre bandwagon, and the LA Times is planning on introducing a ‘bias meter’ on their editorial content. There are also some great long reads from Stratechery and the Wall Street Journal that are well worth checking out.

12 Days of OpenAI

On Wednesday, Sam Altman announced that OpenAI would be hosting a livestream each weekday for the next 12 days where they would be launching or demoing something new. So far we’ve had the following:

Day One: o1 and ChatGPT Pro

Day Two: Reinforcement Fine-Tuning

o1 was an expected announcement, since o1 has been in preview for nearly 3 months now. o1’s full release doesn’t seem to move on the capabilities of the model that much and mostly represents speed improvements, ability to work with attachments, and other refinements. o1 is actually a small step backwards in some areas as it has been refined. You can find my initial thoughts on o1 here, which all still ring true and I think we’ll really see o1 come into its own when it is powering a more agentic experience next year.

ChatGPT Pro is a new $200 subscription tier that currently only gives subscribers access to an o1 pro mode, which is only marginally better in the benchmarks than o1. o1 pro mode has been positioned as the best model for ‘really hard problems’. It can ‘think harder for the hardest problems’ and isn’t for most people, who will be best served by the free tier or the plus tier. I suspect there is more to come for ChatGPT Pro subscribers in the next 10 days of announcements that will make the $200 price point a little easier to swallow. One of those things is likely to be early access to ChatGPT-4.5, which is rumoured to be a part of the upcoming announcements along with a publicly available version of Sora.

Reinforcement Fine-Tuning was more of a technical release and is only in limited alpha at the moment for researchers, universities and enterprises with complex tasks. Reinforcement Fine-Tuning is a new way to customise OpenAI’s models that allows developers to create expert models that are specialised for specific tasks with as few as 12 examples, as opposed to the thousands of examples that are usually required for regular fine-tuning. This reduces the costs of fine-tuning, opens up new use cases where data is limited, and speeds up the fine-tuning process. Fine-tuning has always been a more complex and technical undertaking, so it will be interesting to see what use cases this opens up.

So, we have five more announcements/demos due next week and then five more the week after. I’m looking forward to seeing what else OpenAI has in store for us and will cover all of next week’s announcements in next week’s newsletter.

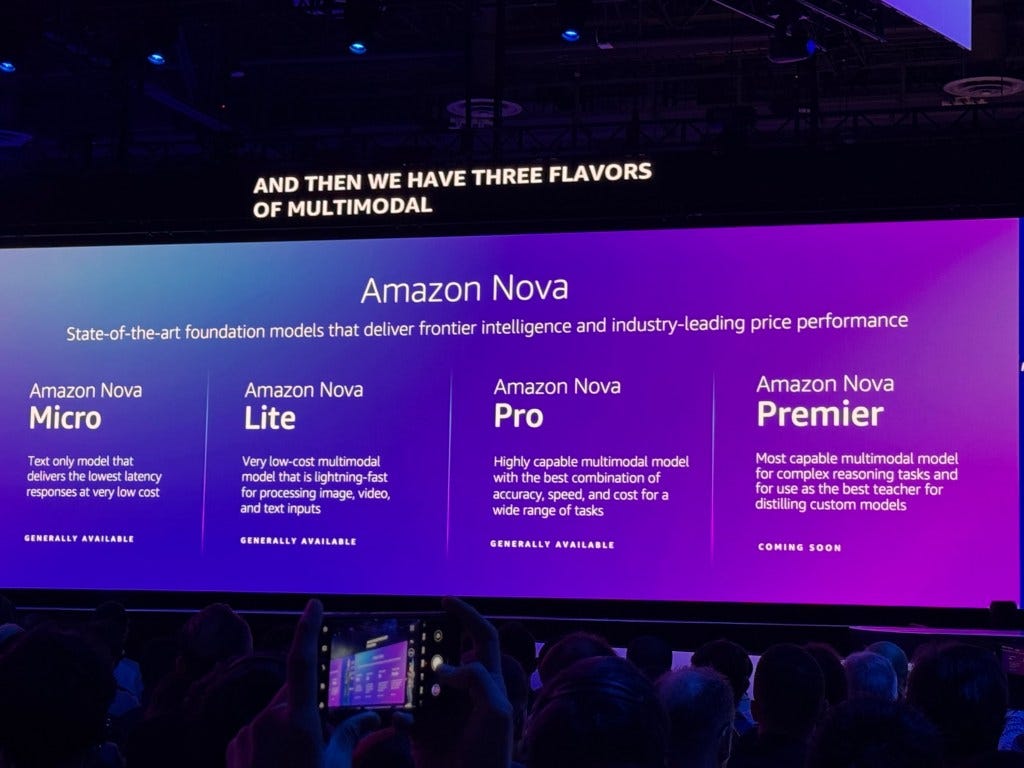

Amazon announces Nova, a new family of multimodal AI models

Amazon had their re:Invent conference this week and the big headline announcement was the unexpected release of Nova, a family of multimodal foundation models that are looking to compete with OpenAI, Anthropic, and Google’s models. All models were immediately available with the exception of the Premier model which is due in early 2025. There are also Canvas and Reel versions which generate images and video respectively.

This was all quite unexpected as Amazon have invested over $8bn in Anthropic over the last 12 months and had previously failed to get their own foundation models off the ground. However, it seems that Amazon were just hedging their bets with their investment in Anthropic.

Based on the published benchmarks, and some initial testing, I would peg Nova alongside the Llama family of models, which I think are about 6 months behind the frontier, which currently consists of ChatGPT 4o, o1, Claude 3.5 v2 (we really need better naming conventions!), and the latest Gemini 1.5 Pro model. The Nova models also claim to be the fastest and most cost-effective models in their respective intelligence classes, which seems to hold true based on the models’ pricing and benchmarks.

This is a decent first showing from Amazon, and it will be interesting to see if they can catch up with the frontier models next year.

Meta unveils a new, more efficient Llama model

Not to be left behind by all the new model announcements this week, Meta announced Llama 3.3 70B, a new addition to the third generation of Llama models that delivers the same performance as Meta’s largest model, Llama 3.1 405B, at a lower cost.

The new model was announced by Ahmad Al-Dahle, VP of generative AI at Meta, and because it is a smaller model, it is much easier and more cost-efficient for developers to run. The big AI companies are getting really good at rapidly decreasing the size and cost of models whilst maintaining 90% of the performance, and I expect this trend to continue. There are huge benefits to this approach, rapidly decreasing the cost of ‘intelligence’ and ultimately resulting in incredibly powerful models that can run natively on devices like mobile phones without requiring an internet connection. This will lead to lots of new consumer-facing use cases, and I’m really looking forward to seeing where this direction leads us!

DeepMind’s Genie 2 can generate interactive worlds that look like video games

The original Genie model, released in March, was limited to generating interactive, side-scrolling, platformer-like games. Genie 2 takes this idea further and allows the generation of rich, playable 3D worlds from a single image and a text prompt. The model is able to simulate object interactions, animations, lighting, physics, reflections, and the behaviour of NPCs (non-playable characters).

DeepMind say that Genie 2 can generate consistent worlds with different player perspectives like first-person and isometric views, allowing users (players?!) to interact for up to a minute. Genie 2 represents a new type of model, often referred to as a ‘world model’, that can simulate games and 3D environments. There are still issues to overcome, like inconsistencies and hallucinations, as well as the overall fidelity of the output.

The end game is to be able to simulate high-fidelity, realistic ‘real world’ environments, which is gearing up to be a big area of investment and interest over the coming years. There are two big benefits to ‘world models’. First, they will allow AI models to understand the real world, which could be the final unlock that’s needed for artificial general intelligence. Second, the models will help with the simulated training needed for robotics. Both of these use cases are big, ambitious goals for the AI industry, so I expect lots more updates on these approaches next year.

Fei-Fei Li’s World Labs generate 3D environments from a single picture

Just like buses, Fei-Fei Li’s World Labs also came out this week with their take on generating 3D environments from a single image. Much like DeepMind’s Genie 2, the model can generate interactive 3D environments that users can navigate around.

It’s very similar to Genie 2, but World Labs claims that this is a first step towards creating something they call spatial intelligence which is what allows humans to understand and interact with the physical world, visualise things in our ‘mind’s eye’, and use spatial reasoning and spatial thinking to move through the world and create new physical objects.

World Labs aims to develop AI systems that can match human spatial intelligence so that they can understand and interact with the physical world in the same way humans do.

Google says its new AI models can identify emotions

On Thursday, Google announced a new family of models called PaliGemma 2 which can analyse images, generate captions, and ‘identify’ the emotions of people in the images.

This has caused some controversy as the detection of emotions is very prone to cultural bias dependent on the training data. This is because there are major differences in the way people from different backgrounds visually express how they’re feeling. Google says that they have conducted ‘extensive testing’ to evaluate demographic biases in their models, but many commentators remain unconvinced.

Despite the potential bias in the training data and models, I think that models being able to reliably and accurately detect emotions could be of huge benefit. There’s obviously a lot more work to be done to ensure that the training data is diverse, balanced, and unbiased and that any model is robustly evaluated and safe to use. But if these challenges could be overcome, an emotionally intelligent AI model could have a huge number of interesting use cases.

AI Ethics News

Meta says AI content made up less than 1% of election-related misinformation on its apps

Revealed: bias found in AI system used to detect UK benefits fraud

Billionaire Owner of LA Times Plans to Use an AI-Powered ‘Bias Meter’ on Editorials

Music sector workers to lose nearly a quarter of income to AI in next four years, global study finds

Google DeepMind predicts weather more accurately than leading system

Ads might be coming to ChatGPT — despite Sam Altman not being a fan

Long Reads

Stratechery - The Gen AI Bridge to the Future

The Wall Street Journal - Googling Is for Old People. That’s a Problem for Google

MIT Technology Review - These AI Minecraft characters did weirdly human stuff all on their own

TechCrunch - The abject weirdness of AI ads

“The future is already here, it’s just not evenly distributed.”

William Gibson