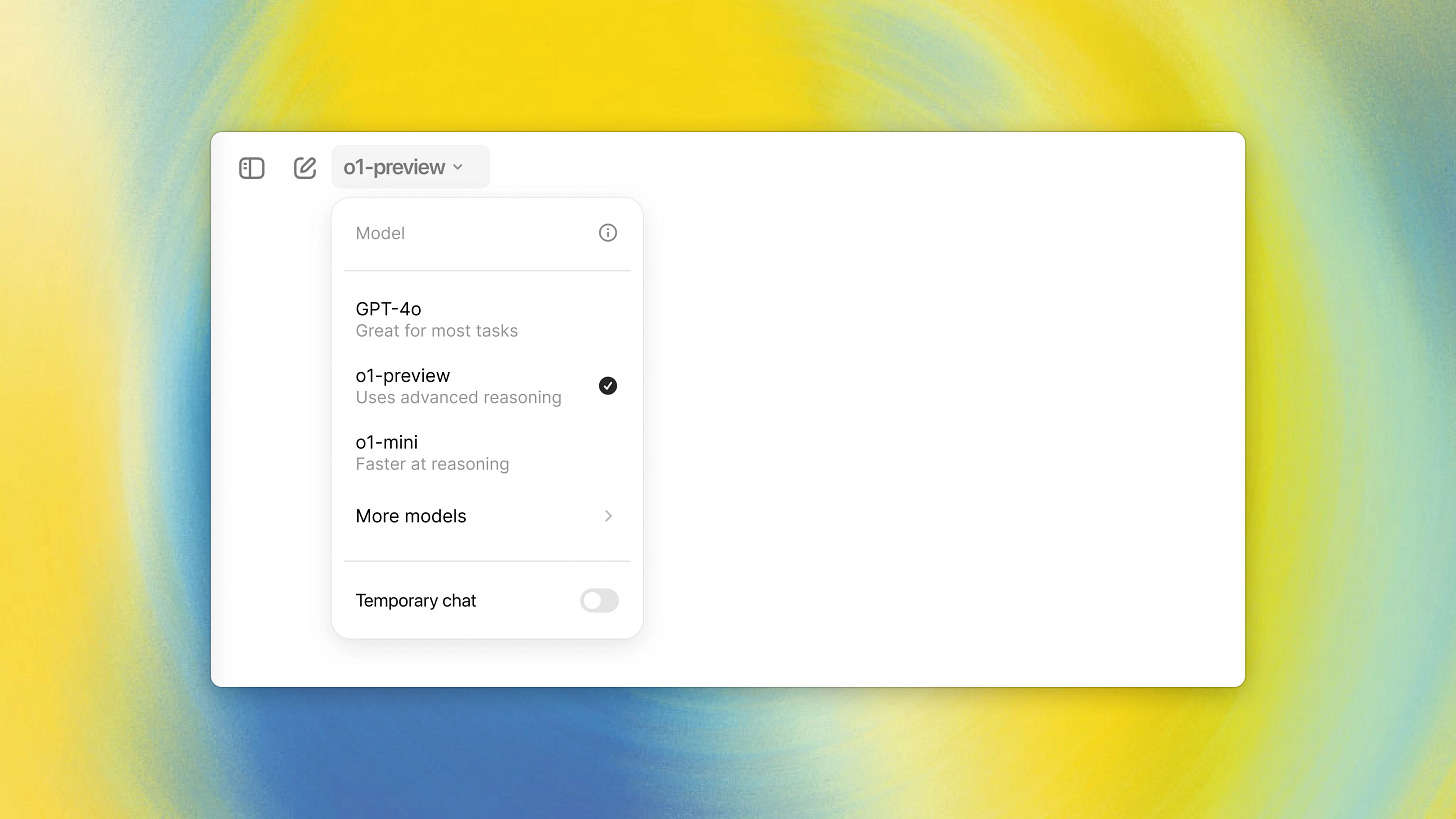

In a move that caught many by surprise, OpenAI recently unveiled o1, a preview of their latest large language model. This isn't just another incremental improvement: o1 represents a significant leap forward in generative AI capabilities, particularly in reasoning and problem-solving. As we've been exploring in the Beyond Chatbots series, the evolution of our current generation of chatbots is accelerating towards more sophisticated digital companions. OpenAI’s preview release of o1 is a major milestone on this journey, demonstrating new capabilities that bring us closer to artificial intelligence in its truest sense.

The Revolutionary 'Thinking' Ability of o1

What sets OpenAI’s o1 model apart from its predecessors is its ability to 'think' before answering. Unlike earlier large language models, o1 analyses the complexity of each query and adjusts its 'thinking' accordingly. For a simple question, o1 might respond almost instantly, but for a complex coding problem or an intricate mathematical proof it could spend several minutes deliberating before providing an answer.

This variable response time mirrors human thinking processes more closely than ever before. When we encounter a difficult problem, we don't always blurt out an answer immediately; we can take our time to consider, analyse, and plan our approach. o1 does the same, creating a plan to tackle the query and then executing that plan step by step.

Understanding o1's 'Thinking' Mechanism

The 'thinking' process of o1 is rooted in research outlined in OpenAI's "Let's Verify Step by Step" paper, published in May 2023. At its core, o1's approach involves generating multiple potential answers and then selecting the best one: a kind of brute-force approach to intelligence. While this might seem simplistic, it's not dissimilar to how humans often tackle problems, iterating and trying out different solutions until we find one that works. The real craft lies in determining which solution is the best one to present to the user.
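OpenAI hasn't published o1's exact mechanism, so what follows is only a minimal sketch of the "generate several answers, keep the best" idea described in the "Let's Verify Step by Step" paper. Several step-by-step candidate solutions are sampled, a process reward model scores every step, each solution is rated by the product of its step scores (the paper's estimate of the probability that every step is correct), and the top-rated solution is returned. `sample_solutions` and `score_step` are hypothetical stand-ins: the "LLM" is a toy that sometimes makes an arithmetic slip, and the "verifier" simply rechecks the arithmetic instead of being a trained model.

```python
import math
import random
from typing import List

# A "solution" is a list of reasoning steps; the last step holds the final answer.
Solution = List[str]


def sample_solutions(question: str, n: int, rng: random.Random) -> List[Solution]:
    """Hypothetical stand-in for an LLM: returns n step-by-step attempts at 'a*b',
    roughly half of which contain a deliberate arithmetic slip."""
    a, b = (int(t) for t in question.split("*"))
    tens, ones = divmod(b, 10)
    solutions = []
    for _ in range(n):
        partial_tens = a * tens * 10
        partial_ones = a * ones
        if rng.random() < 0.5:  # simulate a reasoning mistake
            partial_ones += rng.choice([-2, -1, 1, 2])
        total = partial_tens + partial_ones
        solutions.append([
            f"{a}*{tens * 10} = {partial_tens}",
            f"{a}*{ones} = {partial_ones}",
            f"{partial_tens}+{partial_ones} = {total}",
        ])
    return solutions


def score_step(step: str) -> float:
    """Hypothetical process reward model: estimated probability that one step is
    correct. A real PRM is a trained model; this toy just re-checks the arithmetic."""
    lhs, rhs = step.split(" = ")
    if "*" in lhs:
        x, y = lhs.split("*")
        ok = int(x) * int(y) == int(rhs)
    else:
        x, y = lhs.split("+")
        ok = int(x) + int(y) == int(rhs)
    return 0.99 if ok else 0.05


def solution_score(solution: Solution) -> float:
    # Probability that every step is correct: the product of the per-step scores.
    return math.prod(score_step(step) for step in solution)


def best_of_n(question: str, n: int, seed: int = 0) -> Solution:
    # Sample n candidates, then keep the one the verifier rates highest.
    rng = random.Random(seed)
    candidates = sample_solutions(question, n, rng)
    return max(candidates, key=solution_score)


best = best_of_n("17*24", n=8)
print(best[-1])  # 340+68 = 408
```

Raising `n` spends more compute at answer time in exchange for a better chance that at least one candidate is correct; as noted above, the hard part is a verifier good enough to pick that candidate out.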

The approach OpenAI have developed allows o1 to break down complex tasks, show a summary of its thinking process, justify its approach, and provide comprehensive answers. It's a significant step towards more transparent and explainable AI, addressing one of the key concerns in current generative AI development.

o1's 'thinking' capabilities are the result of a novel approach to training large language models that leverages synthetic data and focuses on chain-of-thought reasoning. OpenAI developed a large-scale reinforcement learning algorithm to teach o1 to think productively. The "Let's Verify Step by Step" research this work builds on used a roughly 1.5B-token dataset called MathMix, which included math problems, solutions, free-form discussions of math concepts, and synthetic data. Crucially, the reinforcement learning algorithm taught o1 to refine its thinking process, try different strategies, and recognise its own mistakes. This allowed o1 to learn how to break down complex tasks, reason through multiple steps, and correct errors in its chain of thought. Importantly, o1's performance consistently improved both with more reinforcement learning during training and with more time spent 'thinking' at test time. This marks a significant departure from previous models, which treated all queries uniformly regardless of complexity.
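To see why extra 'thinking' at test time can pay off, consider a deliberately idealised model. This is an assumption for illustration, not OpenAI's published method: each independent attempt solves a problem with probability p, and a perfect verifier always recognises a correct attempt. With N attempts, the chance of ending up with a correct answer is:

```latex
P(\text{solved} \mid N) = 1 - (1 - p)^{N}

% Worked example with p = 0.3:
P(\text{solved} \mid 1)  = 1 - 0.7^{1}  = 0.30
P(\text{solved} \mid 10) = 1 - 0.7^{10} \approx 0.97
```

Real verifiers are imperfect and real attempts are correlated, so actual gains are smaller, but the direction matches the observation that more test-time compute tends to help.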

But perhaps the most interesting element of o1 is the potential for ongoing improvement through what's known as the 'data flywheel' effect. As more people use o1, OpenAI gains access to more real-world data on complex reasoning processes. This data can then be used to train future iterations of the model, creating a virtuous cycle of improvement. It's a key reason why OpenAI has released o1 in preview form, as real-world interaction is crucial for refining and enhancing the model's capabilities in the future.

Limitations and Challenges

Whilst an impressive technical achievement, o1 is not without its limitations. It's important to understand that while o1 can solve more complex problems using its existing knowledge, it's not yet capable of true reasoning from first principles or discovering entirely novel solutions to existing problems. These capabilities would represent another significant leap forward in AI development.

Moreover, in its current preview form, o1 can be somewhat 'brittle'. It occasionally produces nonsensical text and sometimes gets simple things wrong that most people would easily get right.

In my testing of o1 for complex coding tasks, I've found its performance to be really impressive. It's almost too good! The model is great at breaking down complex tasks, clearly showing its thinking process, and providing detailed justifications for its approach. The comprehensiveness of its answers is remarkable, but it could also prove quite challenging for users.

o1's responses can be overwhelmingly detailed and lengthy, which is a big shift from the quick, concise responses we've grown accustomed to with the current generation of chatbots. It will require quite a mental adjustment for users to get used to this, but the depth and quality of the content generated by o1 can be invaluable for complex problem-solving tasks.

o1's Role in OpenAI's Future

While OpenAI's o1 is impressive in its own right, it will really shine when it's integrated into OpenAI's next frontier model. As with SearchGPT, OpenAI is choosing to preview capabilities and features of its next model as standalone models in order to get user feedback, generate usage data for future fine-tuning and, most importantly, get users comfortable with some of the advanced capabilities that will arrive over the coming months and years.

Of all the capabilities and features that OpenAI have started to preview, o1 is by far the most impressive and significant in what it represents for the future of AI. The reasoning and planning capabilities demonstrated by o1 show glimpses of one of the core building blocks needed to evolve our current generation of chatbots into truly capable digital companions, a central theme in my Beyond Chatbots series. o1's capabilities will be vital in enabling digital companions to take on more complex, multi-step tasks with less human intervention.

These advancements represent a significant shift in how we will interact with technology going forwards. As chatbots become more capable of complex reasoning and independent problem-solving, people will need to adapt to being less 'in-the-loop' and involved in all the details of some problem-solving processes.

This transition will raise important questions about trust and transparency in AI systems. As we rely more heavily on generative AI for complex tasks, it becomes very important that we can understand and verify the reasoning behind AI-generated solutions and actions. o1's ability to show its 'thinking' process is a step in the right direction, but building and maintaining trust in AI systems will be an ongoing challenge as capabilities continue to advance.

Conclusion

OpenAI's o1 represents a big leap forward in the reasoning capabilities of large language models. While it's not yet the holy grail of artificial general intelligence, it's a crucial stepping stone on that path. The ability to 'think' before answering, to adjust processing based on task complexity, and to show its work in solving problems are all major advancements that will have a big impact on the future development of large language models.

As users begin to interact with o1 and other future AI models, they will need to make significant mental adjustments. The shift from quick, concise responses to more elaborate, thought-out answers will require a new approach to interacting with large language models. Users will need to adapt to more detailed and comprehensive outputs, and a potentially less collaborative problem-solving process. This change in user experience is not a one-time adjustment but likely the beginning of a trend. As generative AI technology continues to advance at a rapid pace, we may find ourselves needing to adapt to new capabilities and interaction paradigms on a regular basis.

The ongoing evolution of AI capabilities will demand a level of flexibility and openness to change that may be uncomfortable for some users. However, it also opens up exciting possibilities for more sophisticated problem-solving and assistance across various domains. To get the most from the technology over the coming years, it will be essential for us to remain engaged, adaptable, and critical in our approach. By doing so, we can help shape a future where digital companions truly enhance our capabilities and contribute positively to our society.

If you want to find out more about o1, I’ve listed some resources below that are worth exploring:

OpenAI’s introduction to o1-preview (including some great demo videos)

OpenAI’s article on Learning to Reason with LLMs

OpenAI’s overview of o1-mini

o1’s system card

AI Explained’s first impressions of o1

Ethan Mollick on OpenAI's "Strawberry" and Reasoning

“The future is already here, it’s just not evenly distributed.”

William Gibson