OpenAI hosted its first DevDay today, which was also livestreamed. There were a lot of announcements, some for developers and some for ChatGPT users, but absolutely all of them move the generative AI game on again and will have a big impact on how we use the technology over the coming weeks and months. Below is a special newsletter summarising the announcements, along with my initial thoughts.

GPTs

By far and away the most significant announcement from OpenAI was GPTs, OpenAI’s first step into generative AI agents that can perform tasks for you. So here’s a quick rundown of what they are and what they can do:

What GPTs are

GPTs are customised versions of ChatGPT that anyone can create for a specific purpose.

Anyone can create a GPT using ChatGPT itself. You don’t need to be able to code or be technical: ChatGPT will lead you through a Q&A to create one, even creating the avatar using DALL-E!

You can add files to a GPT for it to draw on, giving it specific knowledge to reference (the underlying model isn’t retrained).

You can also set a GPT up to use Web Browsing, DALL-E and Code Interpreter.

GPTs can be shared publicly, shared privately with a link, restricted to just your enterprise (if you’re an enterprise customer) or kept completely private to just you.

There will be a GPT store launched later this month where the revenue generated by GPTs will be shared with their creators (more details TBC).

There are also more sophisticated capabilities that developers can add to their GPTs through custom actions. Custom actions allow GPTs to integrate external data or connect to APIs, allowing them to interact with the real world.
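To make custom actions a little more concrete: as far as I understand it, an action is described to a GPT with an OpenAPI schema that lists the endpoints it is allowed to call. Below is a minimal sketch in Python that builds such a schema for a made-up calendar endpoint. The server URL, path, operation name and parameter are all hypothetical and only there for illustration.

```python
import json


def build_action_schema() -> dict:
    """Return a minimal OpenAPI description of one endpoint a GPT could call.

    Everything here (URL, path, operationId, parameter) is hypothetical.
    """
    return {
        "openapi": "3.1.0",
        "info": {"title": "Calendar lookup (example)", "version": "1.0.0"},
        "servers": [{"url": "https://api.example.com"}],
        "paths": {
            "/events": {
                "get": {
                    "operationId": "listEvents",
                    "summary": "List calendar events for a given day",
                    "parameters": [
                        {
                            "name": "date",
                            "in": "query",
                            "required": True,
                            "schema": {"type": "string", "format": "date"},
                        }
                    ],
                    "responses": {"200": {"description": "Events for that day"}},
                }
            }
        },
    }


# Serialise the schema to JSON text, ready to be pasted into a schema field.
schema_json = json.dumps(build_action_schema(), indent=2)
print(schema_json)
```

The summary and parameter descriptions matter more than they look, since they are what the model reads when deciding whether and how to call the endpoint.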

What GPTs can do

The simple answer is almost anything that involves an API and/or data, so essentially anything that can be done online.

There were some good demos shared in the DevDay livestream:

Code.org lesson planner - this is a GPT that helps Code.org to create new, engaging coding lessons based on Code.org’s internal knowledge.

Canva GPT - this is a GPT that generates graphic designs, such as posters, and then lets the user continue working on them in Canva for the full design experience.

Zapier GPT - This was the big one they demoed. This GPT can perform actions over the 6,000 API integrations that Zapier has, meaning the possibilities are endless. In the demo they showed Zapier GPT interacting with a user’s calendar, identifying scheduling conflicts and then messaging attendees directly from the GPT.

I highly encourage everyone to watch the DevDay livestream segment from 26:30 where Sam Altman creates a GPT from scratch live. It really shows you how simple it is and some of the core capabilities.

Opinion

I can’t overstate how game-changing I think GPTs are going to be. As Sam Altman said when announcing them, this is just their first small step towards a future of generative AI agents and already I can see how much promise they hold.

It’s as impossible now to envisage all the different GPTs that will be built as it was back in July 2008 to envisage all the apps that would be built when Apple launched the App Store. Back then the App Store launched with just 500 apps; now, 15 years later, there are c.1.6m.

Enough said.

You can find out more about OpenAI’s new GPTs here.

General Updates

Alongside GPTs, there were many other user-facing announcements for ChatGPT:

ChatGPT is getting a visual overhaul with a simpler, more intuitive user interface.

ChatGPT Plus users will now default to the new GPT-4 Turbo model, which includes some impressive enhancements:

Knowledge is now up to April 2023, instead of January 2022.

Context length is now up to 128k tokens, up from 8k, which means you can include content that’s up to 300 pages of a standard book in length when asking a question 🤯.

The model picker is gone! ChatGPT will just know what you want to use (browsing, plugins, code interpreter, DALL-E) and when to use it.

Opinion

These are some nice little updates, and I know this was specifically a developer conference, but I was hoping for a few more updates to basic ChatGPT functionality for general users. There are still some major user features missing, like being able to search your chat history, pin chats and information, and even just update your email address. I was also secretly hoping that you’d be able to connect your other digital accounts to ChatGPT so that it would know more about you and be more useful. I guess this is partly being delivered via GPTs, if you want to build your own personal GPT, so I can’t complain. However, I think ChatGPT will need to move the user interface game on in the coming months when it starts to see more competition from Google when they release their Gemini model.

Developer Updates

Unsurprisingly there is a lot to unpack for developers from the DevDay livestream. I’ve outlined all the main announcements below:

JSON Mode - this ensures that the model will respond with valid JSON, making calling APIs much easier.

Reproducible Outputs - you can pass a seed parameter to the API and it will make the model return repeatable outputs, giving you a much higher degree of control over model behaviour.

Log probabilities (logprobs) - the API will be able to return the log probabilities of the most likely output tokens, a feature due to roll out in the coming weeks.

World Knowledge - you’ll now easily be able to bring in your own data and documents to give your API based apps more specific domain knowledge.

More modalities - DALL-E 3, GPT-4 Turbo with Vision and Text-To-Speech are now all available via the API.

Images as inputs - GPT-4 can now accept images as inputs via the API.

Whisper v3 - the new version will be coming to the API soon.

Fine-tuning - Fine-tuning was announced for GPT-3.5 Turbo 16k and a GPT-4 fine-tuning experimental access program has been opened.

Custom models - This is a new, expensive program, but allows companies to work with OpenAI consultants/developers to fine-tune their own models.

Rate limits - rate limits for the API are being doubled and it will be easier to request further increases beyond that.

Copyright Shield - similar to previous announcements from Google et al, OpenAI will take the copyright liability for you and pay any costs incurred.

Pricing - GPT-4 Turbo API prices will be c.2.75x cheaper than GPT-4 API prices. GPT-3.5 Turbo 16k API prices are also decreasing.

Speed - GPT-4 Turbo will become significantly faster over the coming months.

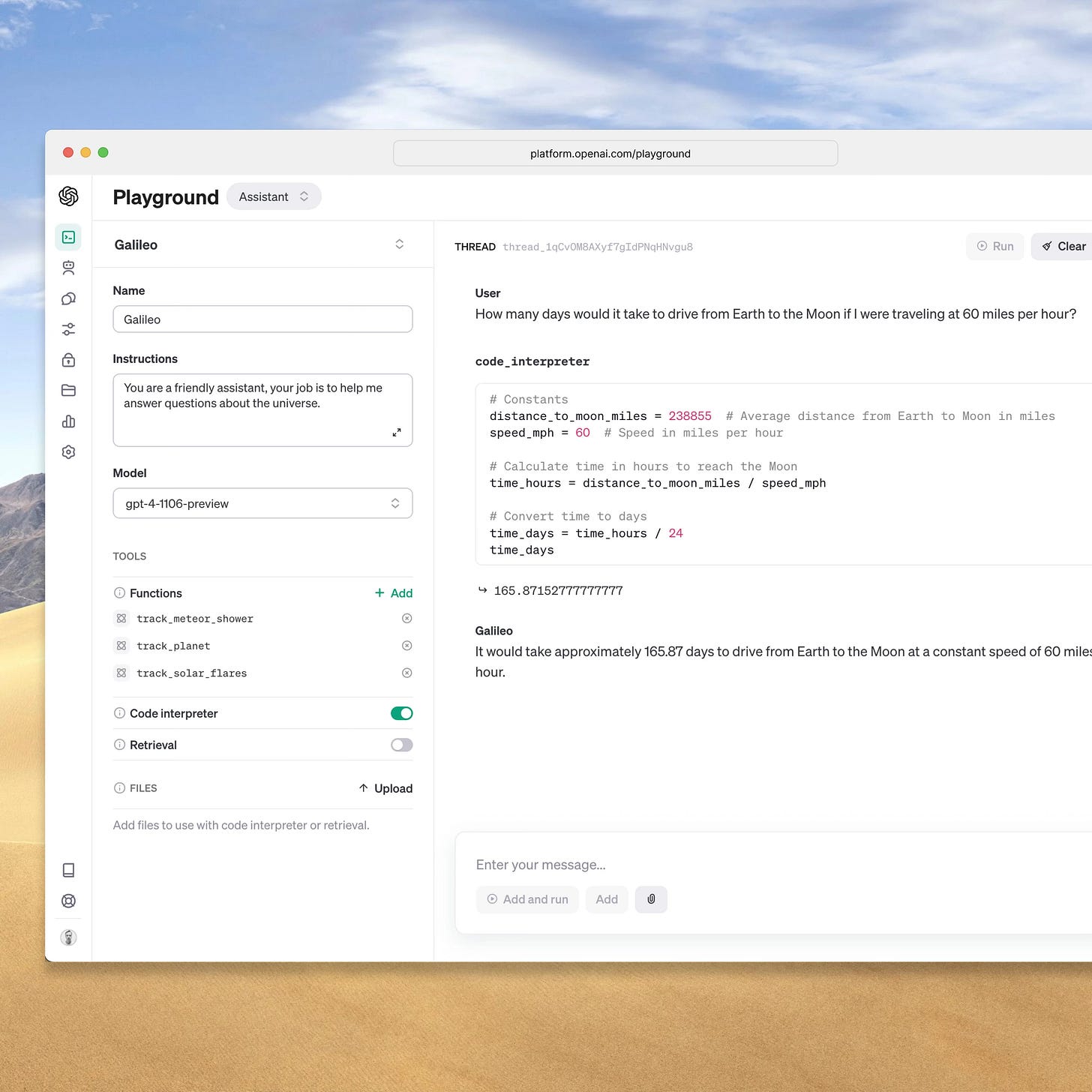
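For anyone wondering where the c.2.75x figure comes from, here is a quick back-of-the-envelope check in Python. The per-1,000-token prices are the launch list prices as I understand them (they aren’t stated above, so treat them as assumptions), and the 9:1 input-to-output token mix is my own assumption, chosen because it reproduces the headline number.

```python
# Assumed list prices in US dollars per 1,000 tokens (not taken from the text above).
GPT4_PRICES = {"input": 0.03, "output": 0.06}
GPT4_TURBO_PRICES = {"input": 0.01, "output": 0.03}


def blended_cost(prices: dict, input_share: float) -> float:
    """Average cost per 1,000 tokens when `input_share` of tokens are input."""
    return prices["input"] * input_share + prices["output"] * (1 - input_share)


def savings_ratio(input_share: float) -> float:
    """How many times cheaper GPT-4 Turbo is than GPT-4 for this token mix."""
    return blended_cost(GPT4_PRICES, input_share) / blended_cost(
        GPT4_TURBO_PRICES, input_share
    )


# Hypothetical workload: 90% of tokens are prompt (input), 10% are completion (output).
ratio = savings_ratio(0.9)
print(f"{ratio:.2f}x cheaper")
```

Under these assumed prices, a workload that is all input tokens comes out 3x cheaper and one that is all output tokens comes out 2x cheaper, so the saving you actually see depends on your own mix.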

Assistants API - this is a biggie with lots of capabilities in the API which will make developing generative AI user experiences soooo much easier with OpenAI’s models:

Threading - the API will keep track of all user conversations for you.

Retrieval - the API will take care of all the pain with building Retrieval Augmented Generation features for you.

Code Interpreter - finally a version available via API!

Function Calling - all the improvements with JSON Mode and Reproducible Outputs as mentioned above.
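In practice, JSON Mode and Reproducible Outputs each boil down to one extra field on a Chat Completions request. Here is a minimal sketch in Python that builds such a request body and parses a reply, without calling the API. The helper names, the example question and the sample reply are all made up for illustration, and my understanding is that seeded outputs are repeatable on a best-effort basis rather than guaranteed.

```python
import json


def build_chat_request(question: str, seed: int = 42) -> dict:
    """Build a Chat Completions request body using JSON mode and a fixed seed."""
    return {
        "model": "gpt-4-1106-preview",  # GPT-4 Turbo preview model name at launch
        "seed": seed,  # same seed and inputs should give (mostly) repeatable output
        "response_format": {"type": "json_object"},  # turns on JSON mode
        "messages": [
            # JSON mode expects the prompt itself to ask for JSON.
            {
                "role": "system",
                "content": "Reply in JSON with the keys 'answer' and 'confidence'.",
            },
            {"role": "user", "content": question},
        ],
    }


def parse_reply(content: str) -> dict:
    """Parse the message content returned by the model as JSON."""
    return json.loads(content)


request_body = build_chat_request("What is the capital of France?")
print(json.dumps(request_body, indent=2))

# Made-up example of what the model's message content could look like.
sample_content = '{"answer": "Paris", "confidence": "high"}'
reply = parse_reply(sample_content)
print(reply["answer"])
```

It is still worth wrapping the parsing step in error handling, because a reply that gets cut off by the token limit may not be complete JSON.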

There was a great demo of many of these capabilities that can be seen from 33:30 in the DevDay livestream. There was even a nice little touch at the end where they got a GPT to give everyone in attendance at the conference $500 of OpenAI credits on their account!

Opinion

These announcements have delivered against many of the major requests that OpenAI has been getting from developers and give the developer community a lot of new toys to play with. I’m most excited about the Assistants API and how it takes the pain away from building RAG features - this has been a really technical area to date, and having the API take care of all of that for you is a huge game changer.

There is still a lot to digest and reflect on following the DevDay livestream and it’s going to be great to not only see the reactions from the industry but also how people start using all these new features and start building GPTs. We’ve got a fun and exciting few weeks ahead of us seeing this all unfold!

“The future is already here, it’s just not evenly distributed.”

William Gibson