An Introduction to Artificial Intelligence: Part 1

What it is, what Generative AI is, the emergence of ChatGPT and why it's taken the world by storm.

What is Artificial Intelligence?

Hi, and welcome to the first post in our Classroom series that covers an Introduction to Artificial Intelligence.

Artificial Intelligence is a term that has been around since the mid-1950s. It was defined as a field of computer science focused on creating technology that can perform tasks associated with human intelligence, such as learning, reasoning and decision making.

“A field of computer science focused on creating technology that can perform tasks associated with human intelligence, such as learning, reasoning and decision making”

The term was coined in 1956 by a team of researchers who built on earlier work by Alan Turing.

75 years of AI Development

AI development started back in 1947, when Alan Turing gave the first public lecture to mention computer intelligence, but the term artificial intelligence wasn't coined until 1956. As you can see in the timeline above, a lot of work was done through to the mid-1960s.

Whilst there was ongoing work in AI throughout the 1970s, 80s, 90s and early 2000s, it wasn't until 2011 that AI re-entered the public imagination, when IBM Watson appeared on the American TV quiz show Jeopardy! against some of the show's champions. Watson took first place, won a prize of one million dollars and grabbed lots of headlines in doing so.

Since then, AI has developed rapidly. Some interesting milestones from the last 12 years include:

📍 In 2017 Google researchers introduced the transformer architecture, and it was revolutionary. It was a pivotal piece of technology and underpins all the advances in Generative AI that we see today.

📍 In June 2018 OpenAI released GPT-1, one of the first foundation models. It had 117 million parameters, which is incredibly small compared to today's models, but it already showed a lot of promise.

📍 In October 2018 Google released BERT, another foundation model of a similar size to GPT-1.

📍 In February 2019 OpenAI released GPT-2, which had an order of magnitude more parameters: 1.5 billion.

📍 In May 2020 there was another big leap in size with the release of GPT-3, which had 175 billion parameters.

📍 In November 2022 OpenAI released ChatGPT, based on a GPT-3.5 model that had been fine-tuned for chat using reinforcement learning from human feedback (RLHF).
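To put these jumps in model size into numbers, the short Python sketch below divides each parameter count quoted in the milestones by the one before it. It is plain arithmetic on the figures above; the dictionary and function names are illustrative only.

```python
# Parameter counts as quoted in the milestones above.
PARAMETERS = {
    "GPT-1 (2018)": 117_000_000,
    "GPT-2 (2019)": 1_500_000_000,
    "GPT-3 (2020)": 175_000_000_000,
}

def growth_factors(counts):
    """Return how many times larger each model is than the one before it."""
    names = list(counts)
    return {
        f"{prev} -> {curr}": counts[curr] / counts[prev]
        for prev, curr in zip(names, names[1:])
    }

for step, factor in growth_factors(PARAMETERS).items():
    print(f"{step}: about {factor:.0f}x more parameters")
```

GPT-1 to GPT-2 works out at roughly a 13-fold increase (about one order of magnitude), and GPT-2 to GPT-3 at roughly 117-fold (about two orders of magnitude).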

Main Branches of AI

There are six main branches of Artificial Intelligence:

These branches fall into two main areas: those that deal with structured data and those that deal with unstructured data.

Structured Data

Structured data is data in a format that computers can read very easily, such as tables.

Unstructured Data

Unstructured data is where a lot of progress has been made over the last 10 years. It includes things like long passages of text, videos and images: data that doesn’t have an inherent structure, which makes it hard for computers to understand.

The domains of AI that deal with unstructured data have now reached the point where they can handle it in very sophisticated ways. This progress underpins the advances we’ve seen in Generative AI.
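To make the distinction concrete, here is a minimal Python sketch using made-up sales figures (the data and variable names are hypothetical). The same facts are stored once as structured records and once as a sentence of free text.

```python
import re

# Structured data: every record has the same named fields, so a query is trivial.
sales = [
    {"region": "North", "units": 120},
    {"region": "South", "units": 95},
    {"region": "North", "units": 60},
]
north_units = sum(row["units"] for row in sales if row["region"] == "North")

# Unstructured data: the same facts buried in free text.
report = (
    "Sales were strong in the North, where we shifted 120 units early on "
    "and another 60 later. The South managed 95."
)
# A hand-written pattern can pull out the numbers, but it cannot tell which
# region each number belongs to without understanding the sentence.
numbers_in_text = [int(n) for n in re.findall(r"\d+", report)]

print(north_units)       # 180
print(numbers_in_text)   # [120, 60, 95]
```

Answering "how many units did the North sell?" from the text needs some understanding of language, which is exactly the kind of problem the unstructured-data branches of AI tackle.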

What Is Generative AI?

“Generative AI refers to a category of artificial intelligence (AI) algorithms that generate new outputs based on the data they have been trained on. Unlike traditional AI systems that are designed to recognise patterns and make predictions, generative AI creates new content in the form of images, text, audio, and more.”

I think it says a lot that the definition above, from the World Economic Forum, was only published in February 2023. With Generative AI, for the first time we have built computer systems that can generate content on their own, based on what they have been trained on.

That said, it's important to put the term artificial intelligence into context for what it actually is, because the definition from the 1950s is very, very broad.

Applied Statistics

So what really is Artificial Intelligence in its current form? The acclaimed author Ted Chiang had a fantastic answer when the FT asked him what he would call Artificial Intelligence if he had to invent a different term for it. His answer was instant: Applied Statistics.

This term is really important to keep front of mind when thinking about Generative AI. None of these platforms or models are conscious and they don’t have intelligence per se; they’re just really, really good at maths.

Generative AI leverages two main branches of artificial intelligence that we covered earlier:

Neural Networks

Neural networks are a technology loosely inspired by the human brain. They are made up of millions of artificial neurons connected to each other, usually arranged in layers.
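As a rough illustration, a single artificial neuron can be written in a few lines of Python: it multiplies each input by a weight, adds a bias and squashes the result through an activation function. This sketch assumes a sigmoid activation and uses hand-picked, hypothetical weights; real networks use a variety of activation functions and learn their weights from data.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial neuron: a weighted sum of its inputs passed through a sigmoid."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 / (1 + math.exp(-total))

def layer(inputs, weight_rows, biases):
    """A layer is many neurons reading the same inputs, each with its own weights."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Hypothetical hand-picked numbers; a real network learns these during training.
inputs = [0.5, -1.0, 2.0]
hidden = layer(inputs, weight_rows=[[0.2, 0.4, -0.1], [-0.3, 0.1, 0.5]], biases=[0.0, 0.1])
output = neuron(hidden, weights=[1.0, -1.0], bias=0.0)
print(hidden, output)
```

Connecting the outputs of one layer to the inputs of the next is what turns individual neurons into a network; large models simply do this at an enormous scale.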

Natural Language Processing

Natural language processing is a technology that helps computers understand human language. It enables people to interact with computers and digital devices in a more natural and intuitive way. You will likely have come across a lot of this technology over the last five years or so with devices like Alexa, Siri and other voice assistants.

Terminology

New technologies bring new terms, and we need to know them to truly understand how the technology works. Four terms are especially important with Generative AI: Large Language Model, Tokens, Parameters and Dimensions.

Large Language Model

A Large Language Model (LLM) is a type of machine learning model that is trained on a vast amount of text data. It uses this training to generate human-like text based on the input it receives. Examples of LLMs include GPT-4 from OpenAI, LaMDA and PaLM 2 from Google (the models behind Bard) and LLaMA from Meta. They can perform a variety of natural language processing tasks such as translation, summarisation, answering questions and more.

Tokens

Tokens can be thought of as pieces of words. On average, a token is about four characters of English text, or roughly three quarters of a word; this falls out of how tokenisers split text into frequently occurring chunks. GPT-4, the latest model from OpenAI, can ingest a prompt of up to 32,000 tokens in its largest version, which is roughly the length of the book Animal Farm. This means a huge amount of content can be included in a prompt when you ask a Generative AI model questions.
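The rule of thumb above can be turned into a quick back-of-the-envelope calculator. This Python sketch only applies the four-characters-per-token and three-quarters-of-a-word ratios; it is not a real tokeniser, and actual counts depend on the model and the text. The function names are illustrative.

```python
CHARS_PER_TOKEN = 4      # rule of thumb for English text
WORDS_PER_TOKEN = 0.75   # roughly three quarters of a word per token

def estimate_tokens(text):
    """Rough token estimate from character count; real tokenisers will differ."""
    return max(1, round(len(text) / CHARS_PER_TOKEN))

def tokens_to_words(tokens):
    """Approximate how many English words fit in a given number of tokens."""
    return round(tokens * WORDS_PER_TOKEN)

prompt = "Summarise the main themes of Animal Farm in three sentences."
print(estimate_tokens(prompt))
print(tokens_to_words(32_000))  # 24000
```

By this estimate a 32,000-token prompt holds around 24,000 words. For exact counts, OpenAI's open-source `tiktoken` library implements the tokenisers its models actually use.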

Parameters

Parameters are talked about a lot in the Generative AI space. They are the numbers (weights) inside the model that are adjusted during training, and you can think of them as the model's knowledge or memory of all the data it's been trained on. GPT-3 has 175 billion parameters, which is a bit like someone having read a whole library of books and retained a great deal of it. These models have a huge amount of ‘memory’!
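Counting parameters is simple arithmetic. In a fully connected layer, every input connects to every neuron through its own weight, and each neuron adds one bias, so a layer with n inputs and m neurons has n × m + m parameters. The Python sketch below also estimates the raw storage for 175 billion parameters, assuming each is stored as a 16-bit (2-byte) number; the function names are illustrative.

```python
def dense_layer_parameters(n_inputs, n_outputs):
    """Weights (one per input-neuron connection) plus one bias per neuron."""
    return n_inputs * n_outputs + n_outputs

def storage_gigabytes(n_parameters, bytes_per_parameter=2):
    """Storage for the raw numbers, assuming 16-bit (2-byte) values by default."""
    return n_parameters * bytes_per_parameter / 1e9

print(dense_layer_parameters(3, 2))        # 8
print(storage_gigabytes(175_000_000_000))  # 350.0
```

At two bytes each, GPT-3's parameters alone come to roughly 350 GB, which is why models of this size run on specialised hardware rather than on a laptop.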

Dimensions

Dimensions are like different aspects, features or meanings of a word. So, in the example above, the word ‘intelligence’ has similarities to concepts such as ‘problem-solving’, ‘understanding’, ‘knowledge’ and ‘learning’. Dimensions allow a large language model to understand how close a word is to other words or concepts.

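Each word (strictly, each token) is represented by what's called a vector of numbers, with one number per dimension. The smallest GPT-3 model uses 768 dimensions and the full 175-billion-parameter model uses 12,288. These numbers are adjusted during training to help the model accurately predict the next word in a sentence.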
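One common way to measure how close two word vectors are is cosine similarity, which compares the direction the vectors point in. The Python sketch below uses four hand-made, hypothetical 4-dimensional vectors purely for illustration; real models learn their vectors during training and use far more dimensions.

```python
import math

# Hypothetical 4-dimensional vectors, hand-made for illustration only.
# Real models learn their vectors and use hundreds or thousands of dimensions.
EMBEDDINGS = {
    "intelligence": [0.9, 0.8, 0.1, 0.0],
    "learning":     [0.8, 0.9, 0.2, 0.1],
    "knowledge":    [0.7, 0.7, 0.0, 0.2],
    "banana":       [0.0, 0.1, 0.9, 0.8],
}

def cosine_similarity(a, b):
    """1.0 means the vectors point the same way; values near 0 mean little in common."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def most_similar(word):
    """Rank every other word by how close its vector is to the given word's vector."""
    target = EMBEDDINGS[word]
    others = [w for w in EMBEDDINGS if w != word]
    return sorted(others, key=lambda w: cosine_similarity(target, EMBEDDINGS[w]), reverse=True)

print(most_similar("intelligence"))  # ['learning', 'knowledge', 'banana']
```

Because ‘learning’ and ‘knowledge’ were given vectors pointing in nearly the same direction as ‘intelligence’, they rank as close concepts, while ‘banana’ lands far away.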

How Generative AI Works

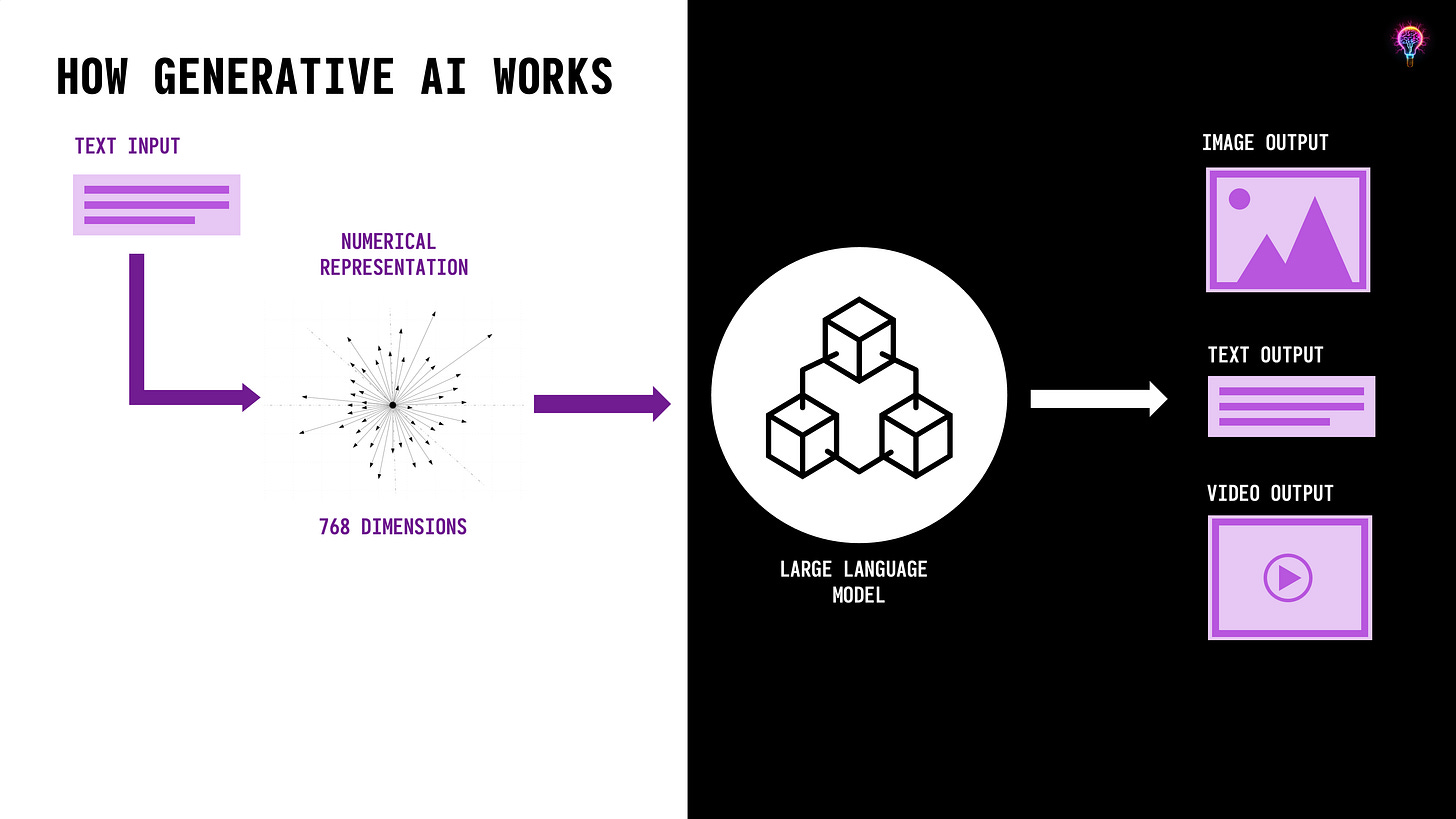

At a very basic level, the diagram above shows how generative AI works. In this example, the model takes a text input and turns it into a numerical representation: for GPT-3, a vector for each token, with up to 12,288 dimensions in the largest model. The generative AI platform then feeds those numerical vectors into the large language model.
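The first step in the diagram, turning text into numbers, can be sketched as a lookup: split the text into tokens, map each token to an ID, then fetch that ID's vector from an embedding table. Everything in this Python sketch is a toy assumption: the vocabulary, the naive whitespace tokeniser, the 8-dimensional vectors and the random starting values.

```python
import random

# Hypothetical toy vocabulary and embedding size; GPT-3's real vocabulary has
# tens of thousands of tokens and its vectors have up to 12,288 dimensions.
VOCAB = ["<unk>", "there", "are", "lots", "of", "reasons"]
TOKEN_IDS = {token: i for i, token in enumerate(VOCAB)}
EMBEDDING_SIZE = 8

# In a real model these numbers start random and are adjusted during training.
rng = random.Random(0)
EMBEDDING_TABLE = [[rng.uniform(-1, 1) for _ in range(EMBEDDING_SIZE)] for _ in VOCAB]

def tokenise(text):
    """Naive whitespace tokeniser; real models split text into sub-word tokens."""
    return text.lower().split()

def encode(text):
    """Turn text into token IDs and the list of vectors that is fed to the model."""
    ids = [TOKEN_IDS.get(token, TOKEN_IDS["<unk>"]) for token in tokenise(text)]
    return ids, [EMBEDDING_TABLE[i] for i in ids]

ids, vectors = encode("There are lots of reasons")
print(ids)                            # [1, 2, 3, 4, 5]
print(len(vectors), len(vectors[0]))  # 5 8
```

Unknown words fall back to a special `<unk>` entry here; sub-word tokenisers largely avoid that problem by breaking unfamiliar words into smaller known pieces.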

The model then outputs text, an image, video or whatever other type of content it has been built to produce, and it does this iteratively. Text models and image models work slightly differently, but the broad principles are the same.

A text-based large language model simply predicts what the next best word (strictly, the next token) should be in a sentence, based on the context of the prompt it has been given. So, in the example above, if you gave the model the word ‘there’, it would predict that the next likely word is ‘are’, the next likely phrase after that is ‘lots of’, and after that ‘reasons’.

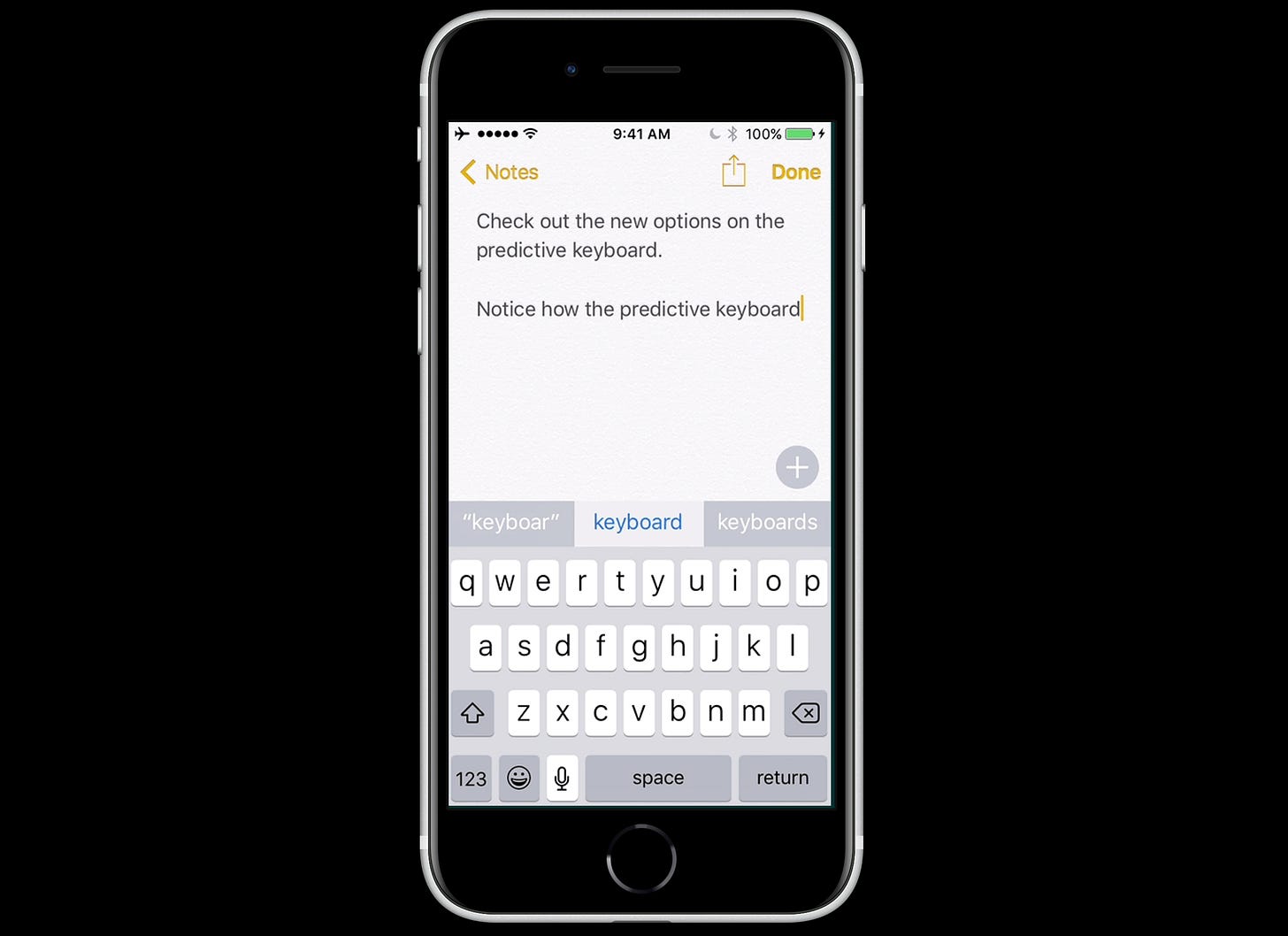

So the model produces the sentence ‘there are lots of reasons’. This is a very basic example, and it’s something we’ve seen a lot of over the past five years or so:

Anyone who has a modern mobile phone, whether it's on iOS or Android, will have seen text prediction on their device. Text prediction is powered by a language model that is trained to predict the next word you're typing in a sentence. So language models have been in our pockets for years now; they just haven't had the sophistication of the large language models that now power generative AI.
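Next-word prediction at its very simplest is exactly the ‘applied statistics’ Ted Chiang describes: count which words tend to follow which, then pick the most frequent. The Python sketch below is a bigram model, a far simpler technique than the transformer-based models behind ChatGPT and not how they work internally, but it shows the same predict, append and repeat loop. The training sentences are made up.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus):
    """Count, for every word, which words follow it and how often."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

def generate(follows, start, max_words=5):
    """Repeatedly append the predicted next word, like tapping a keyboard suggestion."""
    words = [start]
    for _ in range(max_words - 1):
        nxt = predict_next(follows, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)

# Hypothetical tiny training corpus.
corpus = [
    "there are lots of reasons to learn",
    "there are lots of ways to help",
    "there are many options",
    "we have lots of reasons",
]
model = train_bigrams(corpus)
print(generate(model, "there"))  # there are lots of reasons
```

Each prediction here only looks at the previous word. Large language models look at the whole prompt and learn their statistics with billions of parameters rather than simple counts, which is where their far greater sophistication comes from.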

Summary

This brings us to the end of the first article in our Classroom series, where we’ve introduced Artificial Intelligence. We’ve given an overview of the concept and history of AI, outlined the six main branches and delved into what Generative AI is and how it works.

In the next article we’ll cover why Generative AI has become such a hot topic recently, go into more detail on what ChatGPT is and look at why this area of technology is so hyped at the moment.

Further Reading

If you’re interested in diving deeper into some of the topics covered in this article, below are some links to other interesting reads/watches/listens:

“The future is already here, it’s just not evenly distributed.”

William Gibson

This article was researched and written with help from ChatGPT, but was lovingly reviewed, edited and fine-tuned by a human.