A week in Generative AI: Seoul, ScarJo & Hallucinations

News for the week ending 26th May 2024

It’s been another busy week, with the AI Seoul Summit, Microsoft Build 2024, the fallout between Scarlett Johansson and OpenAI, and problems with the new AI summaries in Google’s search results.

In Seoul summit, heads of states and companies commit to AI safety

Just over six months after the inaugural global summit on AI safety at Bletchley Park in the UK, South Korea hosted the second AI safety summit. The two big headlines are the Seoul Declaration and the Frontier AI Safety Commitments.

The Seoul Declaration emphasises the need for greater international collaboration on AI to ensure that it is ‘human-centric, trustworthy, and responsible’. As a result, a network of AI Safety Institutes (similar to the UK’s) will be established to accelerate the advancement of AI safety science. The declaration was signed by Australia, Canada, the EU, France, Germany, Italy, Japan, Singapore, South Korea, the U.K., and the U.S. It’s a real shame to see China missing from this list.

The Frontier AI Safety Commitments see many AI companies commit to ‘not develop or deploy a model or system at all if mitigations cannot keep risks below the thresholds.’ These thresholds will be defined with input from home governments, align with relevant international agreements, and be accompanied by an explanation of how they were decided upon.

These commitments were agreed to by all of the big frontier AI companies, including Amazon, Anthropic, Google, Meta, Microsoft, Mistral, OpenAI, xAI and Zhipu.ai (a Chinese company backed by Alibaba and Tencent). Apple are a notable omission from these commitments, but that might be because they are working on a deal with OpenAI and are likely keeping their work under wraps until WWDC on 10th June.

Introducing Copilot+ PCs

Not to be outdone by Google I/O and OpenAI’s Spring Event last week, Microsoft hosted their Build 2024 conference this week, where they introduced a huge number of new AI-related products and features. Here’s a good rundown of everything they announced.

Two of the biggest announcements were Microsoft’s Copilot+ PCs and a new feature called Recall.

Copilot+ PCs are essentially new Windows PCs with powerful enough hardware, including a dedicated neural processing unit (NPU), to take better advantage of AI models both locally on the machine and in the cloud. Some of the headline features this enables are Recall (see below), image creation and editing, advanced video effects, smart annotations of documents, and live captioning.

As you can see in this video, Recall aims to give your Copilot+ PC a photographic memory by taking regular ‘snapshots’ of what you’re doing. These snapshots are used to create a ‘personal semantic index’ that draws unique associations between everything you do, helping you find what you’re looking for quickly and intuitively. As you can imagine, this sounds like a potential privacy nightmare, and it has already attracted the attention of the UK’s Information Commissioner’s Office (ICO).

To be fair to Microsoft, this does sound like a genuinely interesting and useful feature. They say that no data related to your ‘personal semantic index’ leaves your device, and they give users fine-grained controls to delete snapshots. I think this is a good example of the kind of new AI feature we’re going to see in the coming months: one that gives us pause, and that users (and regulators!) may take time to feel comfortable with.
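This is not a claim about how Recall actually works. As a generic illustration of what a ‘semantic index’ over snapshots means, here is a toy sketch.

- Each snapshot’s text is turned into a vector.
- A search returns the snapshots whose vectors are closest to the query, measured by cosine similarity.

The snapshot text, the `SemanticIndex` class and the word-count ‘embedding’ are all hypothetical stand-ins. A real system would use a learned embedding model.

```python
import math
import re
from collections import Counter

# Hypothetical snapshot text keyed by capture time (a stand-in for text extracted from screenshots).
SNAPSHOTS = {
    "09:14": "Reading a recipe for vegan lasagne with spinach and ricotta",
    "11:02": "Editing quarterly sales spreadsheet for the finance team",
    "15:47": "Browsing flights to Seoul for the AI safety summit",
}


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector, standing in for a learned embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticIndex:
    """Hypothetical in-memory index: stores one vector per snapshot and ranks them against a query."""

    def __init__(self) -> None:
        self._entries: list[tuple[str, Counter]] = []

    def add(self, timestamp: str, text: str) -> None:
        self._entries.append((timestamp, embed(text)))

    def search(self, query: str, k: int = 1) -> list[str]:
        """Return the timestamps of the k snapshots most similar to the query."""
        q = embed(query)
        ranked = sorted(self._entries, key=lambda entry: cosine(q, entry[1]), reverse=True)
        return [timestamp for timestamp, _ in ranked[:k]]


if __name__ == "__main__":
    index = SemanticIndex()
    for ts, text in SNAPSHOTS.items():
        index.add(ts, text)
    print(index.search("that lasagne recipe"))  # ['09:14']
```

Word counts only match exact words. A learned embedding would also let a query like ‘pasta’ find the lasagne snapshot. Keeping everything in a local, in-memory structure mirrors Microsoft’s point that the index stays on the device.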

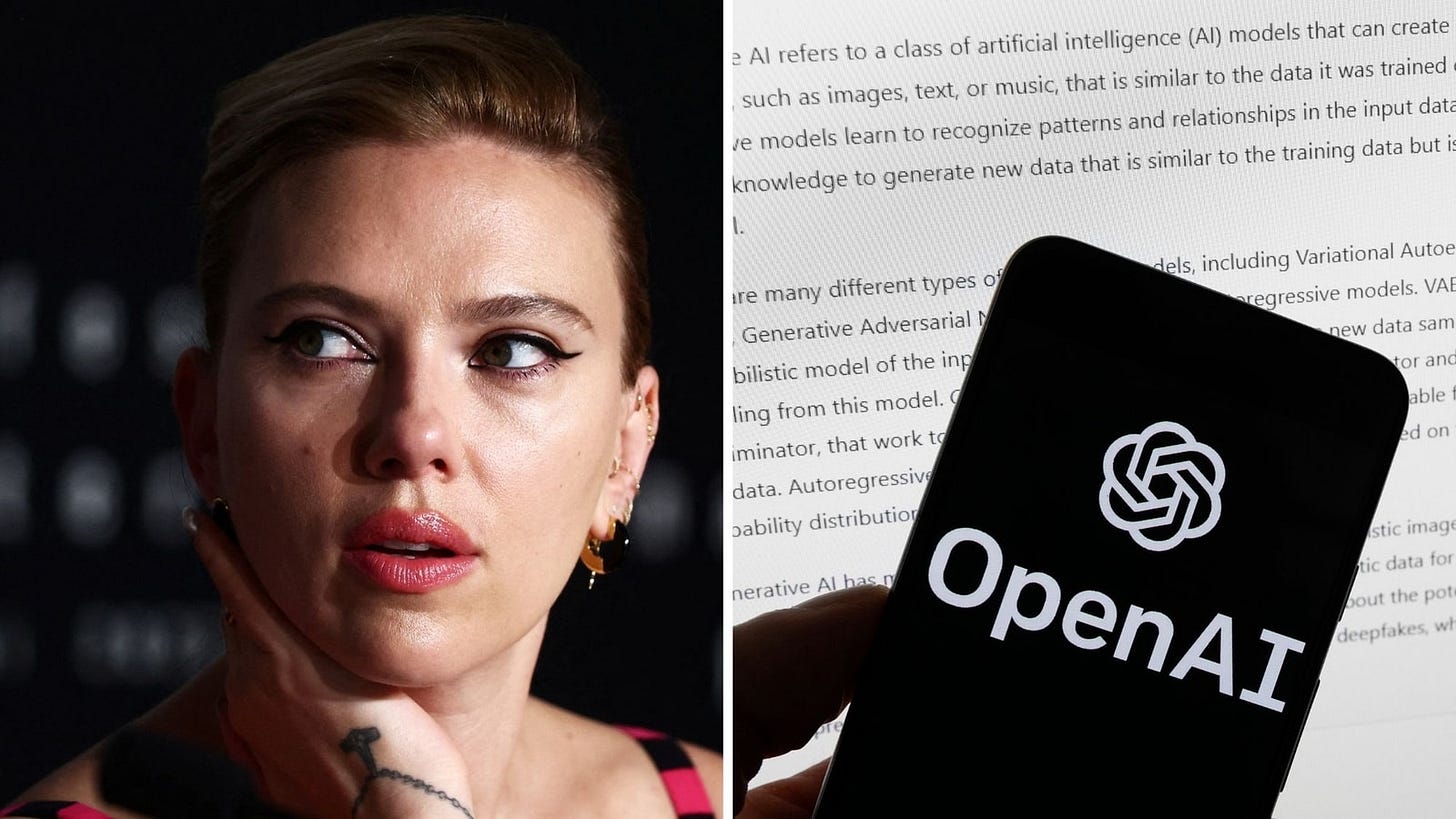

Scarlett Johansson vs. OpenAI

There’s a lot to say about the reaction to OpenAI’s spring event and ‘Sky’, the voice used throughout their demos, which sounds unnervingly like Scarlett Johansson.

Firstly, lawyers for Scarlett Johansson demanded that OpenAI disclose how it developed the ‘Sky’ voice. This followed the actor releasing a statement saying that Sam Altman had asked her to voice ChatGPT, but that she had declined the offer.

Following the statement, OpenAI have suspended the use of the ‘Sky’ voice and shared documents with The Washington Post that show that they hired a different actress to provide the voice.

What makes this tricky is that, although OpenAI hired a different actress, ‘Sky’ was clearly inspired by the film ‘Her’, in which Scarlett Johansson voices an AI assistant, and which Sam Altman has repeatedly said is his favourite film. Nor did it help that Sam Altman tweeted the single word ‘her’ on the day of the OpenAI spring event.

Sam Altman and OpenAI were evidently trying to tap into people’s connection with ‘Her’ and Scarlett Johansson’s performance in it. According to her statement, Altman told her he felt her voice would be ‘comforting to people’ who are uneasy with AI technology.

This is a genuinely difficult situation to navigate. On one hand, the actress who provided the voice for ‘Sky’ was fairly remunerated and ‘owns’ her own voice. On the other hand, she was seemingly chosen because her voice so closely resembled that of Scarlett Johansson, who had already turned down the work. There’s a good write-up of this dilemma over at Emergent Behaviour. It’s another example of how GenAI is challenging the way we look at things, and it highlights how many issues we still have to work through as the technology becomes more capable and more widely adopted.

Google Search Is Now a Giant Hallucination

Following Google I/O last week, Google have rolled out AI Overviews in search results across the US, and the results are not good. There are widespread reports of hallucinations, which undermine people’s trust in Google’s search results. We’ve seen this before, when Google surfaced AI-generated content from Quora claiming that it was possible to melt an egg.

Some of the highlights:

Parachutes are no more effective than backpacks at preventing death when jumping from an aircraft.

According to The Journal of American Psychology, people spend 80% of their waking hours plotting revenge.

Cats may prefer to travel through a dimension that moves faster than our own.

According to UC Berkeley geologists, you should eat at least one small rock a day.

This reinforces my view from last week that Google is rushing out new features to keep up with the competition, and that they need to do more work to turn their AI efforts into genuinely useful consumer products.

New Anthropic Research Sheds Light on AI's 'Black Box'

This is some great work by the Anthropic team, showing progress towards understanding what’s actually going on inside large language models. Because neural networks are loosely inspired by the structure of the human brain, I’m hopeful that progress in this field might also help us understand how the brain itself works.

In the research paper, Anthropic showed how they were able to identify features in their models that represent different ideas or concepts, such as ‘The Golden Gate Bridge’ and ‘Brain sciences’. They were also able to dial these features up or down to see how this influenced the model’s outputs. That ability could prove very important for controlling bias and safeguarding the outputs of large language models.
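For anyone curious about the mechanics: the paper (‘Scaling Monosemanticity’, which studied Claude 3 Sonnet) used dictionary learning with sparse autoencoders. A sparse autoencoder decomposes a model’s internal activations into a weighted sum of ‘feature’ directions. Dialling a feature up amounts to clamping its activation to a chosen value and rebuilding the activation vector.

The toy sketch below illustrates that idea. It is not Anthropic’s code, and the following are all invented for illustration:

- the three-dimensional activations;
- the hand-set weights;
- the feature names;
- the tied encoder/decoder simplification.

```python
from typing import List

Vector = List[float]


def relu(v: float) -> float:
    return max(0.0, v)


class ToySparseAutoencoder:
    """Hypothetical, hand-set dictionary: each feature owns one direction in activation space.

    A real sparse autoencoder is trained on huge numbers of model activations, with a
    sparsity penalty so that only a few features are active for any given input.
    """

    def __init__(self, feature_names: List[str], decoder: List[Vector]) -> None:
        self.feature_names = feature_names
        self.decoder = decoder  # one direction (row) per feature
        self.encoder = decoder  # tied weights: a simplifying assumption for this sketch
        self.dim = len(decoder[0])

    def encode(self, x: Vector) -> Vector:
        # Feature activation = ReLU(dot(encoder row, x)); biases omitted for brevity.
        return [relu(sum(w * xi for w, xi in zip(row, x))) for row in self.encoder]

    def decode(self, f: Vector) -> Vector:
        # Reconstruction = feature directions weighted by their activations.
        out = [0.0] * self.dim
        for act, row in zip(f, self.decoder):
            for j in range(self.dim):
                out[j] += act * row[j]
        return out

    def steer(self, x: Vector, feature: str, value: float) -> Vector:
        """Clamp one feature to `value` and rebuild x, keeping the part the dictionary can't explain."""
        f = self.encode(x)
        error = [xi - ri for xi, ri in zip(x, self.decode(f))]
        f[self.feature_names.index(feature)] = value
        return [ri + ei for ri, ei in zip(self.decode(f), error)]


if __name__ == "__main__":
    sae = ToySparseAutoencoder(
        ["golden_gate_bridge", "brain_sciences"],  # hypothetical feature labels
        [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]],
    )
    x = [0.2, 0.9, 0.1]  # a made-up activation vector
    print(sae.encode(x))                              # [0.2, 0.9]
    print(sae.steer(x, "golden_gate_bridge", 5.0))    # [5.0, 0.9, 0.1]
```

The reconstruction error is added back in `steer`. As a result, only the clamped feature changes, and anything the dictionary fails to capture is left alone.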

AI Ethics News

International Scientific Report on the Safety of Advanced AI

Microsoft’s AI obsession is jeopardizing its climate ambitions

OpenAI Releases Former Staffers From Non-Disparagement Clauses

EU threatens Microsoft with billion-dollar fine over generative AI misinformation in Bing

Productivity soars in sectors of global economy most exposed to AI, says report

Long Reads

Stratechery - Windows Returns

Wired - It’s Time to Believe the AI Hype

Wired - AI Is a Black Box: Anthropic Figured Out a Way to Look Inside

“The future is already here, it’s just not evenly distributed.”

William Gibson