Big week! Mostly due to OpenAI releasing 5 frontier models (the GPT-4.1 family, o3, and o4-mini) in the space of a few days, but we also shouldn’t let that overshadow Google DeepMind’s release of Gemini-2.5 Flash, which I think is a huge milestone. There’s also lots of other news from OpenAI in this week’s newsletter as well as coverage of Anthropic’s new Research product and Hugging Face’s acquisition of Pollen Robotics.

The Ethics News this week is headlined by another viral ChatGPT trend. Following the Studio Ghibli trend a month ago, people are now using ChatGPT, very successfully, to do reverse location searches from images, opening up a whole world of new privacy concerns.

There’s also a great Long Read from The Verge about how screenshots could be the key to great AI assistants.

Enjoy!

OpenAI has a big week

It’s been a big week for OpenAI, who launched 5 new models and dropped a host of other news. I’ll cover the model releases below, but here are a few other tidbits from OpenAI this week:

Codex CLI

Codex CLI is OpenAI’s answer to Anthropic’s Claude Code and a host of other coding agents that have really taken off in the last 12 months. I absolutely love Claude Code and it’s great to see OpenAI get in on this game. One of the biggest benefits of OpenAI’s approach is that you can choose which model to use, which gives the user much better control over costs. Claude Code can get very expensive as you can only use Claude 3.7 Sonnet with it!

Hallucinations

There was an interesting report that OpenAI’s new reasoning models hallucinate more than their predecessors, according to the company’s own internal testing. Newer models are supposed to be better at dealing with this issue, as there was hope that more intelligence = fewer hallucinations. Turns out grounding in search results is currently the best way to reduce hallucinations, which suggests Google will have an advantage here.

Other news

OpenAI launches Flex processing for cheaper, slower AI tasks

OpenAI plans to phase out GPT-4.5, its largest-ever AI model, from its API

ChatGPT will now use its ‘memory’ to personalise web searches

To bookend the week, Sam Altman then posted the below:

It’s an interesting analogy and comparison. The Industrial Revolution was defined by a shift in productivity, but the Renaissance was about rediscovery, creativity, and human potential. I really like this framing and its optimistic tone and it’s absolutely something I think we should be aiming for.

If we let AI take us down the route of the Industrial Revolution we’ll be trying to automate everything and there will be huge economic disruption. If we’re able to steer AI down the route of the Renaissance we’ll be using AI as a catalyst for a new era of art, science, and thought. Much more exciting and hopeful!

GPT-4.1

GPT-4.1 is an interesting release from OpenAI - it’s the first time they’ve released a frontier model in the API only and it’s the first time they’ve released a ‘family’ of models, which is something all the other frontier AI companies have been doing for a year or so now.

It’s also an interesting name, and suggests that it is a distilled (smaller) version of GPT-4.5, which OpenAI says they are already planning to retire in July, after just 5 months, as it’s been such a large, expensive (and unpopular) model for people to use.

So, GPT-4.1 is for developers and is meant to replace GPT-4o in the API. The mini version is a big improvement on GPT-4o mini across lots of different domains. It’s better at coding, instruction following, and image understanding, and has a larger context window of 1m tokens.

You can find Simon Willison’s thoughts on all three models here, which is always a good read.

o3 & o4-mini

The o-series models from OpenAI are Large Reasoning Models that are trained to think for longer before responding. OpenAI pioneered this approach with the release of o1 late last year, and o3 and o4-mini are the latest and greatest models in the series.

Large Reasoning Models are important because they’re the models that will power AI agents that plan, execute, and complete tasks on our behalf in the future. OpenAI claims that for the first time these two models can use a combination of different tools (like web searching, analysing data and content, and using APIs) to tackle multifaceted questions effectively. This is a big step towards models that can independently execute tasks on behalf of their user. We need Large Reasoning Models to be able to do this as it’s not enough for AI models to just be able to use tools, they need to be able to reason about when and how to use them too.

o3 and o4-mini can also integrate images directly into their chain of thought for the first time. They don’t just analyse images, they can think with images too, which unlocks a new class of problem-solving that blends visual and textual reasoning.

It’s too early to say what these models are like to use, and they’re currently only available to Pro customers, but it’s great to see the progress being made and the new features that are being developed. A lot of progress is being made at pace!

Google DeepMind release Gemini-2.5 Flash

The announcement of Gemini-2.5 Flash flew under the radar a bit this week, partly because Google didn’t make a huge deal of it, and partly because there was so much noise from OpenAI!

I think this is a big, significant release though - Gemini-2.5 Pro is the undisputed leading frontier model right now and the Flash version brings a lot of that capability to people at a fraction of the price. As you might be able to see from the chart above (if you squint!) - Gemini-2.5 Flash delivers a similar LMArena score of just under 1400 to OpenAI’s leading GPT-4.5 model at 99.8% lower cost! You read that right, and it’s not a typo - using Gemini-2.5 Flash costs $0.15 per 1m tokens vs. GPT-4.5’s cost of $75 per 1m tokens.
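If you want to check the maths on those two prices, here’s a quick Python sketch. It assumes the two quoted figures are directly comparable (both per 1m tokens, on the same basis), and the function and variable names are just mine for illustration.

```python
# Prices as quoted in this newsletter, in US dollars per 1m tokens.
# Assumption: both figures are measured on the same basis.
GEMINI_25_FLASH_PRICE = 0.15
GPT_45_PRICE = 75.00


def percent_cheaper(cheap_price: float, expensive_price: float) -> float:
    """Return how much cheaper cheap_price is than expensive_price, in percent."""
    return (1 - cheap_price / expensive_price) * 100


saving = percent_cheaper(GEMINI_25_FLASH_PRICE, GPT_45_PRICE)
ratio = GPT_45_PRICE / GEMINI_25_FLASH_PRICE

print(f"{saving:.1f}% cheaper, or roughly {ratio:.0f}x less per token")
```

On those figures, Flash works out at about 0.2% of GPT-4.5’s price, which is where the 99.8% saving comes from.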

I think this goes a long way towards explaining why OpenAI are retiring GPT-4.5 so soon, but let’s not take anything away from Google DeepMind - this is an outstanding achievement and represents a new frontier for cost-effective intelligence. This is also Google DeepMind’s first Large Hybrid Model, which combines the capabilities of a Large Language Model with those of a Large Reasoning Model - essentially this means it’s great at lots of things and you can control the ‘thinking’ time the model has for more complex tasks. This is similar to how Claude-3.7 Sonnet works and I’m sure this will be a feature of GPT-5 when it releases in a few months.

Anthropic release Research and new Google integrations

Off the back of adding search capabilities to Claude a few weeks ago, Anthropic have now joined the ‘Deep Research’ bandwagon with the release of their Research product.

In true Anthropic style, they’ve done it their own way and Research is more squarely aimed at enterprise customers as it can use data from Google Workspace (emails, calendar events, documents etc.) as well as data from web searches when researching.

Research is currently only available to those on Anthropic’s new $100 per month Max subscription tier as well as Team and Enterprise customers in the US, Japan, and Brazil, so I haven’t been able to test it myself yet. It will be coming to Pro customers soon, and I’m looking forward to taking it for a test drive!

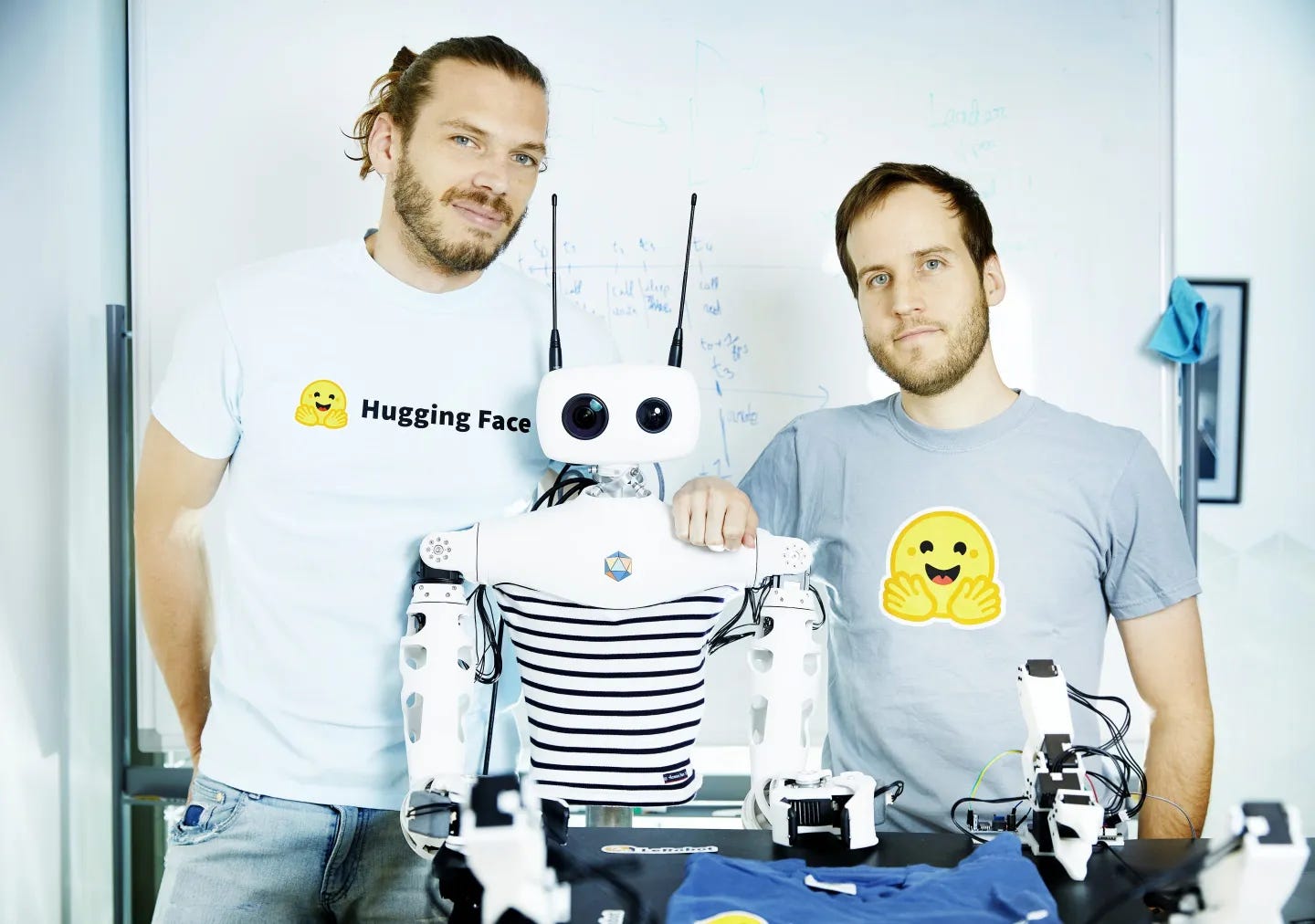

AI company Hugging Face buys humanoid robot company Pollen Robotics

This is a great investment - Hugging Face have been huge for the open-source AI community and if they can bring this approach and success to robotics we have a very bright future ahead of us.

Robotics will be the next frontier that AI will unlock, and new AI “world models” that have an understanding of the physical world, not just the digital world, are contributing to some rapid progress in robotics right now. If Hugging Face can offer an open-source alternative to the work Nvidia are pioneering then we’re only going to see that progress accelerate.

AI Ethics News

The latest viral ChatGPT trend is doing ‘reverse location search’ from photos

Access to future AI models in OpenAI’s API may require a verified ID

Microsoft researchers say they’ve developed a hyper-efficient AI model that can run on CPUs

Google’s latest AI model report lacks key safety details, experts say

OpenAI partner says it had relatively little time to test the company’s o3 AI model

Long Reads

The Verge - The humble screenshot might be the key to great AI assistants

Ars Technica - History of the Internet, Part 1

“The future is already here, it’s just not evenly distributed.”

William Gibson