A week in Generative AI: GPT-5, Cultural Alignment & Genie 3

News for the week ending 10th August 2025

When I wrote last week that it felt like the quiet before the storm, I didn’t realise the storm would come so quickly, or that so much would drop in one week!

The big news has obviously been the release of GPT-5 by OpenAI, but also the release of their first open models since 2019. Google DeepMind also released Genie 3 - the next generation of their ‘world models’ - and there was some really interesting research on how AI models align with the World Values Survey’s cultural map.

There are some great Web 4.0 updates I’m sharing below - OpenAI confirmed they now have 700m weekly users, Google denied its AI search features are killing website traffic, and Pinterest’s CEO said that agentic shopping is still a long way out.

Lots in Ethics News this week too. I obviously had to include an article on the ‘Star Wars’ Slur That Has Been Mainstreamed by Anti-AI Discourse. There are also articles on how ChatGPT will ‘better detect’ mental distress, and how Wikipedia is fighting AI slop.

I also highly recommend Stratechery’s article in Long Reads about Paradigm Shifts and the Winner’s Curse. It’s great commentary on the paradigm shift we’re currently seeing with AI and how the winners of the smartphone era might be missing what it really means for their businesses.

OpenAI’s GPT-5 is here

So… GPT-5 is here. It’s the first major generational update to OpenAI’s frontier models since they launched GPT-4 back in March 2023, which was amazingly less than 6 months after the launch of ChatGPT itself.

So this is a big deal, but it’s also not the generational leap we saw from GPT-3 to GPT-4. I don’t think it’s any less significant though, just different, and in more subtle ways.

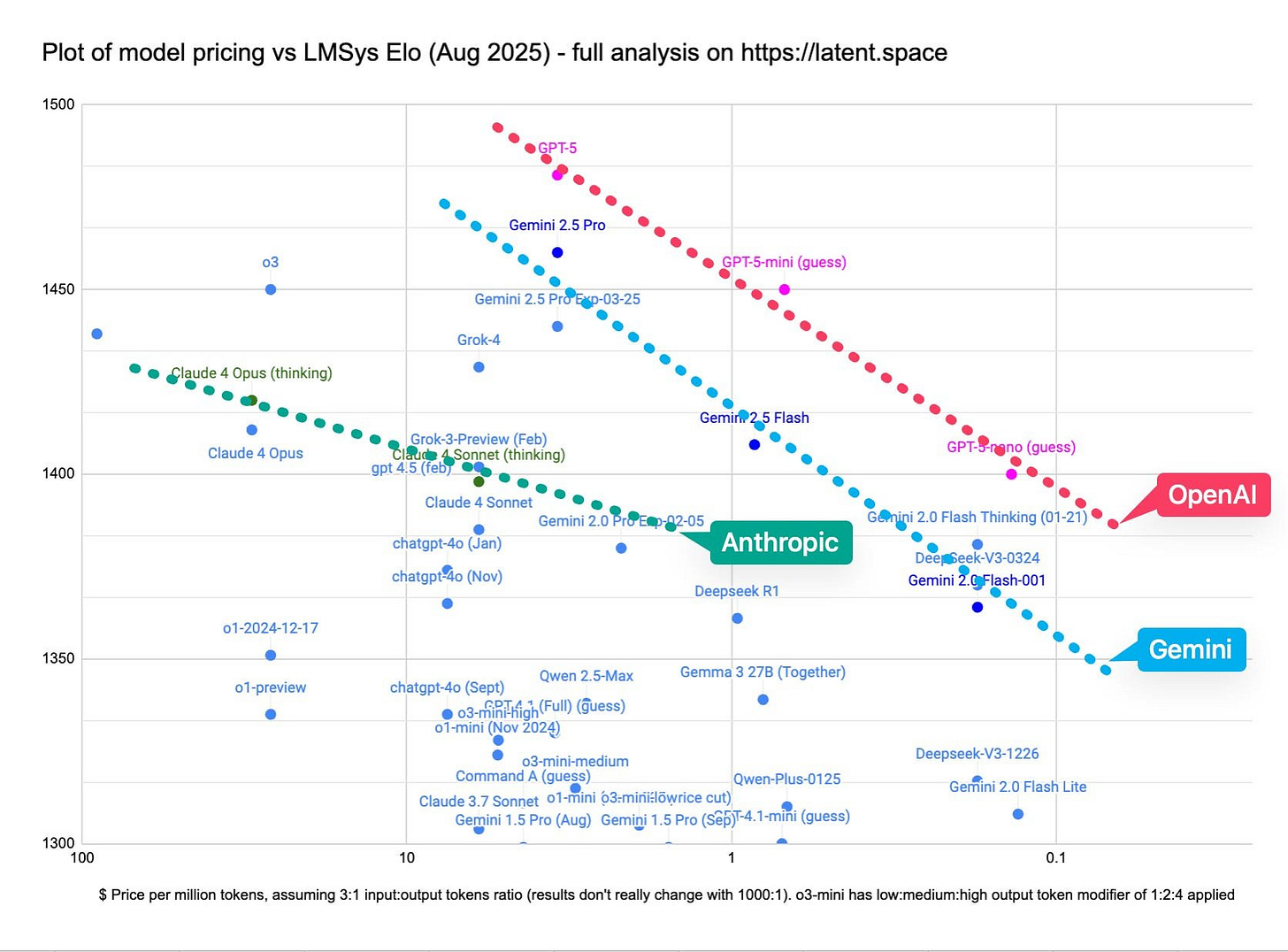

Let’s get the easy stuff out of the way first. GPT-5 isn’t game changing in terms of broad capabilities and the usual benchmarks we’ve become accustomed to judging models against (this is an issue with the benchmarks, not the models). It’s also not a very good writer, and many people prefer GPT-4o’s writing abilities to GPT-5’s (I expect this will be refined and fixed over the coming weeks). Then there’s price - OpenAI have been very aggressive with their pricing strategy and GPT-5’s family of models (it comes in standard, mini, and nano versions) represents a new cost/intelligence Pareto frontier.

GPT-5 continues the trend of new models being better and cheaper for most tasks. So why is GPT-5 a big deal? There are three big reasons:

Real-Time Router

With GPT-5, OpenAI have removed the need for users to choose which model they want to use. Choosing a model has become a big problem over the last 6 months with the introduction of Large Reasoning Models, and with the sheer volume and variety of models that have been available to users on ChatGPT.

GPT-5’s new real-time router addresses this and whilst it can be imperfect at times, it will improve with time (interestingly, according to the GPT-5 system card it’s ‘continuously trained’). This takes away a level of confusion and cognitive load that had built up recently, and whilst it looks quite simple on the surface, it is actually a big and important step forwards.

GPT-5’s router now dynamically routes your query to not just the right type of model, but the right size of model as well. This means that you get much quicker, cheaper, and less environmentally impactful answers to simple questions whilst also being able to benefit from longer thinking and more processing for more complex tasks. I’ve already found this incredibly useful as I’m constantly switching between how I use ChatGPT - sometimes it’s a simple search replacement, sometimes to help me research, sometimes to help me plan and think through problems. Not having to think about which model to use and not having to wait too long for simple answers is so much better!
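To make the idea of routing on both type and size concrete, here is a minimal sketch in Python. It is purely illustrative: the tier labels, the keyword list, and the `route` function are my own assumptions, and the real router is a trained model rather than a handful of rules like these.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    """Hypothetical routing decision; the labels are illustrative, not OpenAI model names."""
    size: str        # "small" or "large"
    reasoning: bool  # whether to spend extra 'thinking' time


# Toy signals only. The real router is a trained model, not a keyword list.
REASONING_HINTS = {"plan", "prove", "debug", "compare", "why"}


def route(query: str) -> Route:
    """Pick a model size and type from crude features of the query."""
    words = [w.strip(".,?!") for w in query.lower().split()]
    if any(w in REASONING_HINTS for w in words):
        # Looks like a multi-step problem: use the large, reasoning-style model.
        return Route(size="large", reasoning=True)
    if len(words) > 30:
        # Long but not obviously hard: large model, no extended thinking.
        return Route(size="large", reasoning=False)
    # Short, simple question: the quickest and cheapest option.
    return Route(size="small", reasoning=False)


print(route("Capital of France?"))
print(route("Help me plan a three week product launch"))
```

The point of the sketch is the shape of the decision: a quick question never pays the cost of a long 'thinking' step, and a harder one is sent there without the user having to choose.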

Intelligence

There is no argument that all modern frontier models are intelligent. Many of the benchmarks we currently have attempt to measure the amount of intelligence each model has, but something that has been missing, and is arguably more important in real world use cases, is how that intelligence is used by the models.

Let me illustrate this with a simple search example. When ChatGPT Search launched waaaay back in October 2024 its usage pattern was as follows: User query → Search → Respond. Intelligence is only being used here very simply - to take the user’s query and to turn it into one or more search queries before then summarising the results.

The next generation of ChatGPT Search was Deep Research, which launched in February this year. Its usage pattern was as follows: User query → Think → Search → Respond. It added an element of intelligence (or reasoning) to the search it was doing to make it much more useful for the end user. Intelligence is being used here to iterate on the search queries, to dig deeper and look at related topics before then summarising the results. It could answer much more complex queries and also ‘think’ for much longer to get to the best answer.

GPT-5 moves how intelligence is used to its logical conclusion and its usage pattern is as follows: User query → Think → Tool → Think → Tool → … → Respond. It adds a level of intelligence both before and after the use of a tool like search - the thinking doesn’t just happen before or after, but in-between as well. This is a big deal as it allows the model to not just tackle more complex queries and tasks, but to genuinely reason over the inputs and outputs of each tool that it uses. The result is that GPT-5 can, as Ethan Mollick puts it, ‘Just Do Stuff’. GPT-5 wants to do lots of different things for you, whether that’s research, coding (as an aside, it’s probably now the most capable coding model, taking Claude’s crown), or creating documents. And it’s very capable at doing all of these things. In one shot. Not because it’s more intelligent than other models, but because of how it’s using its intelligence.

With this new pattern of how GPT-5 uses its intelligence now in place, I think it’s just a matter of time to build out the tools before it can become a universally capable and sophisticated agent. The barrier now is not the intelligence, but the tools the model has access to.
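The Think → Tool → Think → Tool → … → Respond pattern described above can be sketched as a simple loop. This is a toy under stated assumptions, not how GPT-5 is implemented: `think`, `search`, `run`, and the `TOOLS` registry are hypothetical names, and the 'thinking' is a hard-coded stub standing in for the model.

```python
from typing import Callable


def search(query: str) -> str:
    """Hypothetical tool: a stand-in for web search, code execution, and so on."""
    return f"results for '{query}'"


# Hypothetical tool registry. Adding capabilities means adding entries here.
TOOLS: dict[str, Callable[[str], str]] = {"search": search}


def think(query: str, observations: list[str]) -> tuple[str, str]:
    """Stub for the model's reasoning step.

    Returns ("tool", tool_input) to keep working, or ("respond", answer) to stop.
    A real model would decide this itself; the stub searches twice, then answers.
    """
    if len(observations) == 0:
        return "tool", query
    if len(observations) == 1:
        return "tool", f"{query} (follow-up)"
    return "respond", f"Answer based on {len(observations)} tool results"


def run(query: str, max_steps: int = 5) -> tuple[str, list[str]]:
    """Alternate thinking and tool use until the model decides to respond."""
    observations: list[str] = []
    trace: list[str] = []
    for _ in range(max_steps):
        action, payload = think(query, observations)
        trace.append("think")
        if action == "respond":
            trace.append("respond")
            return payload, trace
        # Reason over the previous output, then call the tool again.
        observations.append(TOOLS["search"](payload))
        trace.append("tool")
    trace.append("respond")
    return "Stopped: step budget reached", trace


answer, trace = run("GPT-5 pricing")
print(" -> ".join(trace))
print(answer)
```

The loop itself stays the same however many tools are registered, which is why the barrier becomes the tools rather than the intelligence.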

Being Humble

This one is simple, but very important, especially for regular day-to-day users. GPT-5 is now much better at saying ‘I don’t know’. There’s a great article from Gizmodo about this - The End of Bullshit AI. It seems simple, but I think this is much more important than many people think.

For those of us who have been heavy users of generative AI models for the last couple of years, we’ve intuitively learned how to prompt, and the types of questions to ask to avoid hallucinations. However, we’re the early adopters and the vast majority of people either haven’t tried generative AI yet, or have just used it once or twice. Having a model that is more humble, knows when it doesn’t know something, and is honest about that will make using this technology much more user friendly for the majority of users, which can only be a good thing.

So as I said at the start, GPT-5 is a big deal but in much subtler ways than the last generational change we saw from GPT-3 to GPT-4. GPT-5 introduces some very powerful new features which will form a very strong foundation for the next few years of generative AI development.

If you’re interested in reading more about the above and seeing some of the wider commentary about GPT-5’s launch, I highly recommend the articles below:

Latent Space - GPT-5 Hands On

One Useful Thing - GPT-5: It Just Does Stuff

Simon Willison - GPT-5: Key characteristics, pricing and model card

Latent Space - GPT-5’s Router

The Guardian - OpenAI will not disclose GPT-5’s energy use

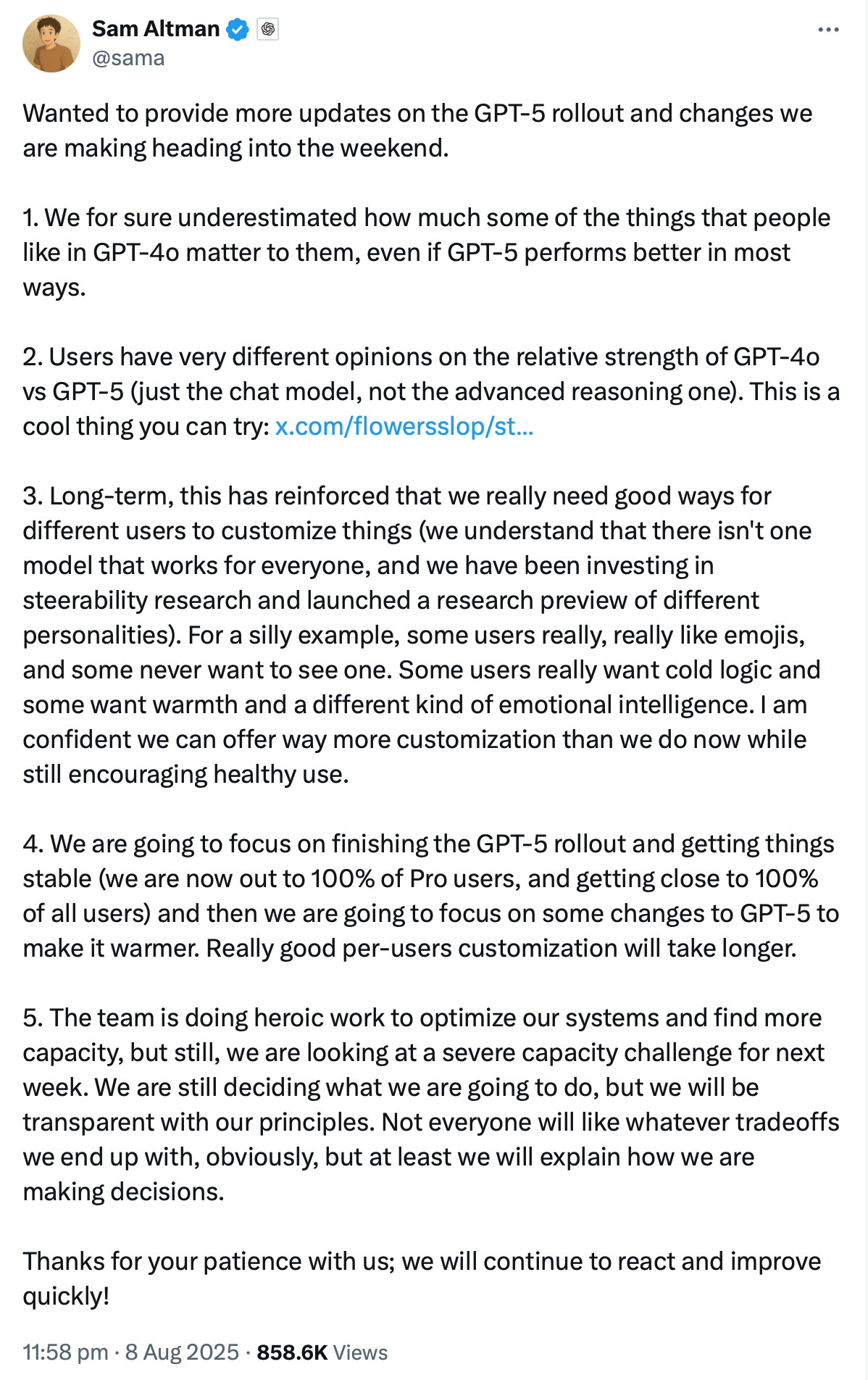

We also had an update from Sam Altman following the launch of GPT-5 and some of the community’s reaction and feedback. It’s great to see that personalisation and control of this is high on their to-do list. I think the next big capability beyond this that we’ll see more work on is the ability for these models to continuously learn (we’re seeing a glimpse of this with GPT-5’s real-time router). This will be the next big research breakthrough and when it comes will take this technology within touching distance of being Artificial General Intelligence.

Cultural Alignment of AI Models

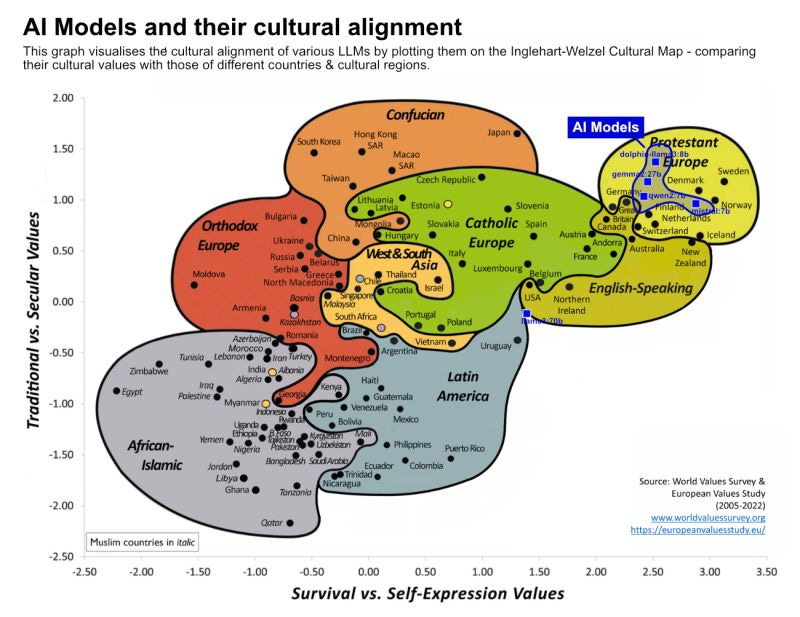

There is a great post from Tey Bannerman on LinkedIn about this recent research that uses the World Values Survey's cultural map to test where some major AI models sit.

There are lots of challenges with this type of analysis, but it is unsurprising to see that the models tested sit up in the Protestant Europe corner of the map. Whether you agree with how the cultural map works, or how the analysis of the AI models was done, I feel like understanding the cultural alignment of AI models is important. There’s also an unanswered question of what we want as a society - do we want AI models that represent all cultures, or do we want an AI model that represents the culture that we’re a part of? I can see pros and cons to both.

It will be great to see more research and thinking about this side of AI in the future as I think it’s an important area that needs better understanding and more debate.

DeepMind thinks its new Genie 3 world model presents a stepping stone toward AGI

Genie 3 is an example of a ‘world model’ - a generative AI model that can output an interactive 3D environment. Genie 2 could ‘generate worlds’ for 10-20 seconds. Genie 3 can generate for multiple minutes at 720p resolution and 24 frames per second.

One of the key features of Genie 3 over Genie 2 is that its simulations remain physically consistent as it remembers what has been previously generated. Genie 3 is just in research preview at the moment, and doesn’t really have any practical real-world applications yet, but it’s interesting to think about where this kind of technology could take us.

The immediate use case is training AI models that will operate in a robotic body in the real world. Another interesting use case is endlessly generated virtual worlds at high enough resolution and frame rate that they’re virtually indistinguishable from the real world… 🤯. That’s not coming anytime soon, but is where this technology will head.

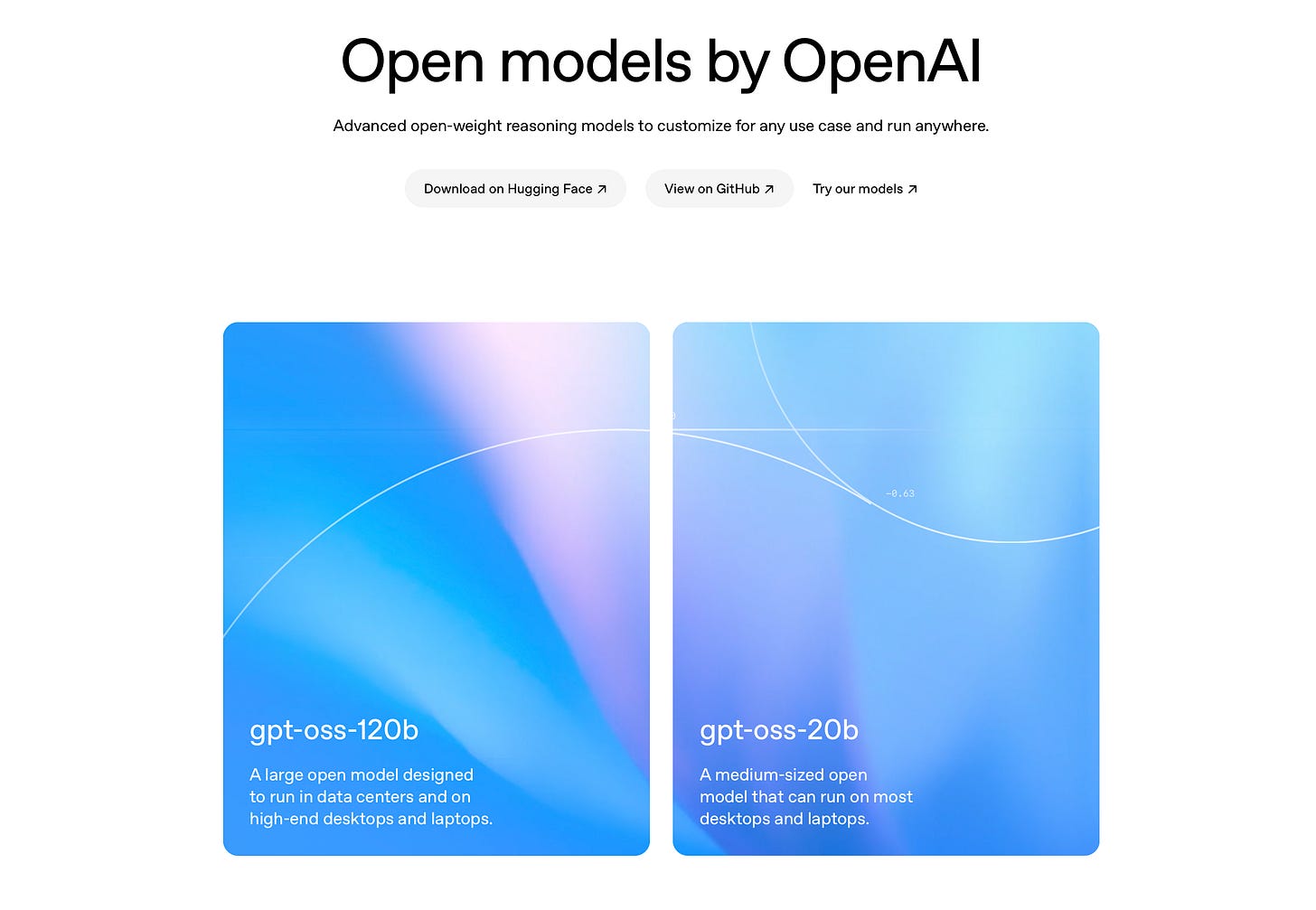

OpenAI releases a free GPT model that can run on your laptop

In any other week, this would have been top news. OpenAI haven’t released an open source model since GPT-2 back in 2019 and the two new models they released this week are state-of-the-art.

They currently come in two sizes, and I wouldn’t be surprised if we soon saw a couple of other sizes released as well. The idea with open source and open weights models is two-fold - they’re free and available for anyone to use and to develop on, and the smaller versions can run locally on devices (laptops, or phones for the much smaller models). This opens up lots of opportunities for new use cases beyond the large, closed models that run on ginormous cloud infrastructure.

The two models OpenAI released this week have been widely praised as incredibly powerful and as pushing forward the frontier of what’s possible with smaller, open source models. There’s a good write-up on them by Simon Willison, who says they’re ‘very impressive’. The larger version achieves parity with their cutting-edge o4-mini model, with the smaller model achieving parity with o3-mini. Bear in mind that o3-mini was only released in January, with o4-mini released in April. This means that we now have an open source model that is only lagging the closed frontier models by 4 months. That’s incredible!

If you’re curious about where this is heading, Sam Altman had a good tweet that sums it up nicely:

Web 4.0

Google denies AI search features are killing website traffic

First impressions of Alexa+, Amazon’s upgraded, AI-powered digital assistant

AI Ethics News

The ‘Star Wars’ Slur That Has Been Mainstreamed by Anti-AI Discourse

Perplexity accused of scraping websites that explicitly blocked AI scraping

Persona vectors: Monitoring and controlling character traits in language models

Disney’s Attempts to Experiment With Generative AI Have Already Hit Major Hurdles

ChatGPT will ‘better detect’ mental distress after reports of it feeding people’s delusions

James Cameron warns of ‘Terminator-style apocalypse’ if AI weaponised

Staff at UK’s top AI institute complain to watchdog about its internal culture

Long Reads

Stratechery - Paradigm Shifts and the Winner’s Curse

The Guardian - Demis Hassabis on our AI future

“The future is already here, it’s just not evenly distributed.”

William Gibson