A week in Generative AI: Gemini 2.0, Deep Research & Robots

News for the week ending 9th February 2025

This week we’ve seen a huge amount of news, most of it driven by the continuing fallout from the release of DeepSeek’s models a couple of weeks ago. There was the release of the whole family of Google DeepMind’s Gemini 2.0 models, as well as the release of OpenAI’s Deep Research model. Both will have big implications going forwards.

OpenAI also announced that they were making o3-mini’s thought process more transparent, with a fantastic example of this thinking shared by Sam Altman. There was also some excellent analysis by latent.space on the cost-intelligence frontier of generative AI models. We’re seeing the cost of intelligence falling rapidly and trending to zero. There are also some fun robot videos from NVIDIA and Apple, because why not?!

In Ethics News there is a report on why employees smuggle AI into work, some examples from TikTok of how Deepfakes are getting shockingly good and an update from Google DeepMind to their frontier safety framework.

In Long Reads there’s a great article from Ethan Mollick on The End of Search, The Beginning of Research and if you have a spare 3 hours, a fantastic video tutorial from Andrej Karpathy on how large language models are built and work.

I also mention Ethan Mollick 5 times in this week’s newsletter and link to a lot of the commentary and content he’s been producing this week. If you’re not subscribed to his One Useful Thing blog and following him on his various socials, you’re really missing out. This week it feels like I’m getting all my ideas from him!

Gemini 2.0 is now available to everyone

The big news of the week was the release of Google DeepMind’s Gemini 2.0 to everyone. This follows an experimental version of Gemini 2.0 Flash being released in December, but we now have the full family of Gemini 2.0 models. The table above is a useful reference to see the difference between the models. There are lots of great features and quality of life improvements across the whole family.

Gemini 2.0 Flash is available to all users but 2.0 Pro Experimental is only available to Advanced subscribers. There’s also a Flash-Lite and a Flash Thinking Experimental model. Don’t worry, it’s not you - I find all these model naming conventions incredibly confusing and hard to keep track of too!

2.0 Pro Experimental is a very impressive model. Ethan Mollick claims it’s the first GPT-5 class model with wide public release. He shared a map of western Europe with all castles marked, which the model correctly identified. 2.0 Pro Experimental is the first model to get this right - I tested with GPT-4o, o1 (o3-mini can’t analyse images), and Claude 3.5 Sonnet and none of them got it right, with them all thinking it was a map of population or infrastructure.

One test alone doesn’t show that 2.0 Pro Experimental is a next generation GPT-5 class model and only time will tell if this is really a generational jump in capability.

Potentially the bigger news is 2.0 Flash-Lite which is Google DeepMind’s most cost-efficient model yet, no doubt driven by the cost efficiencies seen with DeepSeek. It’s currently the leader in price-performance across all generative AI models available, something I get into more below.

OpenAI unveils a new ChatGPT agent for ‘deep research’

It’s rare for OpenAI to release something on a Sunday night, but that’s exactly what they did, announcing Deep Research just a few hours after last week’s newsletter went out! I suspect that this was one of the releases that Sam Altman said they ‘move up’ in response to the waves DeepSeek was making a couple of weeks ago.

OpenAI’s Deep Research is their version of Google DeepMind’s…. Deep Research that they released back in December. It’s a specialised digital AI agent that can work for you independently to research a specific topic. It will find and analyse hundreds of online sources related to the topic you want it to research and write a comprehensive report, which OpenAI claims is at the level of a research analyst (which is confirmed by people who’ve been able to test it - see below).

Deep Research is powered by OpenAI’s o3 model (the full o3, which hasn’t been released yet, not o3-mini) and uses the model’s reasoning capabilities to plan, search, interpret, and analyse text, PDFs and images it finds on the internet. It’s only available to Pro users currently (the $200 per month tier) and isn’t available to users in the UK, Switzerland or the European Economic Area. It will be coming to Plus and Team users soon, with Enterprise users to follow afterwards. Because of this, I haven’t been able to test it myself yet, but the feedback from those who have has been incredibly positive. Below are a few interesting things that have surfaced this week amongst those who have been able to test Deep Research:

Ethan Mollick shared a post showing how Deep Research basically went through the same learning journey as he did in the first year of studying for his PhD, although it did it in just a few seconds.

Tyler Cowen, an Economics Professor, thinks that Deep Research is like having a good PhD-level research assistant but it can compress what they do from 1-2 weeks into minutes.

AI Explained found that Deep Research performed much better than Google’s version and DeepSeek R1, although it tended to ask lots of clarifying questions.

Ethan Mollick again says Deep Research is good at doing research that gives you a new perspective on a topic and can help inform your own thoughts.

In summary, OpenAI’s Deep Research is very capable and impressive. It’s what I would classify as a ‘Level 3’ semi-autonomous specialised digital AI agent (more on this in my opinion piece on Web 4.0 and the rise of the Agentic Web, coming later this week). Deep Research might be a specialised, narrow AI agent but it’s already creating economic value by significantly reducing research time across a broad range of topics. I’d definitely say this is an important step towards Artificial General Intelligence that would have been hard to imagine 2-3 years ago.

OpenAI now reveals more of its o3-mini model’s thought process

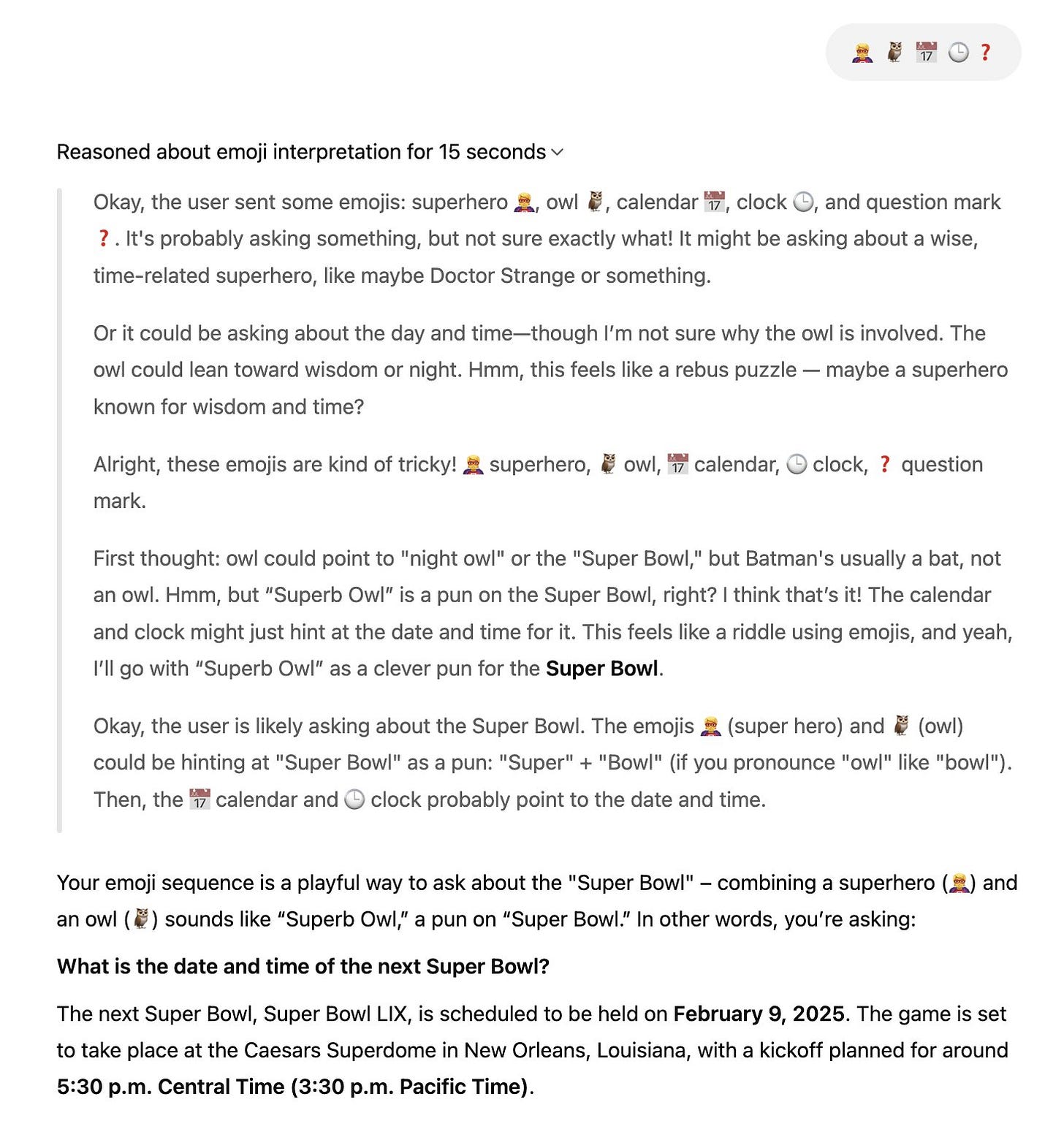

In and of itself, OpenAI updating how they display o3-mini’s thought process isn’t much to write home about. However, the example that Sam Altman used in his post about this update was too good not to share and comment on.

In the above example, o3-mini is just given the following prompt “🦸🦉📅🕒❓” and the image above shows its thought process. I think this is some incredibly impressive reasoning! I wouldn’t have been able to work out this riddle without a lot of thinking time, and even then I’m not sure I could have got it.

I was concerned that o3-mini solving this emoji-riddle might be too good to be true, so I decided to test it out myself. It took 4 attempts in 4 fresh chats for it to figure it out, but when it did it gave a great answer. It also took 31 seconds to reason - far quicker than I would have been able to solve it. You can see my results here.

It’s taking a bit of time for people to get their heads around these new reasoning models, work out what they’re for, and find good ways to use them. I hope this example helps the penny drop for many of you - they’re incredibly intelligent models that most people just haven’t found the killer use case for yet.

Let’s see if you can solve this one - 📻🙂🔺🎶. o3-mini got it first go with 12 seconds of reasoning….

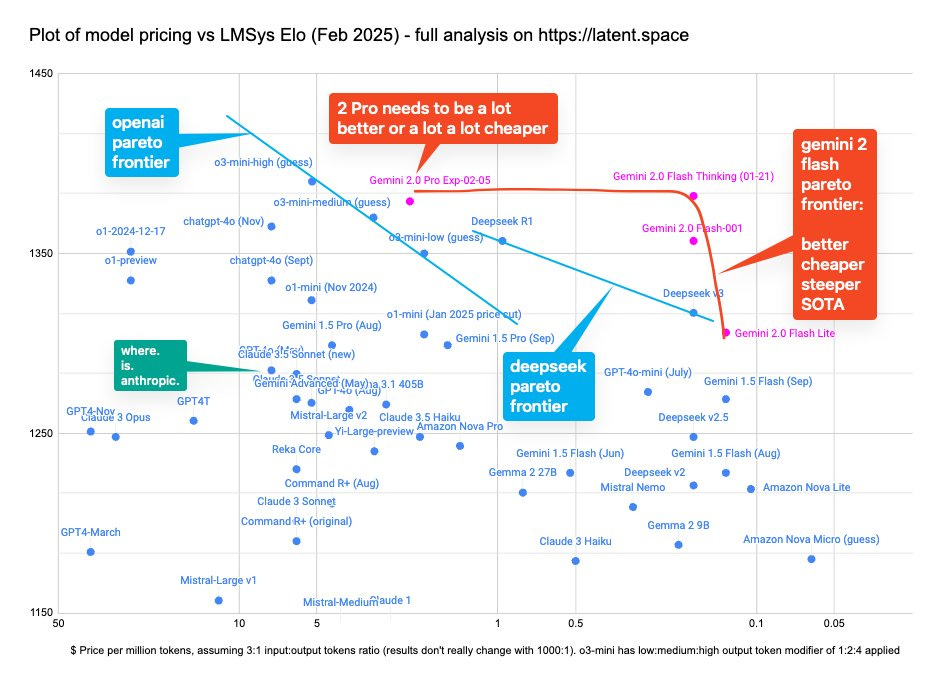

The costs of intelligence are dropping quickly

The chart above is very busy, but summarises some fantastic work by the team at latent.space. It attempts to answer a question I’ve long wanted answered: “What is the trade-off between cost and intelligence?” Just focus on the coloured lines, which represent the cost-intelligence frontier for three AI companies - there’s one for OpenAI, one for DeepSeek and one for Google DeepMind.

There are a few important things to take away from this analysis:

Gemini 2.0 Flash Thinking is currently the leader in cost-intelligence - it delivers the lowest cost to intelligence ratio, even cheaper than DeepSeek R1

We’re (arguably) still in the GPT-4 era of models and the analysis shows that the cost of that level of intelligence has dropped 1,000x in the last 18 months.

For reference Moore’s Law predicted a 2x increase in transistor density every 2 years…. the pace and progress we’re seeing on intelligence right now is astounding.

The intelligence market has obviously adjusted very quickly post the reveal of DeepSeek. In the last couple of weeks we’ve seen significant price drops from both OpenAI and Google to remain competitive.
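To make the comparison with Moore’s Law concrete, here is a rough sketch that converts both rates to a per-year factor. It uses only the two figures quoted above (1,000x in 18 months, 2x every 2 years); the function names are my own, and it assumes smooth exponential change, which real prices won’t follow exactly.

```python
import math


def annualised_factor(total_factor: float, months: float) -> float:
    """Convert 'total_factor over `months` months' into an equivalent per-year factor."""
    return total_factor ** (12.0 / months)


def halving_time_months(total_factor: float, months: float) -> float:
    """Months needed for the quantity to change by 2x at the given rate."""
    return months / math.log2(total_factor)


# Figures quoted in the text (assumed to be smooth exponential trends).
ai_cost_drop_per_year = annualised_factor(1000, 18)   # cost of GPT-4-level intelligence
moore_gain_per_year = annualised_factor(2, 24)        # Moore's Law transistor density
moore_gain_over_18_months = 2 ** (18 / 24)

print(f"AI cost drop per year:        ~{ai_cost_drop_per_year:.0f}x")
print(f"Moore's Law gain per year:    ~{moore_gain_per_year:.2f}x")
print(f"Moore's Law gain in 18 months: ~{moore_gain_over_18_months:.2f}x (vs 1,000x)")
print(f"AI cost halves roughly every {halving_time_months(1000, 18):.1f} months")
```

On these assumptions, the cost of GPT-4-level intelligence is falling by about 100x a year, while Moore’s Law works out at roughly 1.4x a year.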

To put this in context, Ethan Mollick did some analysis on the ARC-AGI challenge, on which o3 made huge waves when it scored 88% late last year. In the last 3 months we’ve seen OpenAI’s o1 get 32% on the benchmark at a cost of $3.8 per task, DeepSeek’s R1 get 15.8% at a cost of $0.06 per task and most recently we’ve seen OpenAI’s o3-mini get 35% at a cost of $0.04 per task. We don’t know what the cost of o3 will be yet, so it will be interesting to see how it compares to these costs when it’s released.
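One crude way to compare these three results is to divide each score by its cost per task. The sketch below does that with the numbers quoted above; “benchmark points per dollar” is my own illustrative metric, not something from Ethan Mollick’s analysis or the ARC-AGI leaderboard, and it ignores the fact that the last few percentage points are much harder to win than the first.

```python
# (score in %, cost per task in USD), as quoted in the text above.
results = {
    "o1": (32.0, 3.80),
    "DeepSeek R1": (15.8, 0.06),
    "o3-mini": (35.0, 0.04),
}


def points_per_dollar(score_pct: float, cost_per_task: float) -> float:
    """Illustrative efficiency metric: benchmark percentage points per dollar per task."""
    return score_pct / cost_per_task


efficiency = {name: points_per_dollar(*vals) for name, vals in results.items()}

# How many times cheaper per task o3-mini is than o1.
cost_ratio_o1_vs_o3_mini = results["o1"][1] / results["o3-mini"][1]

for name, value in sorted(efficiency.items(), key=lambda kv: kv[1], reverse=True):
    score, cost = results[name]
    print(f"{name:12s} {score:5.1f}% at ${cost:.2f}/task -> {value:7.1f} points per dollar")

print(f"o3-mini is ~{cost_ratio_o1_vs_o3_mini:.0f}x cheaper per task than o1")
```

On this measure o3-mini scores slightly higher than o1 at roughly 1/95th of the cost per task.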

For me, there are two really exciting things happening in the AI industry right now. The first is that we’re really pushing the frontier of the I in AI - we’re building models that are getting much more intelligent and that’s happening really quickly.

The second, and probably more important, is that the cost of this intelligence is dropping rapidly and trending to zero. Today you can generate a relevant one-line caption for around 40,000 unique photos with Gemini 2.0 Flash-Lite for less than a dollar. With costs dropping at a rate of 1,000x in 18 months, imagine what we’ll be able to do for a dollar in a year’s time. Imagine what we’ll be able to do in 2 years’ time, or even 5 years’ time.
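As a back-of-the-envelope illustration of the “imagine” question, the sketch below extrapolates the caption example forward. It assumes, very optimistically, that the 1,000x-in-18-months trend simply continues unchanged, and it treats “less than a dollar for 40,000 captions” as exactly one dollar; the function name and defaults are mine. Treat it as a thought experiment, not a forecast.

```python
CAPTIONS_PER_DOLLAR_TODAY = 40_000          # from the text, treating "< $1" as $1
cost_per_caption_today = 1.0 / CAPTIONS_PER_DOLLAR_TODAY


def captions_per_dollar(today: float, years: float,
                        drop_factor: float = 1000.0,
                        drop_months: float = 18.0) -> float:
    """Project captions per dollar, ASSUMING the quoted cost trend holds unchanged."""
    return today * drop_factor ** (years * 12.0 / drop_months)


print(f"Cost per caption today: ${cost_per_caption_today:.6f}")
for years in (1, 2, 5):
    projected = captions_per_dollar(CAPTIONS_PER_DOLLAR_TODAY, years)
    print(f"In {years} year(s): ~{projected:.1e} captions per dollar")
```

If the trend held for even one more year, a dollar would buy around 4 million captions instead of 40,000.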

We’re rapidly approaching the cost of human-level intelligence essentially being zero. This will have profound implications for how we view intelligence, how we use intelligence, and what that will mean for society. I don’t have any insights or answers to share with you, but it is going to be a fun ride over the next few years!

Robots mimicking real world athletes

We’ve been used to seeing real world robotics platforms (not the movie versions!) moving in unnatural ways or moving at a fraction of human speeds, so it’s great to see NVIDIA make progress on robotics that can move at human speeds and in human-like ways.

The video above shows a robot shooting a hoop like Kobe and celebrating like Cristiano. The robots are first trained in simulation and then the controlling AI model is “patched up” in the real world to make it more reliable.

Apple built Pixar’s robot lamp

This research project was obviously inspired by Pixar’s famous lamp and explores how robots can be expressive, which in turn makes them more engaging to humans than a standard robot.

Rumours are that we’ll first see this ‘robotic’ tech show up in a HomePad product for the home, which is essentially an iPad on a robotic stand. It’s great to see Apple working on robotics as I think their design heritage can bring a lot to how robots fit into the home and everyday life, exactly like this research they’re doing!

AI Ethics News

Google drops pledge not to use AI for weapons or surveillance

Keir Starmer unveils plan for large nuclear expansion across England and Wales

Long Reads

One Useful Thing - The End of Search, The Beginning of Research

Andreessen Horowitz - Setting the Agenda for Global AI Leadership: Assessing the Roles of Congress and the States

Andrej Karpathy - Deep Dive into LLMs like ChatGPT

TechCrunch - Tesla’s Dojo, a timeline

“The future is already here, it’s just not evenly distributed.”

William Gibson