No big frontier-moving news from any of the major generative AI companies this week, but lots of interesting little tidbits. So, I’m taking a slightly different approach to covering this week’s news and focusing on each of Google, OpenAI, and Anthropic and all the news they’ve dropped.

In ethics news there have been some good articles on the environment, including a new tool from Hugging Face that estimates the energy usage of your chats and a study on future data centre costs which estimates that data centres are becoming 1.34x more energy efficient every year.

I also highly recommend the two posts from Simon Willison in Long Reads this week - there’s one on how good OpenAI’s o3 is at guessing where a photo was taken and another on how o3 is now very reliable at AI-assisted search. Well worth a read!

Google

There’s a really interesting rivalry developing between Google’s Gemini 2.5 Pro and OpenAI’s o3, the two models right on the frontier of capability right now. 2.5 Pro tops the Chatbot Arena leaderboard, but o3 has taken the lead in the FictionLive benchmark, which tests working with large amounts of text and which Google had been dominating. AI Explained has a great video comparing the two models that’s worth checking out.

Google also released some interesting new information as part of Alphabet’s Q1 2025 earnings call. AI Overviews now reach more than 1.5 billion people every month (but I still think there are some hallucination issues to address).

Lastly, Google stated in a recent court filing that Gemini now has 350m active users. They also showed a slide estimating that ChatGPT has 600m weekly active users. It was only two weeks ago that Sam Altman announced in a TED Talk that ChatGPT had 500m weekly active users. As a reminder, ChatGPT only surpassed 300m weekly active users in December, and 400m back in February. It certainly feels like consumer adoption of frontier generative AI models is accelerating this year!

Source

OpenAI

There were lots of little news drops from OpenAI this week as well. The biggest announcement is that they’ve now made their new viral image generation model available in the API. This allows other applications to use the model and lets developers generate large volumes of images programmatically. This might lead to a big increase in AI slop on the internet, but at least it’s better slop than we had a few weeks ago!

OpenAI also announced a ‘lightweight’ version of their Deep Research tool for free users. If you haven’t had a chance to use a Deep Research tool from OpenAI, Google, or Anthropic yet I really encourage you to give this a go. It’s amazing how quickly it can research anything for you online and I’m sure we’ll find lots of use cases for this going forwards.

In some other smaller news, OpenAI said they’d be interested in buying Google’s Chrome browser if the company were broken up. This plays completely into OpenAI’s current strategy, with Sam Altman increasingly talking about OpenAI as a product company and not an AI research company. It’s no secret that Sam Altman wants OpenAI to become the largest consumer digital platform and he’s clearly gunning to take Google’s crown.

This might come sooner than people realise, as there was also a report this week that ChatGPT search, which directly competes with Google, is growing quickly in the EU. They’re currently serving 41.3m average monthly users with their search product, up from 11.2m in October, so it’s grown by roughly 3.7x in 6 months.
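For anyone who wants to check the arithmetic, here is a quick Python sketch using only the two figures quoted above. The ratio of the two works out to roughly 3.7x. The implied monthly rate is my own back-of-the-envelope addition and assumes smooth compounding across the six months, which the report doesn’t claim. The function names are just illustrative.

```python
def growth_multiple(start: float, end: float) -> float:
    """Return how many times larger `end` is than `start`."""
    return end / start


def implied_monthly_rate(start: float, end: float, months: int) -> float:
    """Return the constant month-on-month growth rate that would take
    `start` to `end` over `months` months.

    Assumption: growth compounds smoothly, which real usage rarely does.
    """
    return (end / start) ** (1 / months) - 1


# Figures quoted in the text, in millions of average monthly users.
october_users = 11.2
latest_users = 41.3

multiple = growth_multiple(october_users, latest_users)
monthly = implied_monthly_rate(october_users, latest_users, months=6)

print(f"Growth multiple: {multiple:.1f}x")
print(f"Implied monthly growth: {monthly:.0%}")
```

On those numbers, the smooth-compounding assumption implies growth of a little under a quarter every month, which gives a feel for how steep that curve is.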

Lastly, Sam Altman admitted that people being polite to ChatGPT was costing them tens of millions of dollars, but he thinks it’s money well spent!

Source

Anthropic

We also had some interesting comments and news come out of Anthropic this week. We had a piece published by Dario Amodei, their CEO, about the urgent need to understand how models work, and the company warned that AI employees are probably only about a year away.

Jason Clinton, Anthropic’s CISO, believes that we will see AI agents in the next year that have their own memories, their own roles in companies, and a level of autonomy that far exceeds what AI agents have today. He’s focused on figuring out what this might mean from a corporate security perspective - do these AI agents need their own individual corporate accounts and passwords, what kind of network access should they be allowed, and who should be responsible for managing their actions?

Anthropic also announced that they’re launching a new program to study AI ‘model welfare’. This is a research program to determine whether the ‘welfare’ of an AI model deserves moral consideration and whether the models can suffer from distress. There are still disagreements over how much we should anthropomorphise AI models, but regardless of people’s beliefs I think looking at these kinds of things will help us understand what’s going on inside these models and can only be a good thing.

Source

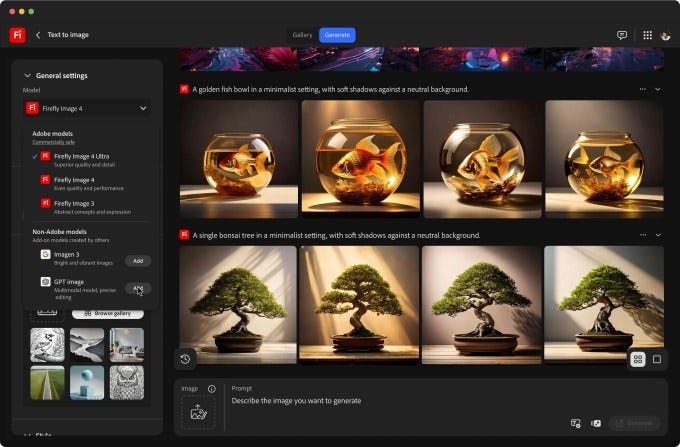

Adobe releases new Firefly image generation models

These new image generation models from Adobe aim to compete with OpenAI’s new image generation model. Image Model 4 (not the most creatively named!) is positioned as an everyday model, while the ‘Ultra’ variant is for when you need more detail and realism in your images.

Adobe’s 4th generation models improve on the 3rd generation in terms of quality, speed, and control over things like structure, style, camera angles, and zoom.

Firefly has become a really interesting platform for Adobe now as it’s positioned as a ‘commercially safe’ creative AI solution that hosts image and video generation capabilities using its own models and other third-party models (like OpenAI’s image generation models). It will soon be integrated into Adobe’s wider Creative Cloud, which will be a big feature as Adobe’s platform is the most widely used across the creative industries.

Character.AI unveils AvatarFX, an AI video model to create lifelike chatbots

I haven’t written much about Character.AI since August last year, when their CEO left to return to Google, mostly because there hasn’t been much news. So it’s interesting to see this new release from them that will allow users to animate their characters in a variety of styles and voices.

There are plenty of issues with this though, as the platform could be used to create deepfakes with just a photo. To try and combat this, Character.AI will apply watermarks to the videos to make it clear that the footage isn’t real, block the creation of videos of minors, and try to filter images of real people to make them less recognisable.

Character.AI has been a controversial platform since its launch a few years ago, and is currently facing lots of safety concerns about users forming emotional attachments to their characters. It’s an interesting area to keep an eye on as it might help us better understand our relationship with technology as it becomes more human-like.

AI Ethics News

This tool estimates how much electricity your chatbot messages consume

Within six years, building the leading AI data center may cost $200B

Collective licence to ensure UK authors get paid for works used to train AI

Public comments to White House on AI policy touch on copyright, tariffs

OpenAI’s GPT-4.1 may be less aligned than the company’s previous AI models

An AI-generated radio host in Australia went unnoticed for months

OpenAI seeks to make its upcoming ‘open’ AI model best-in-class

Crowdsourced AI benchmarks have serious flaws, some experts say

Long Reads

Simon Willison - Watching o3 guess a photo’s location is surreal, dystopian and wildly entertaining

Dario Amodei - The Urgency of Interpretability

Simon Willison - AI assisted search-based research actually works now

Stratechery - Apple and the Ghosts of Companies Past

TED 2025 - Sam Altman Talks the Future of AI, Safety and Power

Velvet Shark - Why do AI Company Logos Look Like Buttholes?

“The future is already here, it’s just not evenly distributed.”

William Gibson